Thank you for responding...

pve-manager/8.0.4/d258a813cfa6b390 (running kernel: 6.2.16-10-pve)

/etc/pve/lxc/200.conf

arch: amd64

cores: 2

features: nesting=1

hostname: FileServer

memory: 512

mp0: NVME:200/vm-200-disk-1.raw,mp=/mnt/nvme01,backup=1,size=256G

net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=F2:C7:3C:B9:88:BA,ip=dhcp,type=veth

ostype: debian

rootfs: NVME:200/vm-200-disk-0.raw,size=8G

swap: 512

unprivileged: 1
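
In case it helps anyone reading the config, here is a minimal Python sketch that splits the `mp0` line above into its parts. The helper name `parse_volume_line` is hypothetical, and treating the value as "volume first, then comma-separated key=value options" is an assumption based on how this line looks, not on Proxmox's own parser.

```python
def parse_volume_line(line: str) -> dict:
    """Split a 'key: volume,opt=val,...' config line into a dict (illustrative only)."""
    key, _, value = line.partition(": ")
    volume, *options = value.split(",")
    parsed = {"key": key, "volume": volume}
    for opt in options:
        # Each remaining item is assumed to be name=value.
        name, _, val = opt.partition("=")
        parsed[name] = val
    return parsed


mp0 = parse_volume_line(
    "mp0: NVME:200/vm-200-disk-1.raw,mp=/mnt/nvme01,backup=1,size=256G"
)
# The volume part is assumed to be '<storage>:<volume name>'.
storage, _, volname = mp0["volume"].partition(":")
print(storage, volname, mp0["mp"], mp0["size"])
```

Read this way, the 256G mount point is a raw image on the storage named NVME and is mounted at /mnt/nvme01 inside the container.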

Not sure how to use journalctl or what to look for - sorry.

al...

Thanks for responding!

I would need to try migrating the VM again in order to check. The last two migration attempts required me to shut down both nodes to get the process to stop, so I am a bit hesitant to try again.

al...

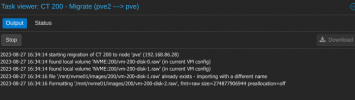

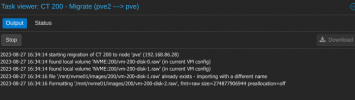

I did see this...

Aug 27 15:41:51 pve2 pvedaemon[1061]: migration aborted

Aug 27 15:41:51 pve2 pvedaemon[1061]: send/receive failed, cleaning up snapshot(s)..

Aug 27 15:40:42 pve2 pvedaemon[932]: worker 3960 started

Aug 27 15:40:42 pve2 pvedaemon[932]: starting 1 worker(s)

Aug 27 15:40:42 pve2 pvedaemon[932]: worker 933 finished

Aug 27 15:40:42 pve2 pvedaemon[933]: worker exit

Aug 27 15:40:05 pve2 pveproxy[941]: worker 3851 started

Aug 27 15:40:05 pve2 pveproxy[941]: starting 1 worker(s)

Aug 27 15:40:05 pve2 pveproxy[941]: worker 943 finished

Aug 27 15:40:05 pve2 pveproxy[943]: worker exit

Aug 27 15:39:37 pve2 pveproxy[941]: worker 3789 started

Aug 27 15:39:37 pve2 pveproxy[941]: starting 1 worker(s)

Aug 27 15:39:37 pve2 pveproxy[941]: worker 942 finished

Aug 27 15:39:37 pve2 pveproxy[942]: worker exit

Aug 27 15:38:02 pve2 systemd[1]: run-credentials-systemd\x2dtmpfiles\x2dclean.service.mount: Deactivated successfully.

Aug 27 15:38:02 pve2 systemd[1]: Finished systemd-tmpfiles-clean.service - Cleanup of Temporary Directories.

Aug 27 15:38:02 pve2 systemd[1]: systemd-tmpfiles-clean.service: Deactivated successfully.

Aug 27 15:38:02 pve2 systemd[1]: Starting systemd-tmpfiles-clean.service - Cleanup of Temporary Directories...

Aug 27 15:26:54 pve2 pmxcfs[773]: [status] notice: received log

Aug 27 15:23:43 pve2 pvedaemon[934]: <root@pam> starting task UPID:pve2:00000425:0000128C:64EBA2BF:vzmigrate:200:root@pam:
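
For what it's worth, the task ID in that last entry can be decoded to tie it to the other log lines. Below is a minimal Python sketch, assuming the ID reads `UPID:pve2:00000425:0000128C:64EBA2BF:vzmigrate:200:root@pam:` and that the colon-separated fields are node, worker PID (hex), process start (hex), task start time (hex Unix seconds), task type, guest ID, and user. That field layout is my understanding rather than something shown in the log, and `parse_upid` is a hypothetical helper name.

```python
from datetime import datetime, timezone


def parse_upid(upid: str) -> dict:
    """Decode a task UPID string under the assumed field layout (illustrative only)."""
    fields = upid.split(":")
    if fields[0] != "UPID" or len(fields) < 8:
        raise ValueError(f"not a UPID string: {upid!r}")
    _, node, pid, pstart, starttime, task_type, guest_id, user = fields[:8]
    return {
        "node": node,
        "pid": int(pid, 16),        # hex -> decimal worker PID
        "pstart": int(pstart, 16),  # process start value, kept as a plain number
        "started": datetime.fromtimestamp(int(starttime, 16), tz=timezone.utc),
        "type": task_type,
        "id": guest_id,
        "user": user,
    }


task = parse_upid("UPID:pve2:00000425:0000128C:64EBA2BF:vzmigrate:200:root@pam:")
print(task["pid"], task["type"], task["id"], task["started"].isoformat())
```

Decoded like this, the worker PID comes out as 1061, which is the same `pvedaemon[1061]` that logged "migration aborted" at 15:41:51, so those lines do belong to the migration of container 200 started at 15:23:43.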