Hey, hope everyone is doing well!

First of all, I'm sorry for the long post, I just try to give all the important info I have, basically it is explained in the title, at a random moment, ALL my vms shut down, and I tried restarting proxmox (the Poweredge R820 server directly) but same error :

(I still have 1vm running because it's on another drive)

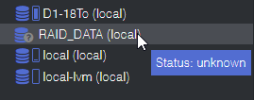

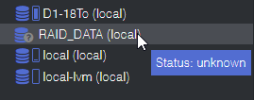

And the storage status is also unknown in the Gui :

This is the Node informations I have, it is set up as a directory :

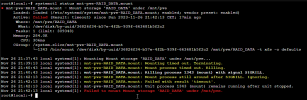

The systemctl status of the mnt-pve-RAID_DATA.mount :

Cat of storage.cfg :

The RAID_DATA fdisk -l output :

output of ls -lh disk/by-uuid :

And sorry for the long text, but here is my lsblk if it can help :

SDA - SDA1 is the RAID_DATA (4.9T)

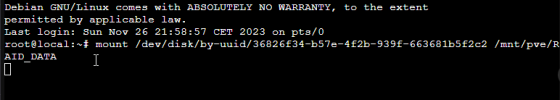

I already tried to mount manually, but it was leading me to litteraly infinit consol waiting ? it was doing nothing

If someone have any idea I would appreciate any help

First of all, I'm sorry for the long post, I just try to give all the important info I have, basically it is explained in the title, at a random moment, ALL my vms shut down, and I tried restarting proxmox (the Poweredge R820 server directly) but same error :

(I still have 1vm running because it's on another drive)

Code:

unable to activate storage 'RAID_DATA' - directory is expected to be a mount point but is not mounted: '/mnt/pve/RAID_DATA'And the storage status is also unknown in the Gui :

This is the Node informations I have, it is set up as a directory :

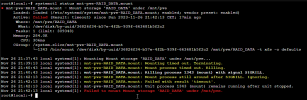
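The error means Proxmox refuses to use `/mnt/pve/RAID_DATA` unless a filesystem is actually mounted there (that is what `is_mountpoint 1` in the storage.cfg below requests). The following is a minimal sketch, assuming a POSIX shell with awk and a Linux `/proc`, that checks the same condition by hand. `is_mounted` is a hypothetical helper name, not a Proxmox command, and it only approximates whatever check Proxmox runs internally. util-linux's `mountpoint /mnt/pve/RAID_DATA` gives a similar answer.

```shell
#!/bin/sh
# Hypothetical helper (not part of Proxmox): succeed if PATH appears as a
# mount point in /proc/self/mounts. Field 2 of each line is the mount point.
# Paths containing spaces are escaped there as \040; this sketch ignores that.
is_mounted() {
    awk -v target="$1" '$2 == target { found = 1 } END { exit !found }' /proc/self/mounts
}

# Check the directory from the error message.
if is_mounted /mnt/pve/RAID_DATA; then
    echo "/mnt/pve/RAID_DATA is mounted"
else
    echo "/mnt/pve/RAID_DATA is NOT mounted"
fi
```

If it reports "NOT mounted", `journalctl -b -u mnt-pve-RAID_DATA.mount` may show why the systemd mount unit failed during this boot.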

The `systemctl status` of mnt-pve-RAID_DATA.mount:

Output of `cat /etc/pve/storage.cfg`:

Bash:

    root@local:~# cat /etc/pve/storage.cfg
    dir: local
        path /var/lib/vz
        content backup,vztmpl,iso

    lvmthin: local-lvm
        thinpool data
        vgname pve
        content images,rootdir

    dir: RAID_DATA
        path /mnt/pve/RAID_DATA
        content backup,rootdir,images,vztmpl,snippets,iso
        is_mountpoint 1
        nodes local
        preallocation falloc
        shared 0

    zfspool: D1-18To
        pool D1-18To
        content images,rootdir
        mountpoint /D1-18To
        nodes local

The RAID_DATA `fdisk -l` output:

Code:

    Disk /dev/sda: 4.91 TiB, 5397163278336 bytes, 10541334528 sectors
    Disk model: PERC H710
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: gpt
    Disk identifier: 22C1FFAC-A004-4A02-854E-42A22353169B

    Device     Start         End     Sectors Size Type
    /dev/sda1   2048 10541334494 10541332447 4.9T Linux filesystem

Output of `ls -lh /dev/disk/by-uuid/`:

Code:

    root@local:~# ls -lh /dev/disk/by-uuid/
    total 0
    lrwxrwxrwx 1 root root 12 Nov 26 21:37 02943FE6943FDABD -> ../../zd48p3
    lrwxrwxrwx 1 root root 10 Nov 26 21:37 12CC-4126 -> ../../sdc1
    lrwxrwxrwx 1 root root 10 Nov 26 21:37 15791782985296914653 -> ../../sdb1
    lrwxrwxrwx 1 root root 10 Nov 26 21:37 20c893b4-0f05-4256-9728-0370dfe544ce -> ../../dm-1
    lrwxrwxrwx 1 root root 11 Nov 26 21:37 22E649F6E649CB2D -> ../../zd0p2
    lrwxrwxrwx 1 root root 12 Nov 26 21:37 2b0cb994-b7fb-4580-8f1a-b11a4018401b -> ../../zd32p2
    lrwxrwxrwx 1 root root 10 Nov 26 21:37 36826f34-b57e-4f2b-939f-663681b5f2c2 -> ../../sda1
    lrwxrwxrwx 1 root root 15 Nov 26 21:37 62D1-6091 -> ../../nvme0n1p2
    lrwxrwxrwx 1 root root 11 Nov 26 21:37 7042486142482E62 -> ../../zd0p1
    lrwxrwxrwx 1 root root 10 Nov 26 21:37 8bb64fdf-b3c9-48ea-b854-52a22a20a30f -> ../../dm-0
    lrwxrwxrwx 1 root root 12 Nov 26 21:37 de3462f2-10c1-4ced-af89-d56654ee8811 -> ../../zd16p2
    lrwxrwxrwx 1 root root 12 Nov 26 21:37 FA16ECEC16ECAAB9 -> ../../zd48p2

And sorry again for the long text, but here is my `lsblk` output in case it helps:

`sda` / `sda1` is the RAID_DATA disk (4.9T):

Code:

    root@local:~# lsblk
    NAME                 MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
    sda                    8:0    0   4.9T  0 disk
    └─sda1                 8:1    0   4.9T  0 part
    sdb                    8:16   0  16.4T  0 disk
    ├─sdb1                 8:17   0  16.4T  0 part
    └─sdb9                 8:25   0     8M  0 part
    sdc                    8:32   1   956M  0 disk
    ├─sdc1                 8:33   1   200M  0 part
    └─sdc2                 8:34   1   756M  0 part
    zd0                  230:0    0    32G  0 disk
    ├─zd0p1              230:1    0   549M  0 part
    └─zd0p2              230:2    0  31.5G  0 part
    zd16                 230:16   0   100G  0 disk
    ├─zd16p1             230:17   0     1M  0 part
    ├─zd16p2             230:18   0     2G  0 part
    └─zd16p3             230:19   0    98G  0 part
    zd32                 230:32   0   100G  0 disk
    ├─zd32p1             230:33   0     1M  0 part
    ├─zd32p2             230:34   0     2G  0 part
    └─zd32p3             230:35   0    98G  0 part
    zd48                 230:48   0  14.6T  0 disk
    ├─zd48p1             230:49   0    16M  0 part
    ├─zd48p2             230:50   0  14.6T  0 part
    └─zd48p3             230:51   0     2G  0 part
    nvme0n1              259:0    0 465.8G  0 disk
    ├─nvme0n1p1          259:1    0  1007K  0 part
    ├─nvme0n1p2          259:2    0   512M  0 part /boot/efi
    └─nvme0n1p3          259:3    0 465.3G  0 part
      ├─pve-swap         253:0    0     8G  0 lvm  [SWAP]
      ├─pve-root         253:1    0    96G  0 lvm  /
      ├─pve-data_tmeta   253:2    0   3.5G  0 lvm
      │ └─pve-data       253:4    0 338.4G  0 lvm
      └─pve-data_tdata   253:3    0 338.4G  0 lvm
        └─pve-data       253:4    0 338.4G  0 lvm

I already tried to mount it manually, but the command just hung indefinitely at the console. It was doing nothing.
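Since the manual mount hung, one cautious way to retry is to put a bound on the wait and look at the kernel messages afterwards. The sketch below assumes root access, `timeout` from GNU coreutils, and `blkid`/`dmesg` from util-linux. `try_mount_ro` is a hypothetical helper. Mounting read-only (`-o ro`) is an added precaution that was not tried in the post. Note that ext4 may still replay its journal on a read-only mount. A mount stuck in uninterruptible I/O may also ignore the SIGTERM that `timeout` sends.

```shell
#!/bin/sh
# Hypothetical diagnostic helper: try a read-only mount of DEVICE on DIR,
# but stop waiting after SECS seconds instead of blocking the console.
# Assumption: run as root on the Proxmox host.
try_mount_ro() {
    dev="$1"; dir="$2"; secs="$3"

    # Show which filesystem type (and UUID) is detected on the partition.
    blkid "$dev" || echo "blkid could not read $dev" >&2

    # timeout(1) sends SIGTERM after $secs seconds and then returns 124.
    # A process stuck in uninterruptible I/O (D state) may still not exit.
    timeout "$secs" mount -o ro "$dev" "$dir"
    rc=$?
    if [ "$rc" -eq 124 ]; then
        echo "mount did not finish within ${secs}s" >&2
    fi

    # Recent kernel messages often show the I/O or filesystem error behind a hang.
    dmesg | tail -n 20 >&2
    return "$rc"
}

# Example call, using the sda1 UUID and mount point from the outputs above:
# try_mount_ro /dev/disk/by-uuid/36826f34-b57e-4f2b-939f-663681b5f2c2 /mnt/pve/RAID_DATA 60
```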

If anyone has an idea, I'd appreciate any help.
