Hi group

I could really use some help!

After a reboot of my Proxmox server, one of my VMs can no longer boot.

And of course it is the most important VM I have running on that Proxmox server.

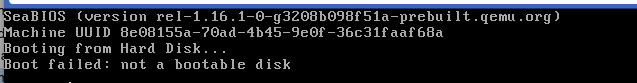

This is the error I get when I try to boot the VM:

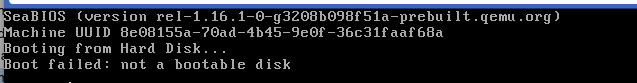

I do of course have backups of my VMs and LXCs, but restoring the backups of this particular VM gives exactly the same problem. All of the backups!

There aren't any entries in the logs I've checked that explain why this VM won't boot.

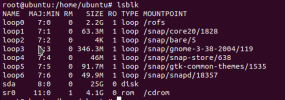

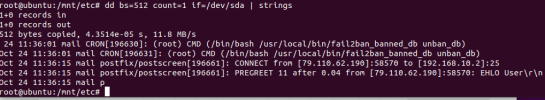

The system can still see the partitions on the disk belonging to this non-booting VM.

Any help or suggestion is appreciated.

```
root@pve1:/var/log/pve/tasks# pveversion -v
proxmox-ve: 7.4-1 (running kernel: 5.15.126-1-pve)
pve-manager: 7.4-17 (running version: 7.4-17/513c62be)
pve-kernel-5.15: 7.4-7
pve-kernel-5.13: 7.1-9
pve-kernel-5.0: 6.0-11
pve-kernel-5.15.126-1-pve: 5.15.126-1
pve-kernel-5.15.116-1-pve: 5.15.116-1
pve-kernel-5.15.108-1-pve: 5.15.108-2
pve-kernel-5.15.107-2-pve: 5.15.107-2
pve-kernel-5.15.107-1-pve: 5.15.107-1
pve-kernel-5.15.104-1-pve: 5.15.104-2
pve-kernel-5.15.102-1-pve: 5.15.102-1
pve-kernel-5.15.85-1-pve: 5.15.85-1
pve-kernel-5.15.83-1-pve: 5.15.83-1
pve-kernel-5.15.74-1-pve: 5.15.74-1
pve-kernel-5.13.19-6-pve: 5.13.19-15
pve-kernel-5.13.19-2-pve: 5.13.19-4
pve-kernel-5.0.21-5-pve: 5.0.21-10
pve-kernel-5.0.15-1-pve: 5.0.15-1
ceph-fuse: 14.2.21-1
corosync: 3.1.7-pve1
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown: 0.8.36+pve2
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.24-pve2
libproxmox-acme-perl: 1.4.4
libproxmox-backup-qemu0: 1.3.1-1
libproxmox-rs-perl: 0.2.1
libpve-access-control: 7.4.1
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.4-2
libpve-guest-common-perl: 4.2-4
libpve-http-server-perl: 4.2-3
libpve-rs-perl: 0.7.7
libpve-storage-perl: 7.4-3
libqb0: 1.0.5-1
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 5.0.2-2
lxcfs: 5.0.3-pve1
novnc-pve: 1.4.0-1
proxmox-backup-client: 2.4.3-1
proxmox-backup-file-restore: 2.4.3-1
proxmox-kernel-helper: 7.4-1
proxmox-mail-forward: 0.1.1-1
proxmox-mini-journalreader: 1.3-1
proxmox-offline-mirror-helper: 0.5.2
proxmox-widget-toolkit: 3.7.3
pve-cluster: 7.3-3
pve-container: 4.4-6
pve-docs: 7.4-2
pve-edk2-firmware: 3.20230228-4~bpo11+1
pve-firewall: 4.3-5
pve-firmware: 3.6-5
pve-ha-manager: 3.6.1
pve-i18n: 2.12-1
pve-qemu-kvm: 7.2.0-8
pve-xtermjs: 4.16.0-2
qemu-server: 7.4-4
smartmontools: 7.2-pve3
spiceterm: 3.2-2
swtpm: 0.8.0~bpo11+3
vncterm: 1.7-1
zfsutils-linux: 2.1.11-pve1
```
