Hi

Every so often, all guest VMs suddenly terminate at the same time. Can you please help me troubleshoot why that happens?

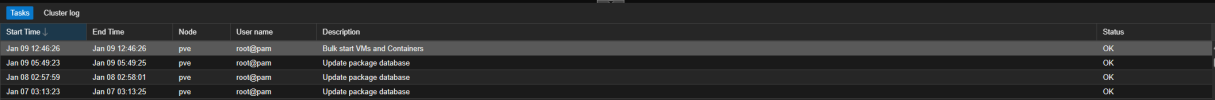

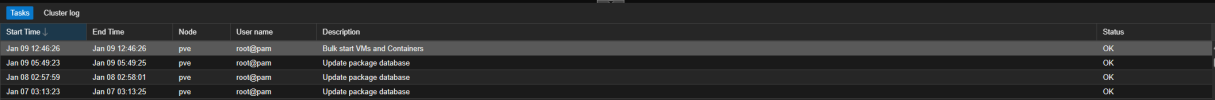

I see "Bulk start VMs and Containers" in the log.

dmesg on the Proxmox host shows only normal start-up messages, with nothing to explain why the VMs were terminated.

All VMs are running Windows 11.

Should I be looking anywhere else for logs?

root@pve:~# dmesg

[ 0.000000] Linux version 6.5.11-7-pve (build@proxmox) (gcc (Debian 12.2.0-14) 12.2.0, GNU ld (GNU Binutils for Debian) 2.40) #1 SMP PREEMPT_DYNAMIC PMX 6.5.11-7 (2023-12-05T09:44Z) ()

[ 0.000000] Command line: BOOT_IMAGE=/boot/vmlinuz-6.5.11-7-pve root=/dev/mapper/pve-root ro quiet

[ 0.000000] KERNEL supported cpus:

[ 0.000000] Intel GenuineIntel

[ 0.000000] AMD AuthenticAMD

[ 0.000000] Hygon HygonGenuine

[ 0.000000] Centaur CentaurHauls

[ 0.000000] zhaoxin Shanghai

[ 0.000000] BIOS-provided physical RAM map:

Thanks in advance!

SMK
