/usr/bin/kvm \

-id 102 \

-name TrueNAS \

-no-shutdown \

-chardev 'socket,id=qmp,path=/var/run/qemu-server/102.qmp,server=on,wait=off' \

-mon 'chardev=qmp,mode=control' \

-chardev 'socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5' \

-mon 'chardev=qmp-event,mode=control' \

-pidfile /var/run/qemu-server/102.pid \

-daemonize \

-smbios 'type=1,uuid=6d4faa8b-af3a-4f40-8321-f192cdcc0549' \

-smp '6,sockets=1,cores=6,maxcpus=6' \

-nodefaults \

-boot 'menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg' \

-vnc 'unix:/var/run/qemu-server/102.vnc,password=on' \

-cpu kvm64,enforce,+kvm_pv_eoi,+kvm_pv_unhalt,+lahf_lm,+sep \

-m 10240 \

-device 'pci-bridge,id=pci.1,chassis_nr=1,bus=pci.0,addr=0x1e' \

-device 'pci-bridge,id=pci.2,chassis_nr=2,bus=pci.0,addr=0x1f' \

-device 'vmgenid,guid=ac42dea7-d3fc-4c73-9b8f-b0da859c9def' \

-device 'piix3-usb-uhci,id=uhci,bus=pci.0,addr=0x1.0x2' \

-device 'usb-tablet,id=tablet,bus=uhci.0,port=1' \

-device 'VGA,id=vga,bus=pci.0,addr=0x2' \

-device 'virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x3,free-page-reporting=on' \

-iscsi 'initiator-name=iqn.1993-08.org.debian:01:6c6f19a981f' \

-device 'virtio-scsi-pci,id=scsihw0,bus=pci.0,addr=0x5' \

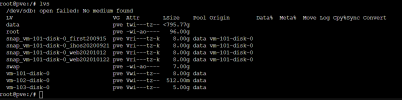

-drive 'file=/dev/pve/vm-102-disk-0,if=none,id=drive-scsi0,format=raw,cache=none,aio=io_uring,detect-zeroes=on' \

-device 'scsi-hd,bus=scsihw0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0,rotation_rate=1,bootindex=100' \

-drive 'file=/dev/disk/by-id/wwn-0x5000c500e60216a8,if=none,id=drive-scsi1,format=raw,cache=none,aio=io_uring,detect-zeroes=on' \

-device 'scsi-hd,bus=scsihw0.0,channel=0,scsi-id=0,lun=1,drive=drive-scsi1,id=scsi1' \

-drive 'file=/dev/disk/by-id/wwn-0x5000c500e60141ec,if=none,id=drive-scsi2,format=raw,cache=none,aio=io_uring,detect-zeroes=on' \

-device 'scsi-hd,bus=scsihw0.0,channel=0,scsi-id=0,lun=2,drive=drive-scsi2,id=scsi2' \

-drive 'file=/dev/disk/by-id/wwn-0x5000c500e601c85b,if=none,id=drive-scsi3,format=raw,cache=none,aio=io_uring,detect-zeroes=on' \

-device 'scsi-hd,bus=scsihw0.0,channel=0,scsi-id=0,lun=3,drive=drive-scsi3,id=scsi3' \

-netdev 'type=tap,id=net0,ifname=tap102i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on' \

-device 'virtio-net-pci,mac=0E:E0:98:3F:8D:39,netdev=net0,bus=pci.0,addr=0x12,id=net0' \

-machine 'type=pc+pve0'
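
The `-drive`/`scsi-hd` pairs for `scsi1` to `scsi3` hand three whole physical disks, addressed by their stable `/dev/disk/by-id/wwn-*` names, to the guest as LUNs 1 to 3 on the `scsihw0` controller. A minimal sketch, assuming those disks were attached with Proxmox's `qm set <vmid> -scsiN /dev/disk/by-id/<id>` passthrough syntax (an assumption; the VM configuration itself is not shown here), is a dry run that prints the corresponding commands instead of executing them. `print_passthrough_cmds` is a hypothetical helper name. On the Proxmox host, `qm showcmd 102 --pretty` prints the generated KVM command line for comparison.

```shell
#!/bin/sh
# Dry run (hypothetical helper): print, but do not execute, the qm commands
# that would attach each by-id disk to the given VM as scsi1, scsi2, ...
# Assumes the disk-to-slot order matches the lun=1..3 mapping above.
print_passthrough_cmds() {
  vmid=$1
  shift
  slot=1
  for id in "$@"; do
    echo "qm set $vmid -scsi$slot /dev/disk/by-id/$id"
    slot=$((slot + 1))
  done
}

print_passthrough_cmds 102 \
  wwn-0x5000c500e60216a8 \
  wwn-0x5000c500e60141ec \
  wwn-0x5000c500e601c85b
```

Printing rather than running keeps the sketch safe to try on any machine; the argument order mirrors the `drive-scsi1` to `drive-scsi3` lines above.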