Hi,

I've been reading about others facing a similar issue, but I wanted to share my case and see if there is a solution. So far I haven't found one that I could understand or implement. Sorry if it's obvious, but please help!

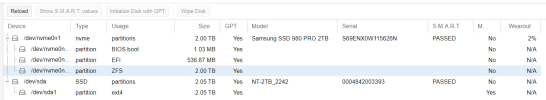

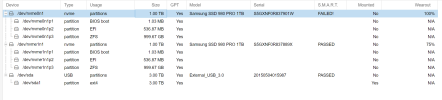

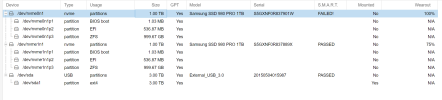

I've been running Proxmox for a while now. In Aug 2022 I bought two Samsung SSD 980 Pro 1 TB drives and configured them as RAID 1 (a mirror) with ZFS.

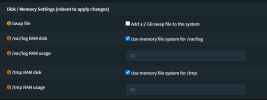

I haven't changed any settings for it; everything is still at the defaults from the initial installation. I don't run a database on it, just a firewall (OPNsense on ZFS in a VM) and some smaller VMs and containers.

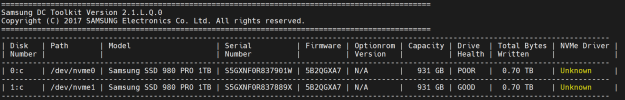

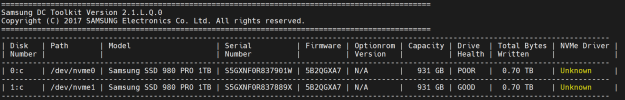

Now I have to admit I didn't really pay attention to the rapidly increasing wear until I got SMART errors today! The wear level is astonishing and very annoying, because it seems to have killed both SSDs. I know they're not enterprise SSDs, but they're not cheap crap either, and quite frankly even an enterprise SSD would be in trouble with over 700 TB written in 16 months!

It seems one disk is already dead, with 774 TB written in ~16 months!!!
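
To put that figure in perspective, here is a rough back-of-the-envelope calculation of the average write rate it implies. This is only a sketch: I'm assuming 16 months is about 16 × 30.44 days, decimal terabytes, and a warranty rating of 600 TBW for the 1 TB 980 Pro (that last number is my recollection of the spec sheet, not something taken from the SMART output).

```python
# Average write rate implied by 774 TB written in ~16 months.
# Assumptions (not measured): 16 months ~= 16 * 30.44 days, 1 TB = 10**12 bytes,
# and a rated endurance of 600 TBW for the 1 TB model.
TB = 10**12
written_tb = 774
days = 16 * 30.44
rated_tbw = 600  # assumed warranty rating, see note above

tb_per_day = written_tb / days
mb_per_second = written_tb * TB / (days * 86_400) / 10**6
rated_fraction = written_tb / rated_tbw

print(f"{tb_per_day:.2f} TB/day")
print(f"{mb_per_second:.1f} MB/s sustained")
print(f"{rated_fraction:.0%} of the assumed rated endurance")
```

Under those assumptions that works out to roughly 1.6 TB per day, or about 18 MB/s written around the clock, which seems far more than a firewall and a few small VMs should produce.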

Are there sensible config changes that I can still make to extend the remaining lifetime? I don't want to replace the SSDs now, but if I have to, what would be a sensible alternative? I'm not prepared to throw another 200+ Euro out of the window!

Some further info below.

Note: It says 3,197 power-on hours, which would be around 133 days. That can't be right, as the machine has been running 24/7...
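
For reference, this is the quick check behind that note. It is a sketch that assumes the drives have been installed for about 16 months (16 × 30.44 days) and that the host really was powered on the whole time.

```python
# Compare the reported power-on hours with what 24/7 operation would give.
# Assumptions: ~16 months since installation, host never powered off.
reported_hours = 3197
expected_hours = 16 * 30.44 * 24

reported_days = reported_hours / 24
ratio = reported_hours / expected_hours

print(f"reported: {reported_days:.0f} days")
print(f"expected: {expected_hours:.0f} hours")
print(f"reported / expected: {ratio:.0%}")
```

So the counter shows only about a quarter of the hours I would expect from continuous operation.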

Code:

    root@pve-1:~# zpool list
    NAME    SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
    rpool   928G   259G   669G        -         -    41%    27%  1.00x    ONLINE  -
