I have a VM that has no network connectivity when I pass through a GPU; when I remove the GPU, networking works again. I believe I've set up IOMMU correctly, but please double-check my work.


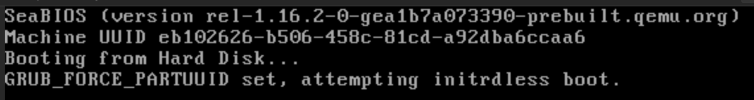


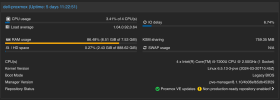

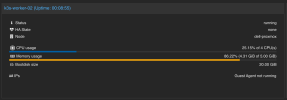


I believe I'm running GRUB as my bootloader.

```bash
root@dell-proxmox:~# proxmox-boot-tool status
Re-executing '/usr/sbin/proxmox-boot-tool' in new private mount namespace..
System currently booted with legacy bios
3AC6-1450 is configured with: grub (versions: 6.5.13-1-pve, 6.5.13-3-pve)
```

The GPU in question is the Intel integrated GPU (HD Graphics 620 at 00:02.0):

```bash
root@dell-proxmox:~# lspci -nn
00:00.0 Host bridge [0600]: Intel Corporation Xeon E3-1200 v6/7th Gen Core Processor Host Bridge/DRAM Registers [8086:5904] (rev 02)
00:02.0 VGA compatible controller [0300]: Intel Corporation HD Graphics 620 [8086:5916] (rev 02)
00:04.0 Signal processing controller [1180]: Intel Corporation Xeon E3-1200 v5/E3-1500 v5/6th Gen Core Processor Thermal Subsystem [8086:1903] (rev 02)
00:13.0 Non-VGA unclassified device [0000]: Intel Corporation Sunrise Point-LP Integrated Sensor Hub [8086:9d35] (rev 21)
00:14.0 USB controller [0c03]: Intel Corporation Sunrise Point-LP USB 3.0 xHCI Controller [8086:9d2f] (rev 21)
00:14.2 Signal processing controller [1180]: Intel Corporation Sunrise Point-LP Thermal subsystem [8086:9d31] (rev 21)
00:15.0 Signal processing controller [1180]: Intel Corporation Sunrise Point-LP Serial IO I2C Controller #0 [8086:9d60] (rev 21)
00:15.1 Signal processing controller [1180]: Intel Corporation Sunrise Point-LP Serial IO I2C Controller #1 [8086:9d61] (rev 21)
00:16.0 Communication controller [0780]: Intel Corporation Sunrise Point-LP CSME HECI #1 [8086:9d3a] (rev 21)
00:17.0 SATA controller [0106]: Intel Corporation Sunrise Point-LP SATA Controller [AHCI mode] [8086:9d03] (rev 21)
00:1c.0 PCI bridge [0604]: Intel Corporation Sunrise Point-LP PCI Express Root Port #5 [8086:9d14] (rev f1)
00:1f.0 ISA bridge [0601]: Intel Corporation Sunrise Point-LP LPC Controller [8086:9d58] (rev 21)
00:1f.2 Memory controller [0580]: Intel Corporation Sunrise Point-LP PMC [8086:9d21] (rev 21)
00:1f.3 Audio device [0403]: Intel Corporation Sunrise Point-LP HD Audio [8086:9d71] (rev 21)
00:1f.4 SMBus [0c05]: Intel Corporation Sunrise Point-LP SMBus [8086:9d23] (rev 21)
01:00.0 Network controller [0280]: Intel Corporation Wireless 3165 [8086:3165] (rev 79)
```

I updated `/etc/default/grub` and ran `update-grub`:

```bash
root@dell-proxmox:~# cat /etc/default/grub
# If you change this file, run 'update-grub' afterwards to update
# /boot/grub/grub.cfg.
# For full documentation of the options in this file, see:
# info -f grub -n 'Simple configuration'
GRUB_DEFAULT=0
GRUB_TIMEOUT=5
GRUB_DISTRIBUTOR=`lsb_release -i -s 2> /dev/null || echo Debian`
GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt"
#GRUB_CMDLINE_LINUX_DEFAULT="quiet"
GRUB_CMDLINE_LINUX="consoleblank=300"
# If your computer has multiple operating systems installed, then you
# probably want to run os-prober. However, if your computer is a host
# for guest OSes installed via LVM or raw disk devices, running
# os-prober can cause damage to those guest OSes as it mounts
# filesystems to look for things.
#GRUB_DISABLE_OS_PROBER=false
# Uncomment to enable BadRAM filtering, modify to suit your needs
# This works with Linux (no patch required) and with any kernel that obtains
# the memory map information from GRUB (GNU Mach, kernel of FreeBSD ...)
#GRUB_BADRAM="0x01234567,0xfefefefe,0x89abcdef,0xefefefef"
# Uncomment to disable graphical terminal
#GRUB_TERMINAL=console
# The resolution used on graphical terminal
# note that you can use only modes which your graphic card supports via VBE
# you can see them in real GRUB with the command `vbeinfo'
#GRUB_GFXMODE=640x480
# Uncomment if you don't want GRUB to pass "root=UUID=xxx" parameter to Linux
#GRUB_DISABLE_LINUX_UUID=true
# Uncomment to disable generation of recovery mode menu entries
#GRUB_DISABLE_RECOVERY="true"
# Uncomment to get a beep at grub start
#GRUB_INIT_TUNE="480 440 1"
```

After a reboot, I can see that `/proc/cmdline` contains my IOMMU flags from GRUB and that the iGPU sits alone in its IOMMU group (group 0), so there are no conflicting groupings.
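The `/proc/cmdline` part of this check can also be scripted. The following is a minimal sketch, assuming bash; `has_cmdline_flags` is a hypothetical helper name, not a Proxmox tool. It matches whole whitespace-separated tokens only, so a combined value such as `intel_iommu=on,igfx_off` would be reported as missing.

```bash
#!/usr/bin/env bash
# Sketch: check that every required kernel parameter appears as an exact,
# whitespace-separated token in a kernel command line string.
# has_cmdline_flags is a hypothetical helper name.
has_cmdline_flags() {
  local cmdline="$1"
  shift
  local -a tokens
  local flag token found missing=0
  # Split the command line into tokens without glob expansion.
  read -r -a tokens <<< "$cmdline"
  for flag in "$@"; do
    found=0
    for token in "${tokens[@]}"; do
      if [[ "$token" == "$flag" ]]; then
        found=1
        break
      fi
    done
    if (( found == 0 )); then
      echo "missing: $flag"
      missing=1
    fi
  done
  # Exit status 0 only if every requested flag was found.
  return "$missing"
}

# Usage on the host (reads the live command line):
#   has_cmdline_flags "$(cat /proc/cmdline)" intel_iommu=on iommu=pt && echo "IOMMU flags present"
```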

```
root@dell-proxmox:~# cat /proc/cmdline; for d in /sys/kernel/iommu_groups/*/devices/*; do n=${d#*/iommu_groups/*}; n=${n%%/*}; printf 'IOMMU group %s ' "$n"; lspci -nns "${d##*/}"; done
BOOT_IMAGE=/vmlinuz-6.5.13-3-pve root=ZFS=/ROOT/pve-1 ro consoleblank=300 root=ZFS=rpool/ROOT/pve-1 boot=zfs quiet intel_iommu=on iommu=pt
IOMMU group 0 00:02.0 VGA compatible controller [0300]: Intel Corporation HD Graphics 620 [8086:5916] (rev 02)
IOMMU group 10 01:00.0 Network controller [0280]: Intel Corporation Wireless 3165 [8086:3165] (rev 79)
IOMMU group 11 lspci: -s: Invalid slot number
IOMMU group 12 lspci: -s: Invalid slot number
IOMMU group 13 lspci: -s: Invalid slot number
IOMMU group 14 lspci: -s: Invalid slot number
IOMMU group 1 00:00.0 Host bridge [0600]: Intel Corporation Xeon E3-1200 v6/7th Gen Core Processor Host Bridge/DRAM Registers [8086:5904] (rev 02)
IOMMU group 2 00:04.0 Signal processing controller [1180]: Intel Corporation Xeon E3-1200 v5/E3-1500 v5/6th Gen Core Processor Thermal Subsystem [8086:1903] (rev 02)
IOMMU group 3 00:13.0 Non-VGA unclassified device [0000]: Intel Corporation Sunrise Point-LP Integrated Sensor Hub [8086:9d35] (rev 21)
IOMMU group 4 00:14.0 USB controller [0c03]: Intel Corporation Sunrise Point-LP USB 3.0 xHCI Controller [8086:9d2f] (rev 21)
IOMMU group 4 00:14.2 Signal processing controller [1180]: Intel Corporation Sunrise Point-LP Thermal subsystem [8086:9d31] (rev 21)
IOMMU group 5 00:15.0 Signal processing controller [1180]: Intel Corporation Sunrise Point-LP Serial IO I2C Controller #0 [8086:9d60] (rev 21)
IOMMU group 5 00:15.1 Signal processing controller [1180]: Intel Corporation Sunrise Point-LP Serial IO I2C Controller #1 [8086:9d61] (rev 21)
IOMMU group 6 00:16.0 Communication controller [0780]: Intel Corporation Sunrise Point-LP CSME HECI #1 [8086:9d3a] (rev 21)
IOMMU group 7 00:17.0 SATA controller [0106]: Intel Corporation Sunrise Point-LP SATA Controller [AHCI mode] [8086:9d03] (rev 21)
IOMMU group 8 00:1c.0 PCI bridge [0604]: Intel Corporation Sunrise Point-LP PCI Express Root Port #5 [8086:9d14] (rev f1)
IOMMU group 9 00:1f.0 ISA bridge [0601]: Intel Corporation Sunrise Point-LP LPC Controller [8086:9d58] (rev 21)
IOMMU group 9 00:1f.2 Memory controller [0580]: Intel Corporation Sunrise Point-LP PMC [8086:9d21] (rev 21)
IOMMU group 9 00:1f.3 Audio device [0403]: Intel Corporation Sunrise Point-LP HD Audio [8086:9d71] (rev 21)
IOMMU group 9 00:1f.4 SMBus [0c05]: Intel Corporation Sunrise Point-LP SMBus [8086:9d23] (rev 21)
```

I've enabled the VFIO modules and ran `update-initramfs -u -k all`.
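Whether the `/etc/modules` entries actually ended up loaded can be cross-checked against `/proc/modules`. A minimal sketch, assuming bash and awk; `missing_modules` is a hypothetical helper name. Modules compiled into the kernel never appear in `/proc/modules`, so a name it prints is a prompt to look further rather than proof that the module is absent.

```bash
#!/usr/bin/env bash
# Sketch: print entries from an /etc/modules-style file that do not appear in
# a /proc/modules-style listing. missing_modules is a hypothetical helper name.
# Caveat: built-in modules never appear in /proc/modules.
missing_modules() {
  local wanted_file="$1" loaded_file="${2:-/proc/modules}"
  local line name
  while IFS= read -r line || [[ -n "$line" ]]; do
    # Drop comments, then keep only the first field (the module name).
    line="${line%%#*}"
    read -r name _ <<< "$line"
    [[ -z "$name" ]] && continue
    # /proc/modules uses underscores, so normalise hyphens before comparing.
    name="${name//-/_}"
    if ! awk -v m="$name" '$1 == m { found = 1 } END { exit !found }' "$loaded_file"; then
      echo "$name"
    fi
  done < "$wanted_file"
}

# Usage on the host:
#   missing_modules /etc/modules
```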

```bash
root@dell-proxmox:~# cat /etc/modules
# /etc/modules: kernel modules to load at boot time.
#
# This file contains the names of kernel modules that should be loaded
# at boot time, one per line. Lines beginning with "#" are ignored.
# Parameters can be specified after the module name.
vfio
vfio_iommu_type1
vfio_pci
vfio_virqfd
```

To verify that the modules are loaded, I ran `dmesg | grep -i vfio`.
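Besides `dmesg`, the driver currently bound to the iGPU can be read from sysfs; this is the same information `lspci -nnk -s 00:02.0` reports as "Kernel driver in use". A minimal sketch, assuming bash; `bound_driver` is a hypothetical helper name, and its optional second argument (an alternative sysfs root) exists only to make it testable.

```bash
#!/usr/bin/env bash
# Sketch: print the kernel driver bound to a PCI device by resolving the
# sysfs "driver" symlink, or "none" if no driver is bound.
# bound_driver is a hypothetical helper name.
bound_driver() {
  local addr="$1" sysfs="${2:-/sys}"
  local link="$sysfs/bus/pci/devices/$addr/driver"
  if [[ -L "$link" ]]; then
    # The symlink points at .../drivers/<name>; keep only <name>.
    basename "$(readlink "$link")"
  else
    echo "none"
  fi
}

# Usage on the host (vfio-pci is expected while the iGPU is isolated):
#   bound_driver 0000:00:02.0
```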

```bash
root@dell-proxmox:~# dmesg | grep -i vfio
[ 13.730131] VFIO - User Level meta-driver version: 0.3
[ 14.210224] vfio-pci 0000:00:02.0: vgaarb: deactivate vga console
[ 14.211048] vfio-pci 0000:00:02.0: vgaarb: changed VGA decodes: olddecodes=io+mem,decodes=io+mem:owns=io+mem
[ 14.211269] vfio_pci: add [8086:5916[ffffffff:ffffffff]] class 0x000000/00000000
[ 50.057812] vfio-pci 0000:00:02.0: ready 1023ms after FLR
[ 52.605734] vaddr_get_pfns+0x78/0x290 [vfio_iommu_type1]
[ 52.605741] vfio_pin_pages_remote+0x370/0x4e0 [vfio_iommu_type1]
[ 52.605750] vfio_iommu_type1_ioctl+0x10c7/0x1af0 [vfio_iommu_type1]
[ 52.605760] vfio_fops_unl_ioctl+0x68/0x380 [vfio]
[ 604.126009] vaddr_get_pfns+0x78/0x290 [vfio_iommu_type1]
[ 604.126018] vfio_pin_pages_remote+0x370/0x4e0 [vfio_iommu_type1]
[ 604.126029] vfio_iommu_type1_ioctl+0x10c7/0x1af0 [vfio_iommu_type1]
[ 604.126040] vfio_fops_unl_ioctl+0x68/0x380 [vfio]
[ 617.317921] vaddr_get_pfns+0x78/0x290 [vfio_iommu_type1]
[ 617.317930] vfio_pin_pages_remote+0x370/0x4e0 [vfio_iommu_type1]
[ 617.317941] vfio_iommu_type1_ioctl+0x10c7/0x1af0 [vfio_iommu_type1]
[ 617.317956] vfio_fops_unl_ioctl+0x68/0x380 [vfio]
[ 636.497782] vaddr_get_pfns+0x78/0x290 [vfio_iommu_type1]
[ 636.497791] vfio_pin_pages_remote+0x370/0x4e0 [vfio_iommu_type1]
[ 636.497802] vfio_iommu_type1_ioctl+0x10c7/0x1af0 [vfio_iommu_type1]
[ 636.497813] vfio_fops_unl_ioctl+0x68/0x380 [vfio]
[ 648.065668] vaddr_get_pfns+0x78/0x290 [vfio_iommu_type1]
[ 648.065678] vfio_pin_pages_remote+0x370/0x4e0 [vfio_iommu_type1]
[ 648.065689] vfio_iommu_type1_ioctl+0x10c7/0x1af0 [vfio_iommu_type1]
[ 648.065700] vfio_fops_unl_ioctl+0x68/0x380 [vfio]
[ 672.413453] vaddr_get_pfns+0x78/0x290 [vfio_iommu_type1]
[ 672.413460] vfio_pin_pages_remote+0x370/0x4e0 [vfio_iommu_type1]
[ 672.413469] vfio_iommu_type1_ioctl+0x10c7/0x1af0 [vfio_iommu_type1]
[ 672.413477] vfio_fops_unl_ioctl+0x68/0x380 [vfio]
```

Finally, I isolated the iGPU from the host and blacklisted the i915 driver.
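The isolation step relies on the ID in `vfio.conf` matching the `[8086:5916]` that `lspci -nn` reports for the iGPU. A minimal sketch of that comparison, assuming bash and awk; `ids_cover_device` is a hypothetical helper name, and it only compares plain `vendor:device` entries exactly (longer forms with subvendor or class fields would not match).

```bash
#!/usr/bin/env bash
# Sketch: check that a vendor:device ID appears in the ids= option of
# "options vfio-pci ..." lines in a modprobe.d file.
# ids_cover_device is a hypothetical helper name.
ids_cover_device() {
  local conf="$1" want="$2"
  local ids id
  # Extract everything after "ids=" on matching options lines.
  ids=$(awk '$1 == "options" && ($2 == "vfio-pci" || $2 == "vfio_pci") {
               for (i = 3; i <= NF; i++) if ($i ~ /^ids=/) print substr($i, 5)
             }' "$conf")
  # ids= is a comma-separated list; compare each entry exactly.
  for id in ${ids//,/ }; do
    [[ "$id" == "$want" ]] && return 0
  done
  return 1
}

# Usage on the host:
#   ids_cover_device /etc/modprobe.d/vfio.conf 8086:5916 && echo "iGPU ID is listed for vfio-pci"
```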

```bash
root@dell-proxmox:~# cat /etc/modprobe.d/vfio.conf
options vfio-pci ids=8086:5916 disable_vga=1
root@dell-proxmox:~# cat /etc/modprobe.d/blacklist.conf
blacklist i915
```

I first created the VM without the GPU to run the cloud-init install of Ubuntu Noble, and confirmed that I could SSH in and watch the install on the console. After shutting down the VM, I added the GPU and restarted it. The VM starts without errors, but it does not respond to ping or SSH, and the console shows nothing other than `starting serial terminal on interface serial0`.

The following is my VM configuration:

```
root@dell-proxmox:~# qm config 107
agent: 1,fstrim_cloned_disks=1
balloon: 0
boot: c
bootdisk: scsi0
cipassword: **REDACTED**
ciuser: **REDACTED**
cores: 4
cpu: host
hostpci0: 0000:00:02
ide2: local-zfs:vm-107-cloudinit,media=cdrom,size=4M
ipconfig0: ip=10.0.1.82/24,gw=10.0.1.1
machine: q35
memory: 4096
meta: creation-qemu=8.1.5,ctime=1712184918
name: k3s-worker-02
net0: virtio=BC:24:11:93:A9:DE,bridge=vmbr0
numa: 0
onboot: 1
scsi0: local-zfs:vm-107-disk-0,size=10G
scsihw: virtio-scsi-pci
serial0: socket
smbios1: uuid=e610d5f0-de97-49a2-b319-7492ad5aac08
sockets: 1
sshkeys: **REDACTED**
vga: serial0
vmgenid: 521d8757-8938-4504-ba6c-0de310dbce78
```

Am I doing something wrong, or am I missing a step in setting up GPU passthrough? Any help would be appreciated. Thanks in advance.