I am now trying to restore the VM from a backup because migrating it didn't work, and the restore fails for the same reason.

I really don't know how to transfer the VM to another Proxmox server.

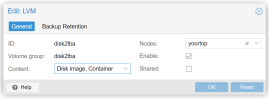

I cannot have the same disks on both servers just to migrate. How do I solve this problem when the disk (storage) does not have the same name on the second server?

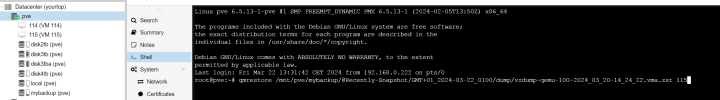

    root@pve:~# qmrestore /mnt/pve/mybackup/@Recently-Snapshot/GMT+01_2024-03-21_0100/dump/vzdump-qemu-100-2024_03_20-14_24_22.vma.zst 108
    restore vma archive: zstd -q -d -c /mnt/pve/mybackup/@Recently-Snapshot/GMT+01_2024-03-21_0100/dump/vzdump-qemu-100-2024_03_20-14_24_22.vma.zst | vma extract -v -r /var/tmp/vzdumptmp493410.fifo - /var/tmp/vzdumptmp493410
    CFG: size: 488 name: qemu-server.conf
    DEV: dev_id=1 size: 128849018880 devname: drive-scsi0
    DEV: dev_id=2 size: 2147483648000 devname: drive-scsi1
    CTIME: Wed Mar 20 14:24:23 2024
    no lock found trying to remove 'create' lock
    error before or during data restore, some or all disks were not completely restored. VM 108 state is NOT cleaned up.

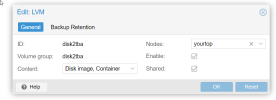

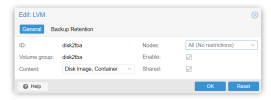

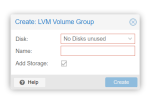

    command 'set -o pipefail && zstd -q -d -c /mnt/pve/mybackup/@Recently-Snapshot/GMT+01_2024-03-21_0100/dump/vzdump-qemu-100-2024_03_20-14_24_22.vma.zst | vma extract -v -r /var/tmp/vzdumptmp493410.fifo - /var/tmp/vzdumptmp493410' failed: no such volume group 'disk2tba'
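One thing that might apply here (not yet confirmed on my setup): `qmrestore` has a `--storage` option that sets the default target storage for the restored disks. If that works as expected, the original volume group `disk2tba` would not need to exist on the new node. Below is a minimal sketch, assuming the target node has a storage with the hypothetical ID `local-lvm`. The real storage IDs can be listed with `pvesm status`. By default the script only prints the command. Setting `DRY_RUN=0` makes it run the command.

```shell
#!/usr/bin/env bash
# Sketch: restore the vzdump archive onto a storage that exists on THIS node,
# instead of the original 'disk2tba' volume group.
set -euo pipefail

ARCHIVE="/mnt/pve/mybackup/@Recently-Snapshot/GMT+01_2024-03-21_0100/dump/vzdump-qemu-100-2024_03_20-14_24_22.vma.zst"
VMID=108
# Hypothetical storage ID: replace with one listed by `pvesm status` on the target node.
TARGET_STORAGE="local-lvm"

# --storage sets the default storage the restored disks are written to.
cmd=(qmrestore "$ARCHIVE" "$VMID" --storage "$TARGET_STORAGE")

# Print the command by default; set DRY_RUN=0 to actually run it on the Proxmox node.
if [[ "${DRY_RUN:-1}" == "1" ]]; then
  printf '%s\n' "${cmd[*]}"
else
  "${cmd[@]}"
fi
```

The log above says "VM 108 state is NOT cleaned up". A leftover VM 108 may therefore have to be removed first (for example with `qm destroy 108`), or the restore can target a different, unused VMID.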
