I have Proxmox VE 7.4 on 3 physical servers.

After updating Ceph from 16.2.15 to 17.2.7, the RBD storage is inactive but still enabled. Ceph health is OK.

All VMs are still running, but I can't migrate them or create new ones, and if I shut a VM down, it can't be started again.

For example when migrating or taking snapshopt I get these errors:

When I enter the command "pve7to8 --full | more", I get the following error

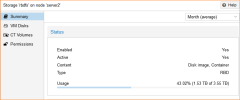

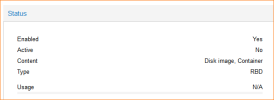

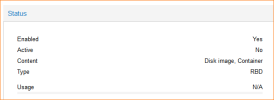

This is summary of RBD storage

How can I activate RBD storage?

After update Cepth from 16.2.15 to 17.2.7 the RBD store is inactive but enabled. Cepth healt is OK.

All VMs are working, but I can't migrate them, create new ones and if I turn them off, they can't be turned on.

For example when migrating or taking snapshopt I get these errors:

Code:

TASK ERROR: rbd error: rbd: error opening pool 'device_health_metrics': (2) No such file or directory

Code:

rbd snapshot 'vm-200-disk-0' error: rbd: error opening pool 'device_health_metrics': (2) No such file or directoryWhen I enter the command "pve7to8 --full | more", I get the following error

Code:

could not get usage stats for pool 'device_health_metrics' mounting container failedThis is summary of RBD storage

How can I activate the RBD storage?
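All three errors name the pool `device_health_metrics`, so one quick check is which RBD storage entries in `/etc/pve/storage.cfg` point at that pool. Below is a minimal Python sketch, assuming the usual storage.cfg layout (an unindented `type: id` header followed by indented `key value` option lines). The helpers `parse_storage_cfg`, `rbd_pools` and `report` are hypothetical names for illustration, not part of any Proxmox tool.

```python
from typing import Dict, List, Optional


def parse_storage_cfg(text: str) -> List[Dict[str, str]]:
    """Split storage.cfg-style text into one dict per storage section.

    Assumes each section starts with an unindented "type: id" header,
    followed by indented "key value" option lines.
    """
    sections: List[Dict[str, str]] = []
    current: Optional[Dict[str, str]] = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if not raw[0].isspace():
            # Section header, e.g. "rbd: ceph-vm"
            stype, _, sid = line.partition(":")
            current = {"type": stype.strip(), "id": sid.strip()}
            sections.append(current)
        elif current is not None:
            # Option line, e.g. "pool device_health_metrics"; flags may have no value
            parts = line.split(None, 1)
            current[parts[0]] = parts[1] if len(parts) > 1 else ""
    return sections


def rbd_pools(text: str) -> Dict[str, Optional[str]]:
    """Map each RBD storage ID to the pool it names (None if no 'pool' line)."""
    return {
        s["id"]: s.get("pool")
        for s in parse_storage_cfg(text)
        if s["type"] == "rbd"
    }


def report(path: str = "/etc/pve/storage.cfg") -> None:
    """Print every RBD storage and flag the pool named in the error messages."""
    with open(path, encoding="utf-8") as fh:
        for storage_id, pool in rbd_pools(fh.read()).items():
            note = "  <-- pool from the error messages" if pool == "device_health_metrics" else ""
            print(f"{storage_id}: pool={pool}{note}")
```

Calling `report()` on one of the nodes prints each RBD storage ID with the pool it references; comparing that list with the output of `ceph osd pool ls` shows whether a storage entry still points at a pool that no longer exists.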