Hi all,


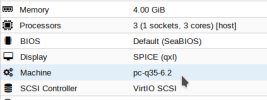

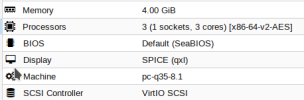

Since October 10th, my Proxmox server has been randomly crashing with a kernel panic about once a day.

Initially I looked for hardware issues, since I thought I had not done any updates prior to the first crash. However, I recently realized I had forgotten that, according to /var/log/apt, I did some upgrades two days before the first crash.
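In case anyone wants to repeat that check, here is a minimal Python sketch of how the upgrades in a date window could be pulled out of /var/log/apt/history.log. It assumes the usual layout of that file (blank-line separated blocks with `Start-Date:` and `Upgrade:` fields). The function name `upgrades_between` and the sample text are made up for illustration; the timestamps and version numbers in the sample are placeholders, not my real log.

```python
import re
from datetime import datetime

# Illustrative sample only: times and versions are placeholders, not real log data.
SAMPLE = """
Start-Date: 2023-09-01  08:00:00
Commandline: apt upgrade
Upgrade: examplepkg:amd64 (1.0, 1.1)
End-Date: 2023-09-01  08:00:05

Start-Date: 2023-10-08  09:15:02
Commandline: apt upgrade
Upgrade: libnftables1:amd64 (1.0, 1.1), nftables:amd64 (1.0, 1.1)
End-Date: 2023-10-08  09:15:30
"""

def upgrades_between(history_text, start, end):
    """Return (timestamp, package) pairs for Upgrade entries within [start, end]."""
    results = []
    # apt separates transactions in history.log with blank lines
    for block in history_text.strip().split("\n\n"):
        fields = {}
        for line in block.splitlines():
            key, sep, value = line.partition(": ")
            if sep:
                fields[key] = value.strip()
        if "Start-Date" not in fields or "Upgrade" not in fields:
            continue
        # Start-Date uses two spaces between date and time; normalise to one
        stamp = " ".join(fields["Start-Date"].split())
        when = datetime.strptime(stamp, "%Y-%m-%d %H:%M:%S")
        if not (start <= when <= end):
            continue
        # each entry looks like "name:arch (old, new)"; drop the version part
        without_versions = re.sub(r"\s*\([^)]*\)", "", fields["Upgrade"])
        for pkg in without_versions.split(","):
            results.append((when, pkg.strip()))
    return results

if __name__ == "__main__":
    window = (datetime(2023, 10, 8), datetime(2023, 10, 10))
    for when, pkg in upgrades_between(SAMPLE, *window):
        print(when, pkg)
```

On a real system the text would come from reading /var/log/apt/history.log (older entries are rotated into compressed history.log.N.gz files, which this sketch does not handle).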

I enabled kdump-tools to collect dmesg and reboot the server after a kernel panic. Occasionally it just reboots, according to syslog, without a kernel panic. This is the syslog from the most recent reboot this morning:

Code:

2023-11-24T08:52:56.268970+01:00 proxmox1 pveproxy[2505222]: worker exit

2023-11-24T08:52:56.296703+01:00 proxmox1 pveproxy[2707]: worker 2505222 finished

2023-11-24T08:52:56.296808+01:00 proxmox1 pveproxy[2707]: starting 1 worker(s)

2023-11-24T08:52:56.299943+01:00 proxmox1 pveproxy[2707]: worker 2528632 started

2023-11-24T08:55:03.029844+01:00 proxmox1 systemd-modules-load[424]: Inserted module 'vhost_net'

2023-11-24T08:55:03.029939+01:00 proxmox1 kernel: [ 0.000000] Linux version 6.5.11-4-pve (fgruenbichler@yuna) (gcc (Debian 12.2.0-14) 12.2.0, GNU ld (GNU Binutils for Debian) 2.40) #1 SMP PREEMPT_DYNAMIC PMX 6.5.11-4 (2023-11-20T10:19Z) ()

2023-11-24T08:55:03.029944+01:00 proxmox1 kernel: [ 0.000000] Command line: BOOT_IMAGE=/boot/vmlinuz-6.5.11-4-pve root=/dev/mapper/pve-root ro quiet intel_idle.max_cstate=1 processor.max_cstate=1 crashkernel=384M-:256M

2023-11-24T08:55:03.029945+01:00 proxmox1 kernel: [ 0.000000] KERNEL supported cpus:

2023-11-24T08:55:03.029945+01:00 proxmox1 kernel: [ 0.000000] Intel GenuineIntel

2023-11-24T08:55:03.029946+01:00 proxmox1 kernel: [ 0.000000] AMD AuthenticAMD

2023-11-24T08:55:03.029946+01:00 proxmox1 kernel: [ 0.000000] Hygon HygonGenuine

2023-11-24T08:55:03.029947+01:00 proxmox1 kernel: [ 0.000000] Centaur CentaurHauls

2023-11-24T08:55:03.029951+01:00 proxmox1 kernel: [ 0.000000] zhaoxin Shanghai

2023-11-24T08:55:03.029944+01:00 proxmox1 dmeventd[443]: dmeventd ready for processing.

2023-11-24T08:55:03.029951+01:00 proxmox1 kernel: [ 0.000000] BIOS-provided physical RAM map:

2023-11-24T08:55:03.029952+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x0000000000000000-0x000000000009ffff] usable

2023-11-24T08:55:03.029954+01:00 proxmox1 systemd[1]: Starting systemd-journal-flush.service - Flush Journal to Persistent Storage...

2023-11-24T08:55:03.029958+01:00 proxmox1 lvm[415]: 5 logical volume(s) in volume group "pve" monitored

2023-11-24T08:55:03.029953+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000000a0000-0x00000000000fffff] reserved

2023-11-24T08:55:03.029967+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x0000000000100000-0x0000000009bfefff] usable

2023-11-24T08:55:03.029967+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x0000000009bff000-0x0000000009ffffff] reserved

2023-11-24T08:55:03.029965+01:00 proxmox1 systemd-udevd[444]: Using default interface naming scheme 'v252'.

2023-11-24T08:55:03.029970+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x000000000a000000-0x000000000a1fffff] usable

2023-11-24T08:55:03.029970+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x000000000a200000-0x000000000a20efff] ACPI NVS

2023-11-24T08:55:03.029971+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x000000000a20f000-0x000000000affffff] usable

2023-11-24T08:55:03.029969+01:00 proxmox1 dmeventd[443]: Monitoring thin pool pve-data-tpool.

2023-11-24T08:55:03.029971+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x000000000b000000-0x000000000b01ffff] reserved

2023-11-24T08:55:03.029972+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x000000000b020000-0x0000000098f90fff] usable

2023-11-24T08:55:03.029972+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x0000000098f91000-0x000000009a6d0fff] reserved

2023-11-24T08:55:03.029973+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x000000009a6d1000-0x000000009a70afff] ACPI data

2023-11-24T08:55:03.029975+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x000000009a70b000-0x000000009c1bdfff] ACPI NVS

2023-11-24T08:55:03.029973+01:00 proxmox1 systemd[1]: Started systemd-udevd.service - Rule-based Manager for Device Events and Files.

2023-11-24T08:55:03.029976+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x000000009c1be000-0x000000009cf76fff] reserved

2023-11-24T08:55:03.029976+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x000000009cf77000-0x000000009cffefff] type 20

2023-11-24T08:55:03.029977+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x000000009cfff000-0x000000009dffffff] usable

2023-11-24T08:55:03.029977+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x000000009e000000-0x00000000bfffffff] reserved

2023-11-24T08:55:03.029977+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000f0000000-0x00000000f7ffffff] reserved

2023-11-24T08:55:03.029980+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000fd200000-0x00000000fd2fffff] reserved

2023-11-24T08:55:03.029981+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000fd600000-0x00000000fd6fffff] reserved

2023-11-24T08:55:03.029982+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000fea00000-0x00000000fea0ffff] reserved

2023-11-24T08:55:03.029982+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000feb80000-0x00000000fec01fff] reserved

2023-11-24T08:55:03.029982+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000fec10000-0x00000000fec10fff] reserved

2023-11-24T08:55:03.029983+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000fec30000-0x00000000fec30fff] reserved

2023-11-24T08:55:03.029983+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000fed00000-0x00000000fed00fff] reserved

2023-11-24T08:55:03.029978+01:00 proxmox1 udevadm[460]: systemd-udev-settle.service is deprecated. Please fix nut-driver@apc.service, zfs-import-scan.service, zfs-import-cache.service not to pull it in.

2023-11-24T08:55:03.029986+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000fed40000-0x00000000fed44fff] reserved

2023-11-24T08:55:03.029986+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000fed80000-0x00000000fed8ffff] reserved

2023-11-24T08:55:03.029987+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000fedc2000-0x00000000fedcffff] reserved

2023-11-24T08:55:03.029987+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000fedd4000-0x00000000fedd5fff] reserved

2023-11-24T08:55:03.029986+01:00 proxmox1 systemd[1]: Finished systemd-udev-trigger.service - Coldplug All udev Devices.

2023-11-24T08:55:03.029988+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x00000000ff000000-0x00000000ffffffff] reserved

2023-11-24T08:55:03.029988+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x0000000100000000-0x000000183e2fffff] usable

2023-11-24T08:55:03.029990+01:00 proxmox1 kernel: [ 0.000000] BIOS-e820: [mem 0x000000183e300000-0x000000183fffffff] reserved

2023-11-24T08:55:03.029991+01:00 proxmox1 kernel: [ 0.000000] NX (Execute Disable) protection: active

2023-11-24T08:55:03.029991+01:00 proxmox1 kernel: [ 0.000000] efi: EFI v2.7 by American Megatrends

2023-11-24T08:55:03.029989+01:00 proxmox1 systemd[1]: Starting ifupdown2-pre.service - Helper to synchronize boot up for ifupdown...

2023-11-24T08:55:03.029992+01:00 proxmox1 kernel: [ 0.000000] efi: ACPI=0x9c1a7000 ACPI 2.0=0x9c1a7014 TPMFinalLog=0x9b171000 SMBIOS=0x9ce25000 SMBIOS 3.0=0x9ce24000 MEMATTR=0x95a0c018 ESRT=0x95a81698

2023-11-24T08:55:03.029992+01:00 proxmox1 kernel: [ 0.000000] efi: Remove mem306: MMIO range=[0xf0000000-0xf7ffffff] (128MB) from e820 map

2023-11-24T08:55:03.029993+01:00 proxmox1 kernel: [ 0.000000] e820: remove [mem 0xf0000000-0xf7ffffff] reserved

2023-11-24T08:55:03.029995+01:00 proxmox1 kernel: [ 0.000000] efi: Remove mem307: MMIO range=[0xfd200000-0xfd2fffff] (1MB) from e820 map

2023-11-24T08:55:03.029994+01:00 proxmox1 systemd[1]: Starting systemd-udev-settle.service - Wait for udev To Complete Device Initialization...

2023-11-24T08:55:03.029996+01:00 proxmox1 kernel: [ 0.000000] e820: remove [mem 0xfd200000-0xfd2fffff] reserved

2023-11-24T08:55:03.029996+01:00 proxmox1 kernel: [ 0.000000] efi: Remove mem308: MMIO range=[0xfd600000-0xfd6fffff] (1MB) from e820 map

2023-11-24T08:55:03.029997+01:00 proxmox1 kernel: [ 0.000000] e820: remove [mem 0xfd600000-0xfd6fffff] reserved

2023-11-24T08:55:03.029997+01:00 proxmox1 kernel: [ 0.000000] efi: Not removing mem309: MMIO range=[0xfea00000-0xfea0ffff] (64KB) from e820 map

2023-11-24T08:55:03.029998+01:00 proxmox1 kernel: [ 0.000000] efi: Remove mem310: MMIO range=[0xfeb80000-0xfec01fff] (0MB) from e820 map

2023-11-24T08:55:03.029998+01:00 proxmox1 kernel: [ 0.000000] e820: remove [mem 0xfeb80000-0xfec01fff] reserved

2023-11-24T08:55:03.029998+01:00 proxmox1 systemd[1]: Finished systemd-journal-flush.service - Flush Journal to Persistent Storage.

2023-11-24T08:55:03.030000+01:00 proxmox1 kernel: [ 0.000000] efi: Not removing mem311: MMIO range=[0xfec10000-0xfec10fff] (4KB) from e820 map

2023-11-24T08:55:03.030001+01:00 proxmox1 kernel: [ 0.000000] efi: Not removing mem312: MMIO range=[0xfec30000-0xfec30fff] (4KB) from e820 map

2023-11-24T08:55:03.030001+01:00 proxmox1 kernel: [ 0.000000] efi: Not removing mem313: MMIO range=[0xfed00000-0xfed00fff] (4KB) from e820 map

2023-11-24T08:55:03.030002+01:00 proxmox1 kernel: [ 0.000000] efi: Not removing mem314: MMIO range=[0xfed40000-0xfed44fff] (20KB) from e820 map

2023-11-24T08:55:03.030002+01:00 proxmox1 kernel: [ 0.000000] efi: Not removing mem315: MMIO range=[0xfed80000-0xfed8ffff] (64KB) from e820 map

2023-11-24T08:55:03.030002+01:00 proxmox1 kernel: [ 0.000000] efi: Not removing mem316: MMIO range=[0xfedc2000-0xfedcffff] (56KB) from e820 map

2023-11-24T08:55:03.030005+01:00 proxmox1 kernel: [ 0.000000] efi: Not removing mem317: MMIO range=[0xfedd4000-0xfedd5fff] (8KB) from e820 map

2023-11-24T08:55:03.030005+01:00 proxmox1 kernel: [ 0.000000] efi: Remove mem318: MMIO range=[0xff000000-0xffffffff] (16MB) from e820 map

2023-11-24T08:55:03.030006+01:00 proxmox1 kernel: [ 0.000000] e820: remove [mem 0xff000000-0xffffffff] reserved

2023-11-24T08:55:03.030001+01:00 proxmox1 systemd[1]: Found device dev-pve-swap.device - /dev/pve/swap.

2023-11-24T08:55:03.030006+01:00 proxmox1 kernel: [ 0.000000] secureboot: Secure boot could not be determined (mode 0)

2023-11-24T08:55:03.030006+01:00 proxmox1 kernel: [ 0.000000] SMBIOS 3.3.0 present.

2023-11-24T08:55:03.030007+01:00 proxmox1 kernel: [ 0.000000] DMI: To Be Filled By O.E.M. B550 Phantom Gaming 4/B550 Phantom Gaming 4, BIOS P3.20 09/27/2023

2023-11-24T08:55:03.030008+01:00 proxmox1 systemd[1]: Activating swap dev-pve-swap.swap - /dev/pve/swap...

2023-11-24T08:55:03.030013+01:00 proxmox1 kernel: [ 0.000000] tsc: Fast TSC calibration using PIT

2023-11-24T08:55:03.030014+01:00 proxmox1 kernel: [ 0.000000] tsc: Detected 3693.185 MHz processor

2023-11-24T08:55:03.030012+01:00 proxmox1 systemd[1]: Activated swap dev-pve-swap.swap - /dev/pve/swap.

2023-11-24T08:55:03.030014+01:00 proxmox1 kernel: [ 0.000643] e820: update [mem 0x00000000-0x00000fff] usable ==> reserved

2023-11-24T08:55:03.030016+01:00 proxmox1 kernel: [ 0.000645] e820: remove [mem 0x000a0000-0x000fffff] usable

2023-11-24T08:55:03.030017+01:00 proxmox1 kernel: [ 0.000654] last_pfn = 0x183e300 max_arch_pfn = 0x400000000

2023-11-24T08:55:03.030017+01:00 proxmox1 kernel: [ 0.000660] MTRR map: 5 entries (3 fixed + 2 variable; max 20), built from 9 variable MTRRs

2023-11-24T08:55:03.030017+01:00 proxmox1 kernel: [ 0.000662] x86/PAT: Configuration [0-7]: WB WC UC- UC WB WP UC- WT

2023-11-24T08:55:03.030017+01:00 proxmox1 systemd[1]: Reached target swap.target - Swaps.

2023-11-24T08:55:03.030020+01:00 proxmox1 kernel: [ 0.000850] e820: update [mem 0xc0000000-0xffffffff] usable ==> reserved

2023-11-24T08:55:03.030020+01:00 proxmox1 kernel: [ 0.000858] last_pfn = 0x9e000 max_arch_pfn = 0x400000000

I attached the dmesg output from the last three kernel panics.

So far I have not been able to find a pattern, except that the output always includes:

Code:

BUG: kernel NULL pointer dereference, address: ...

#PF: supervisor read access in kernel mode

#PF: error_code(0x0000) - not-present page

Searching for this, I found this post (https://bbs.archlinux.org/viewtopic.php?id=288632&p=2) stating that disabling C-states helped, so I tried that yesterday, with no luck.
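To look for a pattern beyond the three oops lines quoted above, one option is to compare the faulting symbol across the saved dmesg files. The following is a rough Python sketch, assuming each dump contains the usual x86 `RIP: 0010:symbol+0xoff/0xlen` line after the NULL pointer report. The function name `faulting_symbols` and the sample text (including the symbol `example_func_a` and the address) are hypothetical and not taken from my dumps.

```python
import re
from collections import Counter

OOPS = "BUG: kernel NULL pointer dereference"
# In an x86 oops the faulting instruction is reported as "RIP: 0010:symbol+0xoff/0xlen"
RIP_RE = re.compile(r"RIP: [0-9a-f]{4}:(\S+)")

# Hypothetical sample; the symbol name and address are placeholders.
SAMPLE = """\
[ 100.000000] BUG: kernel NULL pointer dereference, address: 0000000000000008
[ 100.000001] #PF: supervisor read access in kernel mode
[ 100.000002] #PF: error_code(0x0000) - not-present page
[ 100.000003] RIP: 0010:example_func_a+0x1a/0x50
"""

def faulting_symbols(dmesg_text):
    """Return the symbol from the first RIP line after each NULL-deref report."""
    symbols = []
    waiting = False
    for line in dmesg_text.splitlines():
        if OOPS in line:
            waiting = True
        elif waiting:
            match = RIP_RE.search(line)
            if match:
                # keep only the function name, without "+offset/length"
                symbols.append(match.group(1).split("+")[0])
                waiting = False
    return symbols

if __name__ == "__main__":
    dumps = [SAMPLE]  # in practice: the text of each saved dmesg file
    counts = Counter(sym for text in dumps for sym in faulting_symbols(text))
    for symbol, n in counts.most_common():
        print(n, symbol)
```

If the same symbol (or the same module) shows up in every dump, that would point at one code path; completely different symbols each time would fit memory corruption or a hardware fault better.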

There is also a comment about nftables there, and my last upgrade prior to the first kernel panic did upgrade libnftables1 and nftables (cf. attached apt logs). That may be just a coincidence, though, as I found no signs of nftables in the kernel panic call traces. I'm no expert, so I'm probably missing something here.

Soon after the first crash I booted with a 5.15 kernel, with the same result.

What I have done on the hardware side to diagnose:

* replaced multiple old storage SSDs with a new NVMe

* replaced a HDD in a ZFS mirror that showed bad SMART values (but was not indicated as failing)

* cloned the boot SSD to a different SSD

* ran memory test

* upgraded BIOS

* disconnected all USB devices (e.g. UPS, RS232 adapter)

* moved the boot SSD, storage SSD and the ZFS HDD pool (4 HDDs) to a different server case with the same type of motherboard, CPU and PSU, using other SATA cables

Since none of that helped I ruled out hardware issues.

Does anyone have ideas about what I can do next?

pveversion -v

Code:

proxmox-ve: 8.1.0 (running kernel: 6.5.11-4-pve)

pve-manager: 8.1.3 (running version: 8.1.3/b46aac3b42da5d15)

proxmox-kernel-helper: 8.0.9

pve-kernel-5.15: 7.4-6

proxmox-kernel-6.5.11-4-pve-signed: 6.5.11-4

proxmox-kernel-6.5: 6.5.11-4

proxmox-kernel-6.2.16-19-pve: 6.2.16-19

proxmox-kernel-6.2: 6.2.16-19

proxmox-kernel-6.2.16-18-pve: 6.2.16-18

proxmox-kernel-6.2.16-15-pve: 6.2.16-15

proxmox-kernel-6.2.16-12-pve: 6.2.16-12

pve-kernel-5.15.116-1-pve: 5.15.116-1

pve-kernel-5.15.30-2-pve: 5.15.30-3

ceph-fuse: 16.2.11+ds-2

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx7

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-4

libknet1: 1.28-pve1

libproxmox-acme-perl: 1.5.0

libproxmox-backup-qemu0: 1.4.0

libproxmox-rs-perl: 0.3.1

libpve-access-control: 8.0.7

libpve-apiclient-perl: 3.3.1

libpve-common-perl: 8.1.0

libpve-guest-common-perl: 5.0.6

libpve-http-server-perl: 5.0.5

libpve-network-perl: 0.9.4

libpve-rs-perl: 0.8.7

libpve-storage-perl: 8.0.5

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 5.0.2-4

lxcfs: 5.0.3-pve3

novnc-pve: 1.4.0-3

proxmox-backup-client: 3.0.4-1

proxmox-backup-file-restore: 3.0.4-1

proxmox-kernel-helper: 8.0.9

proxmox-mail-forward: 0.2.2

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.2

proxmox-widget-toolkit: 4.1.3

pve-cluster: 8.0.5

pve-container: 5.0.8

pve-docs: 8.1.3

pve-edk2-firmware: 4.2023.08-1

pve-firewall: 5.0.3

pve-firmware: 3.9-1

pve-ha-manager: 4.0.3

pve-i18n: 3.1.2

pve-qemu-kvm: 8.1.2-4

pve-xtermjs: 5.3.0-2

qemu-server: 8.0.10

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.0-pve3
