Hello,

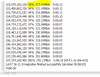

I first noticed this when using VZDump to back up a VM stored on a ZFS file system to an NFS share on a different host. At the moment I am trying to migrate a 1 TB virtual disk off a zpool that I want to destroy and rebuild. The NFS target is backed by a ZFS file system on a fast SSD-based zpool, and the storage traffic runs over IP over InfiniBand (IPoIB). After a while, the host stops posting telemetry to the PVE GUI and the copy job appears to stop completely. After some minutes of freaking out, the host "comes back" and the copy job continues. I took the screenshot above to illustrate this event.

The lack of blinking drive lights on both hosts tells me the zpools are idle during these pauses, and `zpool iostat` and similar tools confirm it. I also find errors in the syslog complaining that a volume or dataset cannot be found. A few of them are pasted below in case they help.

These two entries show first the "dataset does not exist" message and then a move-disk job being started 21 seconds later:

```
Jun 23 13:18:47 pve-1 pvedaemon[8838]: zfs error: cannot open 'pve2zpool2/zsync': dataset does not exist
Jun 23 13:19:08 pve-1 pvedaemon[14058]: <root@pam> move disk VM 101: move --disk ide0 --storage pve1raid10temp
```
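To put numbers on when things happen, it can help to compute the time between syslog entries rather than eyeballing them. Below is a minimal Python sketch, assuming classic syslog timestamps like the ones above. Because those timestamps carry no year, the `year` argument is a placeholder (an assumption) that only matters for gaps spanning New Year; 2000 is used because it is a leap year, so "Feb 29" still parses.

```python
from datetime import datetime


def parse_syslog_time(line: str, year: int = 2000) -> datetime:
    """Parse the leading 'Mon DD HH:MM:SS' stamp of a classic syslog line.

    Syslog omits the year, so a placeholder year is prepended (assumption);
    it cancels out when subtracting two stamps from the same year.
    """
    stamp = " ".join(line.split()[:3])
    return datetime.strptime(f"{year} {stamp}", "%Y %b %d %H:%M:%S")


def seconds_between(first: str, second: str) -> float:
    """Elapsed seconds from the first log line to the second."""
    return (parse_syslog_time(second) - parse_syslog_time(first)).total_seconds()


zfs_error = ("Jun 23 13:18:47 pve-1 pvedaemon[8838]: zfs error: cannot open "
             "'pve2zpool2/zsync': dataset does not exist")
move_disk = ("Jun 23 13:19:08 pve-1 pvedaemon[14058]: <root@pam> move disk VM 101: "
             "move --disk ide0 --storage pve1raid10temp")

print(seconds_between(zfs_error, move_disk))  # 21.0
```

For the two entries above this gives 21 seconds between the ZFS error and the start of the move-disk job.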

This move job was actually started while another, larger copy job was already in progress. It may be a coincidence, but as soon as the VM 101 job began, the running job for VM 100 seemed to crash, and that is where the first gap in telemetry occurred. The VM 101 job did complete, however; telemetry then came back and the VM 100 job resumed chugging along. After that it spontaneously stopped, started again, stopped again, and so on.

Another error that pops up from time to time in the syslog relates to the NFS share itself:

```
Jun 23 14:01:22 pve-1 kernel: nfs: server 10.0.1.70 not responding, still trying
Jun 23 14:01:22 pve-1 kernel: nfs: server 10.0.1.70 OK
```

This error is puzzling because the server apparently responds immediately after the error is posted: both lines carry the same timestamp. I would have expected the "not responding, still trying" message to appear when the problem starts, and the "OK" message only after some time had passed. Instead, it looks as though something makes PVE decide that NFS is down and simply stop trying, and then, after a varying amount of time, it tries again and everything works fine. So what is causing it to stop trying? I am not sure where to start looking. Any thoughts on what makes the server "go away"? Could some part of the clustering stack be getting bogged down, forcing the host to essentially stop what it is doing while it catches up?
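One place to start looking is the syslog itself: pair each "not responding" line with the next "OK" for the same server, and flag stretches where the host logged nothing at all, which should line up with the telemetry gaps in the GUI. The sketch below is a hypothetical helper, not part of PVE, and the 60-second silence threshold is an arbitrary assumption. Also keep in mind that, as far as I know, the Linux NFS client prints "not responding, still trying" only after a request has already hit its major timeout (see `timeo` and `retrans` in nfs(5)), so a stall can begin well before the first message is logged.

```python
import re
from datetime import datetime
from typing import Iterable, Iterator, Optional, Tuple

# Matches the kernel's NFS client messages quoted above.
NFS_RE = re.compile(r"nfs: server (\S+) (not responding|OK)")


def parse_time(line: str, year: int = 2000) -> Optional[datetime]:
    """Return the syslog timestamp of a line (placeholder year), or None if absent."""
    try:
        stamp = " ".join(line.split()[:3])
        return datetime.strptime(f"{year} {stamp}", "%Y %b %d %H:%M:%S")
    except ValueError:
        return None


def nfs_outages(lines: Iterable[str]) -> Iterator[Tuple[str, datetime, datetime, float]]:
    """Yield (server, first 'not responding', following 'OK', seconds) per outage."""
    pending = {}
    for line in lines:
        match = NFS_RE.search(line)
        ts = parse_time(line)
        if match is None or ts is None:
            continue
        server, state = match.groups()
        if state == "not responding":
            pending.setdefault(server, ts)  # keep the earliest unresolved report
        elif server in pending:
            start = pending.pop(server)
            yield server, start, ts, (ts - start).total_seconds()


def silent_gaps(lines: Iterable[str], threshold: float = 60.0) -> Iterator[Tuple[datetime, datetime, float]]:
    """Yield (last stamp before, first stamp after, seconds) for silences >= threshold."""
    previous = None
    for line in lines:
        ts = parse_time(line)
        if ts is None:
            continue
        if previous is not None and (ts - previous).total_seconds() >= threshold:
            yield previous, ts, (ts - previous).total_seconds()
        previous = ts


sample = [
    "Jun 23 14:01:22 pve-1 kernel: nfs: server 10.0.1.70 not responding, still trying",
    "Jun 23 14:01:22 pve-1 kernel: nfs: server 10.0.1.70 OK",
]
for server, start, end, secs in nfs_outages(sample):
    print(server, start.time(), end.time(), secs)  # 10.0.1.70 14:01:22 14:01:22 0.0
```

On a real host you would feed both functions the lines of the syslog file or of `journalctl -o short` output (which uses the same timestamp format) from each node and compare the silent stretches against the times the GUI lost telemetry.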

Both host servers use SuperMicro DX10 motherboards with two 10-core Xeon E5-2640 v4 CPUs in SuperMicro 4U chassis, 256 GB of RAM, SSD-based zpools, and InfiniBand for the storage traffic (host-to-host backups, disk migration, etc.). I don't think the bottleneck is the target being busy flushing its write cache to disk, because there is absolutely no disk activity during these pauses.
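To back up the "no disk activity" observation with numbers from both hosts, one option is to snapshot the kernel's per-device counters in /proc/diskstats before and after a pause and diff them. This is a minimal sketch, assuming the standard Linux field layout (reads completed, sectors read, writes completed and sectors written in fields 4, 6, 8 and 10); the helper names are hypothetical and the device names in the test are made up.

```python
import time
from typing import Dict, List, Tuple

# (reads completed, sectors read, writes completed, sectors written)
Counters = Tuple[int, int, int, int]


def parse_diskstats(text: str) -> Dict[str, Counters]:
    """Map device name -> I/O counters from /proc/diskstats-formatted text."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 10:
            continue  # skip blank or truncated lines
        # fields[2] is the device name; indices 3, 5, 7, 9 hold the counters above
        stats[fields[2]] = (int(fields[3]), int(fields[5]), int(fields[7]), int(fields[9]))
    return stats


def io_delta(before: Dict[str, Counters], after: Dict[str, Counters]) -> Dict[str, Counters]:
    """Per-device counter differences for devices present in both snapshots."""
    return {
        dev: tuple(new - old for new, old in zip(after[dev], before[dev]))
        for dev in after
        if dev in before
    }


def idle_devices(before: Dict[str, Counters], after: Dict[str, Counters]) -> List[str]:
    """Devices whose counters did not change at all between the snapshots."""
    return sorted(dev for dev, delta in io_delta(before, after).items() if not any(delta))


def snapshot(path: str = "/proc/diskstats") -> Dict[str, Counters]:
    with open(path) as fh:
        return parse_diskstats(fh.read())


def watch(interval: float = 5.0) -> None:
    """Print devices with no I/O over `interval` seconds (Linux only)."""
    before = snapshot()
    time.sleep(interval)
    print("idle:", ", ".join(idle_devices(before, snapshot())))
```

Calling `watch()` on both hosts while a copy is stalled would show whether every disk behind the source and target zpools is really idle, which would point the investigation at the network or NFS layer rather than the pools.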

I recently upgraded the Mellanox stack, which improved transfer speeds somewhat and made VZDump backups possible at all. Before the upgrade, backups would crash and require manually unlocking the host and killing the VZDump job; sometimes a reboot was needed afterwards. Now, when the copy stalls, it at least doesn't give up and crash. The host just "goes away" from the cluster for a while. Still, I now have a few production servers in this environment, and not being able to migrate virtual disks without the host intermittently dropping offline is bad for my stomach.

Sincerely,

GB
