Hi all,

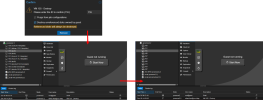

I am trying to use the clone function to create new VMs. A single clone works fine, but when I run several clones simultaneously, the following error occurs:

When the error occurred, I looked through the PVE-related logs but couldn't find any warning messages that indicate the cause.

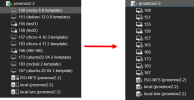

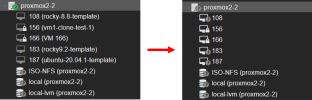

I also watched the progress output of the running clone tasks and noticed that the VM from the most recent clone (ID 166) showed no progress output at all.

Although the progress for the other VMs reached 100%, "TASK OK" never appeared, even after waiting for a long time.

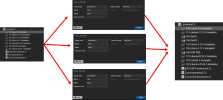

When the unknown state occurs, it first appears on three storages and then quickly spreads to the virtual machines.

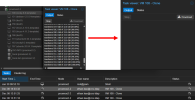

In this state the clone task keeps running, but its log window shows no output.

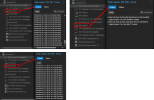


Here are the `pveproxy`, `pvestatd` and `pvedaemon` journal entries from around the time of the error:

```
root@proxmox2-2:~# journalctl -efu pveproxy.service
-- Journal begins at Fri 2023-12-01 15:04:50 CST. --
……
Dec 08 13:55:40 proxmox2-2 pveproxy[1408]: worker 679914 finished
Dec 08 13:55:40 proxmox2-2 pveproxy[1408]: starting 1 worker(s)
Dec 08 13:55:40 proxmox2-2 pveproxy[1408]: worker 682954 started
Dec 08 13:55:41 proxmox2-2 pveproxy[682953]: got inotify poll request in wrong process - disabling inotify
Dec 08 13:55:43 proxmox2-2 pveproxy[682953]: worker exit
Dec 08 13:57:57 proxmox2-2 pveproxy[680937]: worker exit
Dec 08 13:57:57 proxmox2-2 pveproxy[1408]: worker 680937 finished
Dec 08 13:57:57 proxmox2-2 pveproxy[1408]: starting 1 worker(s)
Dec 08 13:57:57 proxmox2-2 pveproxy[1408]: worker 683307 started
Dec 08 14:00:08 proxmox2-2 pveproxy[680671]: worker exit
Dec 08 14:00:08 proxmox2-2 pveproxy[1408]: worker 680671 finished
Dec 08 14:00:08 proxmox2-2 pveproxy[1408]: starting 1 worker(s)
Dec 08 14:00:08 proxmox2-2 pveproxy[1408]: worker 683640 started
Dec 08 14:05:10 proxmox2-2 pveproxy[682954]: worker exit
Dec 08 14:05:10 proxmox2-2 pveproxy[1408]: worker 682954 finished
Dec 08 14:05:10 proxmox2-2 pveproxy[1408]: starting 1 worker(s)
Dec 08 14:05:10 proxmox2-2 pveproxy[1408]: worker 684406 started
Dec 08 14:09:57 proxmox2-2 pveproxy[683640]: worker exit
Dec 08 14:09:57 proxmox2-2 pveproxy[1408]: worker 683640 finished
Dec 08 14:09:57 proxmox2-2 pveproxy[1408]: starting 1 worker(s)
Dec 08 14:09:57 proxmox2-2 pveproxy[1408]: worker 684936 started
Dec 08 14:11:02 proxmox2-2 pveproxy[684406]: proxy detected vanished client connection
Dec 08 14:11:02 proxmox2-2 pveproxy[684406]: proxy detected vanished client connection
Dec 08 14:11:03 proxmox2-2 pveproxy[683307]: proxy detected vanished client connection
Dec 08 14:11:20 proxmox2-2 pveproxy[684406]: proxy detected vanished client connection
Dec 08 14:11:20 proxmox2-2 pveproxy[683307]: proxy detected vanished client connection
Dec 08 14:12:35 proxmox2-2 pveproxy[683307]: proxy detected vanished client connection
Dec 08 14:13:34 proxmox2-2 pveproxy[684936]: proxy detected vanished client connection
Dec 08 14:14:09 proxmox2-2 pveproxy[684406]: proxy detected vanished client connection
```

```
root@proxmox2-2:~# journalctl -efu pvestatd.service
-- Journal begins at Fri 2023-12-01 15:04:50 CST. --
……
Dec 08 13:51:44 proxmox2-2 pvestatd[681158]: status update time (8.053 seconds)
Dec 08 14:03:49 proxmox2-2 pvestatd[681158]: status update time (13.025 seconds)
```

```
root@proxmox2-2:~# journalctl -efu pvedaemon.service
-- Journal begins at Fri 2023-12-01 15:04:50 CST. --
……
Dec 08 13:51:18 proxmox2-2 pvedaemon[681054]: <ethan.kao@cns-ldap> starting task UPID:proxmox2-2:000A68D2:0175D2C0:6572AED6:qmclone:108:ethan.kao@cns-ldap:
Dec 08 13:51:25 proxmox2-2 pvedaemon[681052]: <ethan.kao@cns-ldap> starting task UPID:proxmox2-2:000A6919:0175D534:6572AEDD:qmclone:108:ethan.kao@cns-ldap:
Dec 08 14:03:17 proxmox2-2 pvedaemon[681052]: worker exit
Dec 08 14:03:17 proxmox2-2 pvedaemon[681051]: worker 681052 finished
Dec 08 14:03:17 proxmox2-2 pvedaemon[681051]: starting 1 worker(s)
Dec 08 14:03:17 proxmox2-2 pvedaemon[681051]: worker 684142 started
Dec 08 14:03:32 proxmox2-2 pvedaemon[681054]: <ethan.kao@cns-ldap> starting task UPID:proxmox2-2:000A709F:0176F165:6572B1B4:qmclone:108:ethan.kao@cns-ldap:
Dec 08 14:06:46 proxmox2-2 pvedaemon[681054]: worker exit
Dec 08 14:06:46 proxmox2-2 pvedaemon[681051]: worker 681054 finished
Dec 08 14:06:46 proxmox2-2 pvedaemon[681051]: starting 1 worker(s)
Dec 08 14:06:46 proxmox2-2 pvedaemon[681051]: worker 684589 started
Dec 08 14:06:50 proxmox2-2 pvedaemon[684142]: <root@pam> successful auth for user 'sam.huang@cns-ldap'
```


I managed to restart the service with `systemctl restart pvedaemon`; it took about a minute to succeed. After the GUI came back, the three virtual machines that had been executing the cloning process were locked.

When I unlocked one of the interrupted virtual machines and tried to delete it, the deletion task ran for a long time and eventually the unknown state came back.
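For anyone who wants to reproduce this from the command line instead of the GUI, here is a minimal sketch that starts several clones of the same VM at once. It assumes the GUI action corresponds to a full clone made with `qm clone` and that VM 108 is the source (as the `qmclone:108` entries in the `pvedaemon` log suggest); the helper name `clone_many` and the new VM IDs are hypothetical, and it is not confirmed that this triggers exactly the same failure.

```shell
#!/bin/sh
# Hypothetical helper: start several full clones of one source VM at the
# same time, then wait until every clone command has exited.
# Usage: clone_many SOURCE_VMID NEWID [NEWID ...]
# The VM IDs are placeholders; use IDs that are free on your node.
clone_many() {
    src=$1
    shift
    for newid in "$@"; do
        # Each clone is started in the background, so all tasks run concurrently.
        qm clone "$src" "$newid" --full 1 &
    done
    # Block until all background clone commands have finished.
    wait
}
```

For example, `clone_many 108 201 202 203` would start three full clones of VM 108 in parallel and return once all of them have exited. Running the same clones one after another (without the `&`) gives a sequential baseline to compare against.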