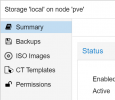

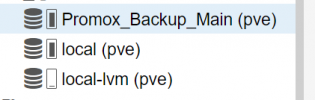

I've gained a much better understanding of where my data is:

Proxmox_Backup_Main - holds my backups and shares the exact same underlying storage as local (pve).

There was also a "Proxmox Backup" storage, which I had deleted, and which I suspect is still taking up space on the disk.

local-lvm is where the disks of the currently running VMs live.

@bbgeek17


root@pve:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

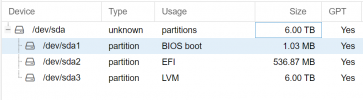

sda 8:0 0 5.5T 0 disk

├─sda1 8:1 0 1007K 0 part

├─sda2 8:2 0 512M 0 part

└─sda3 8:3 0 5.5T 0 part

├─pve-swap 253:0 0 8G 0 lvm [SWAP]

├─pve-root 253:1 0 96G 0 lvm /

├─pve-data_tmeta 253:2 0 15.8G 0 lvm

│ └─pve-data-tpool 253:4 0 5.3T 0 lvm

│ ├─pve-data 253:5 0 5.3T 1 lvm

│ ├─pve-vm--101--disk--0 253:6 0 100G 0 lvm

│ ├─pve-vm--300--disk--0 253:7 0 100G 0 lvm

│ └─pve-vm--200--disk--0 253:9 0 100G 0 lvm

└─pve-data_tdata 253:3 0 5.3T 0 lvm

└─pve-data-tpool 253:4 0 5.3T 0 lvm

├─pve-data 253:5 0 5.3T 1 lvm

├─pve-vm--101--disk--0 253:6 0 100G 0 lvm

├─pve-vm--300--disk--0 253:7 0 100G 0 lvm

└─pve-vm--200--disk--0 253:9 0 100G 0 lvm

sr0 11:0 1 1024M 0 rom

-------------------------------------------------------------------------------------

root@pve:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

base-100-disk-0 pve Vri---tz-k 100.00g data

base-102-disk-0 pve Vri---tz-k 100.00g data

data pve twi-aotz-- <5.31t 0.97 0.33

root pve -wi-ao---- 96.00g

snap_vm-101-disk-0_Wazuh_installed_loopback pve Vri---tz-k 100.00g data vm-101-disk-0

snap_vm-300-disk-0_hybrid_running pve Vri---tz-k 100.00g data vm-300-disk-0

swap pve -wi-ao---- 8.00g

vm-101-disk-0 pve Vwi-aotz-- 100.00g data 12.37

vm-200-disk-0 pve Vwi-aotz-- 100.00g data 4.20

vm-300-disk-0 pve Vwi-aotz-- 100.00g data 11.46

-------------------------------------------------------------------------------------

root@pve:~# mount

sysfs on /sys type sysfs (rw,nosuid,nodev,noexec,relatime)

proc on /proc type proc (rw,relatime)

udev on /dev type devtmpfs (rw,nosuid,relatime,size=65944968k,nr_inodes=16486242,mode=755,inode64)

devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000)

tmpfs on /run type tmpfs (rw,nosuid,nodev,noexec,relatime,size=13195864k,mode=755,inode64)

/dev/mapper/pve-root on / type ext4 (rw,relatime,errors=remount-ro)

securityfs on /sys/kernel/security type securityfs (rw,nosuid,nodev,noexec,relatime)

tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev,inode64)

tmpfs on /run/lock type tmpfs (rw,nosuid,nodev,noexec,relatime,size=5120k,inode64)

cgroup2 on /sys/fs/cgroup type cgroup2 (rw,nosuid,nodev,noexec,relatime)

pstore on /sys/fs/pstore type pstore (rw,nosuid,nodev,noexec,relatime)

bpf on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)

systemd-1 on /proc/sys/fs/binfmt_misc type autofs (rw,relatime,fd=30,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=35777)

mqueue on /dev/mqueue type mqueue (rw,nosuid,nodev,noexec,relatime)

debugfs on /sys/kernel/debug type debugfs (rw,nosuid,nodev,noexec,relatime)

hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime,pagesize=2M)

tracefs on /sys/kernel/tracing type tracefs (rw,nosuid,nodev,noexec,relatime)

fusectl on /sys/fs/fuse/connections type fusectl (rw,nosuid,nodev,noexec,relatime)

configfs on /sys/kernel/config type configfs (rw,nosuid,nodev,noexec,relatime)

sunrpc on /run/rpc_pipefs type rpc_pipefs (rw,relatime)

lxcfs on /var/lib/lxcfs type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)

/dev/fuse on /etc/pve type fuse (rw,nosuid,nodev,relatime,user_id=0,group_id=0,default_permissions,allow_other)

tmpfs on /run/user/0 type tmpfs (rw,nosuid,nodev,relatime,size=13195860k,nr_inodes=3298965,mode=700,inode64)

-------------------------------------------------------------------------------------

root@pve:~# df -h

Filesystem Size Used Avail Use% Mounted on

udev 63G 0 63G 0% /dev

tmpfs 13G 1.7M 13G 1% /run

/dev/mapper/pve-root 94G 89G 525M 100% /

tmpfs 63G 49M 63G 1% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/fuse 128M 20K 128M 1% /etc/pve

tmpfs 13G 0 13G 0% /run/user/0
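To pick out the full filesystem from a listing like this without reading every row, a small filter can help. This is only a sketch: `full_filesystems` is a name I made up, it expects the six-column layout shown above (as produced by `df -P` or `df -h`), and it assumes mount points contain no spaces.

```shell
#!/bin/sh
# Read df-style output on stdin and print "<mount point> <use%>"
# for every filesystem at or above the given usage threshold.
# full_filesystems is a hypothetical helper, not a Proxmox tool.
full_filesystems() {
    threshold="${1:-90}"
    awk -v t="$threshold" 'NR > 1 {   # skip the header row
        use = $5
        sub(/%/, "", use)             # "100%" -> "100"
        if (use + 0 >= t + 0) print $6, $5
    }'
}

# Usage: df -P | full_filesystems 90
```

Fed the listing above, the only row that passes a 90% threshold is the root filesystem.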

-------------------------------------------------------------------------------------

root@pve:~# du -h -d1 /

14G /Proxmox_Backup_Main

du: cannot access '/proc/27804/task/27804/fd/3': No such file or directory

du: cannot access '/proc/27804/task/27804/fdinfo/3': No such file or directory

du: cannot access '/proc/27804/fd/4': No such file or directory

du: cannot access '/proc/27804/fdinfo/4': No such file or directory

0 /proc

8.7G /var

4.0K /home

92M /boot

12K /backup

2.3G /usr

0 /sys

20K /lost+found

4.0K /media

46M /dev

4.0K /mnt

40K /tmp

1.7M /run

4.9M /etc

4.0K /opt

4.0K /srv

40K /root

65G /Proxmox_Backup

89G /

Looks like /Proxmox_Backup is the culprit: it accounts for 65G of the 89G used on /.
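For drilling further into a directory like this, a sorted variant of the same `du` call is handy. The sketch below assumes a `du` that supports `-x` and `-d` (GNU coreutils does); `top_dirs` is just a helper name I picked. The `-x` flag keeps `du` on a single filesystem, which also avoids the `/proc` noise seen in the output above.

```shell
#!/bin/sh
# Print the largest immediate subdirectories of a path, biggest first.
# top_dirs is a hypothetical helper name, not an existing command.
top_dirs() {
    dir="${1:-/}"
    count="${2:-10}"
    # -x : stay on one filesystem (skips /proc, /sys, /dev, other mounts)
    # -k : report sizes in KiB so a plain numeric sort works
    # -d1: only one level below the starting directory
    du -x -k -d1 "$dir" 2>/dev/null | sort -rn | head -n "$count"
}

# Usage: top_dirs /Proxmox_Backup 5
```

The first line of the result is the total for the starting directory itself; the lines after it are its subdirectories in descending size.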

@milew I believe you meant pve (typo?). See below:

root@pve:/etc# cat /etc/pve/storage.cfg

dir: local

path /var/lib/vz

content vztmpl,iso,backup

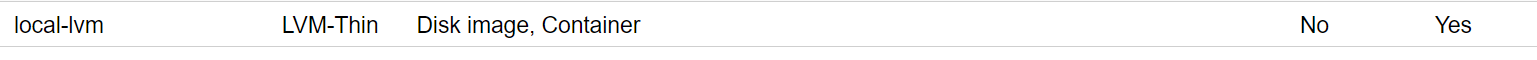

lvmthin: local-lvm

thinpool data

vgname pve

content rootdir,images

dir: Promox_Backup_Main

path /Proxmox_Backup_Main

content images,backup

nodes pve

prune-backups keep-all=1

shared 0
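Note that the config above only defines /var/lib/vz and /Proxmox_Backup_Main as directory paths, so /Proxmox_Backup is not referenced by any storage. As far as I know, removing a storage entry in Proxmox only removes the definition and leaves the data on disk, which would fit. A rough way to check whether a directory is still configured, assuming the usual `path <dir>` lines in storage.cfg (both function names are made up for this sketch):

```shell
#!/bin/sh
# Print every "path" value defined in a storage.cfg-style file.
# storage_paths and is_storage_path are hypothetical helpers.
storage_paths() {
    awk '$1 == "path" { print $2 }' "${1:-/etc/pve/storage.cfg}"
}

# Succeed (exit 0) only if the directory is configured as a storage path.
# -x matches the whole line, so /Proxmox_Backup does not match
# /Proxmox_Backup_Main; -F treats the argument as a literal string.
is_storage_path() {
    storage_paths "${2:-/etc/pve/storage.cfg}" | grep -qxF -- "$1"
}

# Usage:
#   is_storage_path /Proxmox_Backup || echo "not referenced by any storage"
```

If the directory turns out to be unreferenced, it is still worth looking at what is inside before deleting anything.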