I've been trying to install a Windows 10 VM for several days, and it's been nearly impossible.

Every time I reboot or shut down the VM, Proxmox gets stuck. I can't stop or reset the VM, even after running the unlock command. After a few minutes, Proxmox disconnects and dies with no output on the display, and I have to hard-reset the machine.
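For reference, the stop and unlock attempts mentioned above look roughly like this from the host shell. This is only a sketch: the VM ID `100` is a placeholder (not necessarily my real ID), and the `run` wrapper with `DRY_RUN` is a helper added here so the snippet just prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Placeholder VM ID; replace with the ID of the Windows 10 VM.
VMID="${VMID:-100}"

# Hypothetical helper: print the command by default (DRY_RUN=1),
# execute it only when DRY_RUN=0 is set explicitly.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Check what state Proxmox thinks the VM is in.
run qm status "$VMID" --verbose

# Remove a stale lock left behind by the hung reboot/shutdown task.
run qm unlock "$VMID"

# Ask Proxmox to stop the VM.
run qm stop "$VMID"
```

On my host, these are the kinds of commands that hang or have no effect once the VM is stuck.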

After the hard reset, the CTs and the TrueNAS VM take about 5 minutes to boot up.

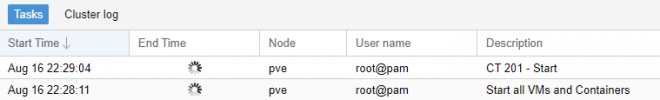

Screenshot when the Win10 VM gets stuck:

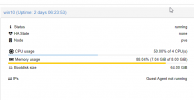

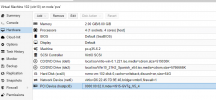

Win10 VM config:

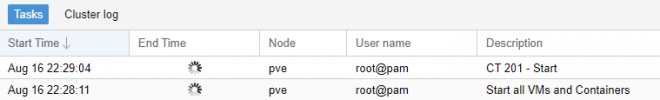

Image when booting up.

I hope you can help me diagnose this. Should I do a clean install of Proxmox?

I can back up the CTs and just assign the IPs again. The problem might be the TrueNAS VM, but as far as I know, it recognizes the existing pools from the disks, right?

Output of `pveversion -v`:

    proxmox-ve: 7.2-1 (running kernel: 5.15.39-3-pve)
    pve-manager: 7.2-7 (running version: 7.2-7/d0dd0e85)
    pve-kernel-5.15: 7.2-8
    pve-kernel-helper: 7.2-8
    pve-kernel-5.13: 7.1-9
    pve-kernel-5.15.39-3-pve: 5.15.39-3
    pve-kernel-5.15.35-2-pve: 5.15.35-5
    pve-kernel-5.15.35-1-pve: 5.15.35-3
    pve-kernel-5.13.19-6-pve: 5.13.19-15
    pve-kernel-5.13.19-2-pve: 5.13.19-4
    ceph-fuse: 15.2.15-pve1
    corosync: 3.1.5-pve2
    criu: 3.15-1+pve-1
    glusterfs-client: 9.2-1
    ifupdown2: 3.1.0-1+pmx3
    ksm-control-daemon: 1.4-1
    libjs-extjs: 7.0.0-1
    libknet1: 1.24-pve1
    libproxmox-acme-perl: 1.4.2
    libproxmox-backup-qemu0: 1.3.1-1
    libpve-access-control: 7.2-4
    libpve-apiclient-perl: 3.2-1
    libpve-common-perl: 7.2-2
    libpve-guest-common-perl: 4.1-2
    libpve-http-server-perl: 4.1-3
    libpve-storage-perl: 7.2-7
    libspice-server1: 0.14.3-2.1
    lvm2: 2.03.11-2.1
    lxc-pve: 5.0.0-3
    lxcfs: 4.0.12-pve1
    novnc-pve: 1.3.0-3
    proxmox-backup-client: 2.2.5-1
    proxmox-backup-file-restore: 2.2.5-1
    proxmox-mini-journalreader: 1.3-1
    proxmox-widget-toolkit: 3.5.1
    pve-cluster: 7.2-2
    pve-container: 4.2-2
    pve-docs: 7.2-2
    pve-edk2-firmware: 3.20210831-2
    pve-firewall: 4.2-5
    pve-firmware: 3.5-1
    pve-ha-manager: 3.4.0
    pve-i18n: 2.7-2
    pve-qemu-kvm: 6.2.0-11
    pve-xtermjs: 4.16.0-1
    qemu-server: 7.2-3
    smartmontools: 7.2-pve3
    spiceterm: 3.2-2
    swtpm: 0.7.1~bpo11+1
    vncterm: 1.7-1
    zfsutils-linux: 2.1.5-pve1
