First of all: thanks to the dev team for working hard to accommodate VMware users who might convert to Proxmox! I'm probably doing something wrong, but so far it doesn't work for me.

I've set up a Proxmox POC at work next to our VMware cluster. Naturally, the first thing I did after reading about the ESXi import wizard was upgrade. I added one of our ESXi servers as import storage, and it seemed to work at the time of setup: I was able to see the list of VMs once. But when I try to view the list again, I get an error 500, and the interface shows this:

Code:

(vim.fault.HostConnectFault) { (500)

Code:

root@pve1:~# systemctl status pve-esxi*

● pve-esxi-fuse-pve1.example.org.scope

Loaded: loaded (/run/systemd/transient/pve-esxi-fuse-vms1.example.org.scope; transient)

Transient: yes

Active: active (running) since Thu 2024-03-28 05:12:48 CET; 52min ago

Tasks: 34 (limit: 231862)

Memory: 7.3M

CPU: 454ms

CGroup: /system.slice/pve-esxi-fuse-vms1.example.org.scope

└─141992 /usr/libexec/pve-esxi-import-tools/esxi-folder-fuse --skip-cert-verification --change-user nobody --chang>

Mar 28 05:12:48 pve1 systemd[1]: Started pve-esxi-fuse-vms1.example.org.scope.

Mar 28 05:13:00 pve1 esxi-folder-fus[141992]: pve1 esxi-folder-fuse[141992]: esxi fuse mount ready

lines 1-12/12 (END)

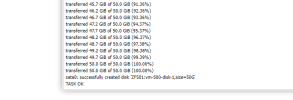

Code:

root@pve1:~# grep vms1 /var/log/*/* # then piped through sed; I redacted my company's real domain name, SAN name, and 3 VM names.

grep: /var/log/journal/3d3ea5d411a34d5299bff9ac341c2d7b/system.journal: binary file matches

/var/log/pveproxy/access.log:::ffff:172.22.39.2 - root@pam [28/03/2024:05:13:10 +0100] "GET /api2/extjs/storage/vms1.example.org?_dc=1711599190610 HTTP/1.1" 200 234

/var/log/pveproxy/access.log:::ffff:172.22.39.2 - root@pam [28/03/2024:05:13:27 +0100] "GET /api2/json/nodes/pve3/storage/vms1.example.org/content?content=import HTTP/1.1" 200 837

/var/log/pveproxy/access.log:::ffff:172.22.39.2 - root@pam [28/03/2024:05:14:55 +0100] "GET /api2/extjs/nodes/pve3/storage/vms1.example.org/import-metadata?volume=ha-datacenter%2FSAN01_production02%2Fsomevms.example.org%2Fsomevms.example.org.vmx&_dc=1711599295190 HTTP/1.1" 200 96

/var/log/pveproxy/access.log:::ffff:172.22.39.2 - root@pam [28/03/2024:05:15:00 +0100] "GET /api2/extjs/nodes/pve3/storage/vms1.example.org/import-metadata?volume=ha-datacenter%2FSAN01_production02%2Fsomevms.example.org%2Fsomevms.example.org.vmx&_dc=1711599300895 HTTP/1.1" 200 96

/var/log/pveproxy/access.log:::ffff:172.22.39.2 - root@pam [28/03/2024:05:15:49 +0100] "GET /api2/extjs/nodes/pve3/storage/vms1.example.org/import-metadata?volume=ha-datacenter%2FSAN01_production02%2Fsomevms.example.org%2Fsomevms.example.org.vmx&_dc=1711599349061 HTTP/1.1" 200 96

/var/log/pveproxy/access.log:::ffff:172.22.39.2 - root@pam [28/03/2024:05:16:07 +0100] "GET /api2/json/nodes/pve3/storage/vms1.example.org/content?content=import HTTP/1.1" 500 13

/var/log/pveproxy/access.log:::ffff:172.22.39.2 - root@pam [28/03/2024:05:16:16 +0100] "GET /api2/json/nodes/pve3/storage/vms1.example.org/content?content=import HTTP/1.1" 500 13

/var/log/pveproxy/access.log:::ffff:172.22.39.2 - root@pam [28/03/2024:05:27:54 +0100] "GET /api2/json/nodes/pve3/storage/vms1.example.org/content?content=import HTTP/1.1" 500 13

/var/log/pveproxy/access.log:::ffff:172.22.39.2 - root@pam [28/03/2024:05:28:01 +0100] "GET /api2/json/nodes/pve3/storage/vms1.example.org/content?content=import HTTP/1.1" 500 13

/var/log/pveproxy/access.log:::ffff:172.22.39.2 - root@pam [28/03/2024:05:28:12 +0100] "GET /api2/json/nodes/pve1/storage/vms1.example.org/content?content=import HTTP/1.1" 500 13

/var/log/pveproxy/access.log:::ffff:172.22.39.2 - root@pam [28/03/2024:05:28:15 +0100] "GET /api2/json/nodes/pve2/storage/vms1.example.org/content?content=import HTTP/1.1" 500 13

/var/log/pveproxy/access.log.1:::ffff:172.22.39.7 - root@pam [27/03/2024:20:46:01 +0100] "GET /api2/json/nodes/pve2/storage/vms1.example.org/content?content=import HTTP/1.1" 500 13

/var/log/pveproxy/access.log.1:::ffff:172.22.39.7 - root@pam [27/03/2024:20:46:12 +0100] "GET /api2/extjs/storage/vms1.example.org?_dc=1711568772158 HTTP/1.1" 200 209

/var/log/pveproxy/access.log.1:::ffff:172.22.39.7 - root@pam [27/03/2024:20:46:22 +0100] "PUT /api2/extjs/storage/vms1.example.org HTTP/1.1" 200 65

/var/log/pveproxy/access.log.1:::ffff:172.22.39.7 - root@pam [27/03/2024:20:46:24 +0100] "GET /api2/extjs/storage/vms1.example.org?_dc=1711568784015 HTTP/1.1" 200 209

/var/log/pveproxy/access.log.1:::ffff:172.22.39.7 - root@pam [27/03/2024:20:46:25 +0100] "PUT /api2/extjs/storage/vms1.example.org HTTP/1.1" 200 65

/var/log/pveproxy/access.log.1:::ffff:172.22.39.7 - root@pam [27/03/2024:20:46:28 +0100] "GET /api2/json/nodes/pve2/storage/vms1.example.org/content?content=import HTTP/1.1" 500 13

/var/log/pveproxy/access.log.1:::ffff:172.22.39.7 - root@pam [27/03/2024:20:54:58 +0100] "GET /api2/json/nodes/pve3/storage/vms1.example.org/content?content=import HTTP/1.1" 500 13

/var/log/pveproxy/access.log.1:::ffff:172.22.39.7 - root@pam [27/03/2024:20:55:16 +0100] "GET /api2/json/nodes/pve3/storage/vms1.example.org/content?content=import HTTP/1.1" 500 13

/var/log/pveproxy/access.log.1:::ffff:172.22.39.7 - root@pam [27/03/2024:20:55:49 +0100] "DELETE /api2/extjs/storage//vms1.example.org HTTP/1.1" 200 25

root@pve1:~#
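
To make the pattern in those access log lines easier to see, something like the following could tally the HTTP status codes per node for the import listing requests. This is only a rough sketch: the function name `summarize_import_status` is made up for illustration, and it assumes the access log layout shown above, where the status code is the second-to-last field and the node name follows `/nodes/` in the request path.

```shell
#!/bin/sh
# Hypothetical helper (not part of Proxmox): count import-listing requests
# per node and HTTP status. Reads the files given as arguments, or stdin
# when no file is given.
summarize_import_status() {
    # -h suppresses the "filename:" prefix when several files are searched.
    grep -h 'content?content=import' "$@" |
        awk '{
            # Extract the node name from ".../nodes/<node>/storage/...".
            if (match($0, "/nodes/[^/]+/")) {
                node = substr($0, RSTART + 7, RLENGTH - 8)
                # Assumption: the status code is the second-to-last field.
                count[node " " $(NF - 1)]++
            }
        }
        END { for (k in count) print k, count[k] }' |
        sort
}
```

It could be run as `summarize_import_status /var/log/pveproxy/access.log /var/log/pveproxy/access.log.1`, which prints one line per node and status code with the number of matching requests.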