[SOLVED] Proxmox 8.0 / Kernel 6.2.x 100% CPU issue with Windows Server 2019 VMs

- Thread starter: jens-maus
- Tags: issue, proxmox8, windows2019


This is interesting.

My GUI install of Server 2022 (also, why are so many of you on 2019? That's so half a decade ago... ;-) )

My 2019 Core install, just for Admin Center

So it's not a general 'Windows Server 2019 pegs the CPU' issue; it's definitely more complicated than that. Are you all running with UEFI and TPM? (I am, on all Windows VMs.) I am using host as the CPU type; I see that for folks with the issue, that didn't help.

What caching policy / disk driver are you using? I use write-through and VirtIO Block. Over the years I've learned that paging performance issues can easily tank a Windows server and spike the CPU, even if you have gobs of free memory in the VM. Windows is interesting in how it uses memory for cache and paging in general.

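One way to compare caching policies across VMs is to read the disk lines of `qm config <vmid>`. A minimal sketch, assuming disk entries look like `virtio0: <storage>:<volume>,cache=...,size=...`; the function name `disk_cache_modes` and VM ID 100 are placeholders. Disks without an explicit `cache=` option use the Proxmox default (no cache), and CD-ROM drives are skipped.

```shell
#!/usr/bin/env bash
# Sketch: print each VM disk and its cache mode from `qm config <vmid>` output (stdin).
# Assumption: disk lines look like "virtio0: local-lvm:vm-100-disk-0,cache=writethrough,size=64G".
disk_cache_modes() {
  awk -F': ' '
    # Only real disk slots (ide0, sata1, scsi2, virtio0, ...), not CD-ROM drives.
    $1 ~ /^(ide|sata|scsi|virtio)[0-9]+$/ && $2 !~ /media=cdrom/ {
      mode = "none (default)"          # no cache= option means the Proxmox default
      n = split($2, opts, ",")
      for (i = 1; i <= n; i++)
        if (opts[i] ~ /^cache=/) mode = substr(opts[i], 7)   # text after "cache="
      print $1, mode
    }'
}

# Usage on a Proxmox host (100 is a placeholder VM ID):
#   qm config 100 | disk_cache_modes
```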

I can confirm that with 6.2.16-14 the problem still exists.

Setting "mitigations=off" is the only solution.

@ProxmoxTeam

Any progress on this issue?

P.S. Taking into consideration that KSM with kernel 6.x is broken on dual-socket boards, +1 vote to release the 5.15 kernel.


Setting `mitigations=off` didn't help me either.

I use an Intel Xeon Gold 5317 CPU on 2 sockets.

The VM is a Windows Server 2022; it usually has a CPU load of 20-35% (12 cores), which is quite a significant load.

When using kernel 6.x I get a load of 95 to 99%, so there is no chance of a working system at all. Increasing the number of VM cores didn't help.


If KSM is in use on your systems, try disabling it with `systemctl disable --now ksmtuned` on top of `mitigations=off`, and cross-check with `lscpu` that mitigations are really off.
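To cross-check what `lscpu` reports, the vulnerability lines can be filtered for entries that still say "Mitigation". A minimal sketch, assuming `lscpu` output in the format shown in this thread (`Name: Status`); the function name `active_mitigations` is hypothetical. With `mitigations=off` actually applied, it should print nothing, because those lines then read "Vulnerable" or "Not affected".

```shell
#!/usr/bin/env bash
# Sketch: read `lscpu` output on stdin and print the vulnerability lines
# that still report an active "Mitigation".
active_mitigations() {
  # Match "Some name: Mitigation; ..." and strip any leading indentation.
  grep -E ':[[:space:]]+Mitigation' | sed 's/^[[:space:]]*//'
}

# Usage on the host:
#   lscpu | active_mitigations
```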

I've changed the /etc/default/grub file and added `mitigations=off`. This is what I get with `lscpu`:

```
Model name: Intel(R) Xeon(R) Gold 5317 CPU @ 3.00GHz
(...)
Vulnerabilities:
Gather data sampling: Vulnerable: No microcode
Itlb multihit: Not affected
L1tf: Not affected
Mds: Not affected
Meltdown: Not affected
Mmio stale data: Vulnerable: Clear CPU buffers attempted, no microcode; SMT vulnerable
Retbleed: Not affected
Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl
Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization
Spectre v2: Mitigation; Enhanced IBRS, IBPB conditional, RSB filling, PBRSB-eIBRS SW sequence
Srbds: Not affected
Tsx async abort: Not affected
```

I still get massive loads. Am I doing something wrong? If so, I'd be happy to learn.

1. Updated /etc/default/grub:

   ```
   GRUB_CMDLINE_LINUX_DEFAULT="quiet mitigations=off"
   GRUB_CMDLINE_LINUX="mitigations=off"
   ```

2. `update-grub`
3. `reboot`
4. `lscpu`
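The GRUB edit above can be scripted so that re-running it doesn't add the option twice. This is a sketch assuming GNU sed and the usual `GRUB_CMDLINE_LINUX_DEFAULT="..."` line; `add_grub_opt` is a hypothetical helper name. It only has an effect if the host really boots through GRUB; Proxmox hosts booted via proxmox-boot-tool with systemd-boot (for example ZFS root on UEFI) take their kernel command line from /etc/kernel/cmdline instead.

```shell
#!/usr/bin/env bash
# Sketch: idempotently append a kernel option to GRUB_CMDLINE_LINUX_DEFAULT
# in a GRUB defaults file (normally /etc/default/grub). Requires GNU sed (-i).
add_grub_opt() {
  local file=$1 opt=$2
  # Skip if the option is already on the GRUB_CMDLINE_LINUX_DEFAULT line.
  if ! grep -q "^GRUB_CMDLINE_LINUX_DEFAULT=.*${opt}" "$file"; then
    # Insert the option just before the closing double quote.
    sed -i "s/^\(GRUB_CMDLINE_LINUX_DEFAULT=\"[^\"]*\)\"/\1 ${opt}\"/" "$file"
  fi
}

# Usage (as root), then regenerate the GRUB config and reboot:
#   add_grub_opt /etc/default/grub mitigations=off
#   update-grub
#   reboot
```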

If I am not mistaken, `mitigations=off` is not applied.

What filesystem is behind your root partition?

In my case (ZFS) I had to edit the cmdfile file.

Check this post:

https://forum.proxmox.com/threads/disable-spectre-meltdown-mitigations.112553/post-485863
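Whichever file was edited, /proc/cmdline shows the options the running kernel was actually booted with, which is a quick way to tell whether `mitigations=off` was applied before looking at `lscpu`. A sketch; `cmdline_has_opt` is a hypothetical name, and its optional second argument exists only so it can be tested against a sample file.

```shell
#!/usr/bin/env bash
# Sketch: succeed if an exact boot option is present on the kernel command line.
# Defaults to /proc/cmdline, i.e. what the running kernel was booted with.
cmdline_has_opt() {
  local opt=$1 file=${2:-/proc/cmdline}
  # One token per line, then look for an exact, fixed-string match.
  tr -s ' ' '\n' < "$file" | grep -qxF -- "$opt"
}

# Usage on the host:
#   cmdline_has_opt mitigations=off && echo applied || echo "NOT applied"
#   proxmox-boot-tool status   # if the host uses proxmox-boot-tool, shows the ESPs it manages
```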

Looks like this is exactly what I needed. Thanks a lot!

Now my configuration looks like this:

```
Vulnerabilities:
Gather data sampling: Vulnerable: No microcode
Itlb multihit: Not affected
L1tf: Not affected
Mds: Not affected
Meltdown: Not affected
Mmio stale data: Vulnerable
Retbleed: Not affected
Spec store bypass: Vulnerable
Spectre v1: Vulnerable: __user pointer sanitization and usercopy barriers only; no swapgs barriers
Spectre v2: Vulnerable, IBPB: disabled, STIBP: disabled, PBRSB-eIBRS: Vulnerable
Srbds: Not affected
Tsx async abort: Not affected
```

Sadly my VM still has a massive workload with the current kernel 6.2.16-12-pve and so the problem remains.

Well, now it looks reasonable

Next, what about KSM? Have you disabled it?

Yup, it has already been deactivated:

```
systemctl status ksmtuned.service
○ ksmtuned.service - Kernel Samepage Merging (KSM) Tuning Daemon
     Loaded: loaded (/lib/systemd/system/ksmtuned.service; disabled; preset: enabled)
     Active: inactive (dead)
```

I'm really puzzled.

I can well imagine that my application VMs are not the norm, because they already have a 20-30% load anyway, and that's with 16 cores and 48 GB RAM. But with the current kernel, it has only gotten worse so far.

Could you try to continuously ping your VM from any other device on the network, such as the gateway?

Huge ping delay spikes are typical of the issue in this thread. If you don't see any, then probably something else is interfering.

P.S. Do not ping from another VM on the same host.

P.P.S. In my case, before setting mitigations=off I saw ping spikes, and the RDS server was almost impossible for users to work with.
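To make spikes easy to spot during a long-running ping, the output can be filtered by round-trip time. A sketch assuming Linux iputils `ping` output (`... time=0.41 ms`); the function name `ping_spikes`, the 50 ms threshold and the address 192.0.2.10 are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: read `ping` output on stdin and print only replies whose
# round-trip time exceeds the given threshold in milliseconds.
ping_spikes() {
  local threshold_ms=$1
  awk -v t="$threshold_ms" '{
    for (i = 1; i <= NF; i++)
      # Field looks like "time=0.41"; the number starts at character 6.
      if ($i ~ /^time=/ && substr($i, 6) + 0 > t + 0) { print; fflush() }
  }'
}

# Usage from another machine on the network (not a VM on the same host);
# -D prefixes each reply with a timestamp:
#   ping -D 192.0.2.10 | ping_spikes 50
```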

Hello. I have the same problem as described in post #1.

I worked around it following @Whatever's instructions: I added `mitigations=off` to the end of /etc/kernel/cmdline (not "cdmfile" in my case) and disabled KSM using `systemctl disable --now ksmtuned`.

Steps taken:

1. `nano /etc/kernel/cmdline` -> `root=ZFS=rpool/ROOT/pve-1 boot=zfs mitigations=off`
2. `proxmox-boot-tool refresh`
3. `systemctl disable --now ksmtuned`
4. `reboot`

My specs:

- Proxmox 8.0.4
- Kernel 6.2.16-15-pve
- Dual Xeon E5-2696 v3
- ZFS RAID1 (Enterprise NVMe)
- VM with Windows Server 2019 (64GB RAM + 32 cores)

I have other Proxmox 8 installations with similar configurations (also with Windows Server 2019 VMs) and had this problem on just one of them, even though another one has nearly identical hardware. Probably it's because of the RAM size and CPU cores assigned to the VM, as discussed in previous posts:

| PVE | Kernel | CPU | Storage | VM (W2019) | Result |
|---|---|---|---|---|---|
| 8.0.4 | 6.2.16-15-pve | Dual Xeon E5-2696 v3 | ZFS RAID1 (Enterprise NVMe) | 64GB RAM + 32 cores | CPU problems |
| 8.0.4 | 6.2.16-12-pve | Dual Xeon E5-2696 v3 | ZFS RAID1 (Enterprise NVMe) | 16GB RAM + 16 cores | No issues |
| 8.0.4 | 6.2.16-15-pve | Dual Xeon Silver 4208 | LVM (SAS SSD hardware RAID) | 32GB RAM + 16 cores | No issues |
| 8.0.4 | 6.2.16-12-pve | Dual Xeon X5670 | LVM (SAS SSD hardware RAID) | 24GB RAM + 12 cores | No issues |

Thanks @Whatever for your invaluable help!
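The steps above can be collected into a small script that is safe to re-run. A sketch assuming a proxmox-boot-tool managed host (such as ZFS root) where /etc/kernel/cmdline is a single line; `add_kernel_cmdline_opt` is a hypothetical helper, and the system is only modified when the script is run with `--apply`.

```shell
#!/usr/bin/env bash
# Sketch: append an option to the single-line kernel cmdline file, only if missing.
add_kernel_cmdline_opt() {
  local file=$1 opt=$2 line
  if ! tr -s ' ' '\n' < "$file" | grep -qxF -- "$opt"; then
    # Join to one line and drop trailing whitespace before appending.
    line=$(tr -d '\n' < "$file" | sed 's/[[:space:]]*$//')
    printf '%s %s\n' "$line" "$opt" > "$file"
  fi
}

# Only touch the real system when explicitly asked to (run as root):
if [ "${1:-}" = "--apply" ]; then
  add_kernel_cmdline_opt /etc/kernel/cmdline mitigations=off
  proxmox-boot-tool refresh          # write the updated cmdline to the boot partition(s)
  systemctl disable --now ksmtuned   # stop and disable the KSM tuning daemon
  echo "Now reboot the host to boot with the new command line."
fi
```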

| Host | Status | CPU(s) | Kernel version | VM |
|---|---|---|---|---|
| Host1 | problems | 56 x Intel(R) Xeon(R) CPU E5-2690 v4 @ 2.60GHz (2 sockets) | Linux 6.2.16-14-pve #1 SMP PREEMPT_DYNAMIC PMX 6.2.16-14 (2023-09-19T08:17Z) | W2019 (96GB RAM + 42 cores, NUMA) |
| Host2 | problems | 48 x Intel(R) Xeon(R) CPU E5-2697 v2 @ 2.70GHz (2 sockets) | Linux 6.2.16-15-pve #1 SMP PREEMPT_DYNAMIC PMX 6.2.16-15 (2023-09-28T13:53Z) | W2019 (128GB RAM + 32 cores, NUMA) |

For the sake of completeness: the server and its services are closely monitored. I don't have exact ping numbers, but the access times shoot up as soon as I move the VM to the cluster node with kernel 6.2.x or restart it there.

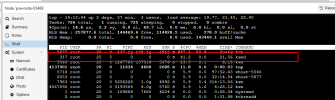

One more observation:

Disabling KSM requires rebooting the host because stopping KSM service (and/or disabling it) does not really stop it.

ksmd becomes ghost and cannot be killed (I didnt manage to kill it)

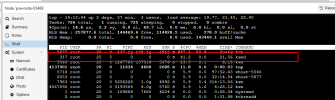

This screen shows: kernel with mitigations=off and ksdtuned stopped and disabled (but host is not yet rebooted)

Disabling KSM requires rebooting the host because stopping KSM service (and/or disabling it) does not really stop it.

ksmd becomes ghost and cannot be killed (I didnt manage to kill it)

This screen shows: kernel with mitigations=off and ksdtuned stopped and disabled (but host is not yet rebooted)
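Rather than relying on the state of the `ksmtuned` unit, KSM's own sysfs knobs under /sys/kernel/mm/ksm show whether merging is active. Per the kernel's KSM documentation, `run` is 0 (ksmd stopped, merged pages kept), 1 (ksmd running) or 2 (ksmd stopped and all merged pages unmerged). A sketch; `ksm_state` is a hypothetical name, its optional argument exists only for testing, and whether writing 2 avoids the reboot described above was not tested in this thread.

```shell
#!/usr/bin/env bash
# Sketch: report KSM state from sysfs. ksmd is a kernel thread, so it remains
# in the process list; "run" and "pages_shared" show whether it is doing anything.
ksm_state() {
  local dir=${1:-/sys/kernel/mm/ksm} run shared
  run=$(cat "$dir/run")
  shared=$(cat "$dir/pages_shared")
  case $run in
    0) echo "stopped (pages_shared=$shared)" ;;
    1) echo "running (pages_shared=$shared)" ;;
    2) echo "stopped, pages unmerged (pages_shared=$shared)" ;;
    *) echo "unknown run value: $run" ;;
  esac
}

# Possible alternative to a reboot (untested here), as root:
#   echo 2 > /sys/kernel/mm/ksm/run   # stop ksmd and unmerge all shared pages
#   ksm_state
```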

This seems to be getting some love from Proxmox over on this thread: https://forum.proxmox.com/threads/ksm-memory-sharing-not-working-as-expected-on-6-2-x-kernel.131082/