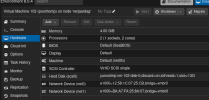

Today, after a server reboot, I was unable to start any FreeBSD-based OS (I tried OPNsense and pfSense) on Proxmox version 8.0.4. Both VMs got stuck at boot while loading CAM. I also checked my second (playground) server, which suffered from the same issue.

What solved the issue was rolling back the last 8 package updates installed by apt. I ran:

- apt install libpve-rs-perl=0.8.5

- apt install pve-qemu-kvm=8.0.2-7

- apt install libpve-storage-perl=8.0.2

- apt install ifupdown2=3.2.0-1+pmx5

- apt install pve-xtermjs=4.16.0-3

- apt install qemu-server=8.0.7

- apt install libpve-http-server-perl=5.0.4

- apt install libpve-common-perl=8.0.9
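For anyone who wants to apply the same rollback in one go, the eight downgrades can be combined into a single `apt install` call. This is only a minimal sketch: the package versions are copied from the list above, while the `PKGS`, `CMD`, and `RUN` names are my own and purely illustrative. By default the script only prints the command, so you can review it before running anything as root. On a Debian-based system the recent upgrades can usually be cross-checked in `/var/log/apt/history.log`.

```shell
#!/bin/sh
# Package versions copied from the list above (the state before the update).
# PKGS, CMD and RUN are illustrative names, not part of any Proxmox tooling.
PKGS="libpve-rs-perl=0.8.5 pve-qemu-kvm=8.0.2-7 \
libpve-storage-perl=8.0.2 ifupdown2=3.2.0-1+pmx5 \
pve-xtermjs=4.16.0-3 qemu-server=8.0.7 \
libpve-http-server-perl=5.0.4 libpve-common-perl=8.0.9"

# Build one apt call that pins every package to its older version.
CMD="apt install $PKGS"

# Dry run by default: print the command. Set RUN=1 to actually execute it.
if [ "${RUN:-0}" = "1" ]; then
    $CMD
else
    echo "$CMD"
fi
```

Running it as `RUN=1 sh rollback.sh` (the file name is just an example) would perform the downgrade; apt should still ask for confirmation before replacing newer packages with older ones.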

I don't know which package caused the issue, but I still wanted to report it.