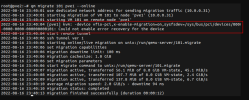

2024-04-05T17:40:34.214650+10:00 DEV3 systemd[1]: Started session-323.scope - Session 323 of User root.

2024-04-05T17:40:35.717408+10:00 DEV3 qm[2866488]: <root@pam> starting task UPID:DEV3:002BBD39:044EA2E9:660FAAF3:qmstart:100:root@pam:

2024-04-05T17:40:35.718014+10:00 DEV3 qm[2866489]: start VM 100: UPID:DEV3:002BBD39:044EA2E9:660FAAF3:qmstart:100:root@pam:

2024-04-05T17:40:35.898306+10:00 DEV3 kernel: [722616.748068] nvidia-vgpu-vfio 00000000-0000-0000-0000-000000000100: Adding to iommu group 97

2024-04-05T17:40:36.047105+10:00 DEV3 systemd[1]: Started 100.scope.

2024-04-05T17:40:37.610297+10:00 DEV3 kernel: [722618.457164] tap100i0: entered promiscuous mode

2024-04-05T17:40:37.726306+10:00 DEV3 kernel: [722618.574823] vmbr0: port 1(fwpr100p0) entered blocking state

2024-04-05T17:40:37.726340+10:00 DEV3 kernel: [722618.574837] vmbr0: port 1(fwpr100p0) entered disabled state

2024-04-05T17:40:37.726345+10:00 DEV3 kernel: [722618.574898] fwpr100p0: entered allmulticast mode

2024-04-05T17:40:37.726349+10:00 DEV3 kernel: [722618.575030] fwpr100p0: entered promiscuous mode

2024-04-05T17:40:37.726353+10:00 DEV3 kernel: [722618.575161] vmbr0: port 1(fwpr100p0) entered blocking state

2024-04-05T17:40:37.726358+10:00 DEV3 kernel: [722618.575166] vmbr0: port 1(fwpr100p0) entered forwarding state

2024-04-05T17:40:37.750308+10:00 DEV3 kernel: [722618.598775] fwbr100i0: port 1(fwln100i0) entered blocking state

2024-04-05T17:40:37.750344+10:00 DEV3 kernel: [722618.598788] fwbr100i0: port 1(fwln100i0) entered disabled state

2024-04-05T17:40:37.750348+10:00 DEV3 kernel: [722618.598843] fwln100i0: entered allmulticast mode

2024-04-05T17:40:37.750350+10:00 DEV3 kernel: [722618.599036] fwln100i0: entered promiscuous mode

2024-04-05T17:40:37.750353+10:00 DEV3 kernel: [722618.599150] fwbr100i0: port 1(fwln100i0) entered blocking state

2024-04-05T17:40:37.750380+10:00 DEV3 kernel: [722618.599156] fwbr100i0: port 1(fwln100i0) entered forwarding state

2024-04-05T17:40:37.774300+10:00 DEV3 kernel: [722618.623041] fwbr100i0: port 2(tap100i0) entered blocking state

2024-04-05T17:40:37.774325+10:00 DEV3 kernel: [722618.623052] fwbr100i0: port 2(tap100i0) entered disabled state

2024-04-05T17:40:37.774329+10:00 DEV3 kernel: [722618.623110] tap100i0: entered allmulticast mode

2024-04-05T17:40:37.774332+10:00 DEV3 kernel: [722618.623298] fwbr100i0: port 2(tap100i0) entered blocking state

2024-04-05T17:40:37.774335+10:00 DEV3 kernel: [722618.623303] fwbr100i0: port 2(tap100i0) entered forwarding state

2024-04-05T17:40:37.887943+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_env_log: vmiop-env: guest_max_gpfn:0x0

2024-04-05T17:40:37.888181+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_env_log: (0x0): Received start call from nvidia-vgpu-vfio module: mdev uuid 00000000-0000-0000-0000-000000000100 GPU >

2024-04-05T17:40:37.888703+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_env_log: (0x0): pluginconfig: vgpu_type_id=63

2024-04-05T17:40:37.893382+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_env_log: Successfully updated env symbols!

2024-04-05T17:40:37.898304+10:00 DEV3 kernel: [722618.746481] NVRM: Software scheduler timeslice set to 2083uS.

2024-04-05T17:40:37.898499+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: (0x0): gpu-pci-id : 0x8300

2024-04-05T17:40:37.898613+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: (0x0): vgpu_type : Quadro

2024-04-05T17:40:37.898705+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: (0x0): Framebuffer: 0x38000000

2024-04-05T17:40:37.898803+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: (0x0): Virtual Device Id: 0x1bb3:0x1204

2024-04-05T17:40:37.898877+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: (0x0): FRL Value: 60 FPS

2024-04-05T17:40:37.898960+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: ######## vGPU Manager Information: ########

2024-04-05T17:40:37.899046+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: Driver Version: 535.104.06

2024-04-05T17:40:37.899131+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: (0x0): Detected ECC enabled on physical GPU.

2024-04-05T17:40:37.899218+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: (0x0): Guest usable FB size is reduced due to ECC.

2024-04-05T17:40:37.902992+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: (0x0): vGPU supported range: (0x70001, 0x120001)

2024-04-05T17:40:37.948013+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: (0x0): Init frame copy engine: syncing...

2024-04-05T17:40:38.022556+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: (0x0): vGPU migration enabled

2024-04-05T17:40:38.058949+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: (0x0): vGPU manager is running in non-SRIOV mode.

2024-04-05T17:40:38.064635+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: display_init inst: 0 successful

2024-04-05T17:40:38.074290+10:00 DEV3 kernel: [722618.922608] [nvidia-vgpu-vfio] 00000000-0000-0000-0000-000000000100: vGPU migration enabled with upstream V2 migration protocol

2024-04-05T17:40:38.314880+10:00 DEV3 qm[2866488]: <root@pam> end task UPID:DEV3:002BBD39:044EA2E9:660FAAF3:qmstart:100:root@pam: OK

2024-04-05T17:40:38.363846+10:00 DEV3 systemd[1]: session-323.scope: Deactivated successfully.

2024-04-05T17:40:38.364013+10:00 DEV3 systemd[1]: session-323.scope: Consumed 1.809s CPU time.

2024-04-05T17:40:38.922660+10:00 DEV3 systemd[1]: Started session-324.scope - Session 324 of User root.

2024-04-05T17:40:40.375557+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_env_log: (0x0): Plugin migration stage change none -> resume. QEMU migration state: RESUME

2024-04-05T17:40:55.837677+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: (0x0): Start restoring vGPU state ...

2024-04-05T17:40:55.837982+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: Source host driver version: 535.104.06

2024-04-05T17:40:55.958828+10:00 DEV3 nvidia-vgpu-mgr[2866699]: error: vmiop_log: Assertion Failed at 0x97f0d3c2:2372

2024-04-05T17:40:55.959401+10:00 DEV3 nvidia-vgpu-mgr[2866699]: error: vmiop_log: 8 frames returned by backtrace

2024-04-05T17:40:55.959548+10:00 DEV3 nvidia-vgpu-mgr[2866699]: error: vmiop_log: /lib/x86_64-linux-gnu/libnvidia-vgpu.so(_nv009111vgpu+0x35) [0x7f0397ec5675]

2024-04-05T17:40:55.959666+10:00 DEV3 nvidia-vgpu-mgr[2866699]: error: vmiop_log: /lib/x86_64-linux-gnu/libnvidia-vgpu.so(_nv011623vgpu+0x180) [0x7f0397ed4c10]

2024-04-05T17:40:55.959758+10:00 DEV3 nvidia-vgpu-mgr[2866699]: error: vmiop_log: /lib/x86_64-linux-gnu/libnvidia-vgpu.so(_nv011633vgpu+0x3e2) [0x7f0397f0d3c2]

2024-04-05T17:40:55.959851+10:00 DEV3 nvidia-vgpu-mgr[2866699]: error: vmiop_log: /lib/x86_64-linux-gnu/libnvidia-vgpu.so(_nv004320vgpu+0xcf) [0x7f0397ed6b6f]

2024-04-05T17:40:55.959942+10:00 DEV3 nvidia-vgpu-mgr[2866699]: error: vmiop_log: /lib/x86_64-linux-gnu/libnvidia-vgpu.so(_nv011566vgpu+0x4b) [0x7f0397e69adb]

2024-04-05T17:40:55.960033+10:00 DEV3 nvidia-vgpu-mgr[2866699]: error: vmiop_log: vgpu(+0x167e1) [0x563932c167e1]

2024-04-05T17:40:55.960127+10:00 DEV3 nvidia-vgpu-mgr[2866699]: error: vmiop_log: /lib/x86_64-linux-gnu/libc.so.6(+0x89044) [0x7f03984d7044]

2024-04-05T17:40:55.960219+10:00 DEV3 nvidia-vgpu-mgr[2866699]: error: vmiop_log: /lib/x86_64-linux-gnu/libc.so.6(+0x10961c) [0x7f039855761c]

2024-04-05T17:40:55.960312+10:00 DEV3 nvidia-vgpu-mgr[2866699]: notice: vmiop_log: (0x0): Migration Ended

2024-04-05T17:40:55.960408+10:00 DEV3 nvidia-vgpu-mgr[2866699]: error: vmiop_env_log: (0x0): Failed to write device buffer err: 0x1f

2024-04-05T17:40:55.962296+10:00 DEV3 kernel: [722636.810144] [nvidia-vgpu-vfio] 00000000-0000-0000-0000-000000000100: Failed to write device data -5

2024-04-05T17:40:55.963109+10:00 DEV3 QEMU[2866502]: kvm: error while loading state section id 59(0000:00:10.0/vfio)

2024-04-05T17:40:55.963620+10:00 DEV3 QEMU[2866502]: kvm: load of migration failed: Input/output error

2024-04-05T17:40:56.422270+10:00 DEV3 kernel: [722637.271571] tap100i0: left allmulticast mode

2024-04-05T17:40:56.422302+10:00 DEV3 kernel: [722637.271649] fwbr100i0: port 2(tap100i0) entered disabled state

2024-04-05T17:40:56.458295+10:00 DEV3 kernel: [722637.307397] fwbr100i0: port 1(fwln100i0) entered disabled state

2024-04-05T17:40:56.458317+10:00 DEV3 kernel: [722637.307595] vmbr0: port 1(fwpr100p0) entered disabled state

2024-04-05T17:40:56.458334+10:00 DEV3 kernel: [722637.308154] fwln100i0 (unregistering): left allmulticast mode

2024-04-05T17:40:56.458339+10:00 DEV3 kernel: [722637.308161] fwln100i0 (unregistering): left promiscuous mode

2024-04-05T17:40:56.458343+10:00 DEV3 kernel: [722637.308167] fwbr100i0: port 1(fwln100i0) entered disabled state

2024-04-05T17:40:56.502301+10:00 DEV3 kernel: [722637.348766] fwpr100p0 (unregistering): left allmulticast mode

2024-04-05T17:40:56.502322+10:00 DEV3 kernel: [722637.348774] fwpr100p0 (unregistering): left promiscuous mode

2024-04-05T17:40:56.502328+10:00 DEV3 kernel: [722637.348778] vmbr0: port 1(fwpr100p0) entered disabled state

2024-04-05T17:40:56.618972+10:00 DEV3 systemd[1]: Started session-325.scope - Session 325 of User root.

2024-04-05T17:40:56.856328+10:00 DEV3 systemd[1]: 100.scope: Deactivated successfully.

2024-04-05T17:40:56.856645+10:00 DEV3 systemd[1]: 100.scope: Consumed 6.240s CPU time.

2024-04-05T17:40:58.147517+10:00 DEV3 qm[2866793]: <root@pam> starting task UPID:DEV3:002BBE81:044EABAC:660FAB0A:qmstop:100:root@pam:

2024-04-05T17:40:58.148090+10:00 DEV3 qm[2866817]: stop VM 100: UPID:DEV3:002BBE81:044EABAC:660FAB0A:qmstop:100:root@pam:

2024-04-05T17:41:08.170305+10:00 DEV3 kernel: [722649.017065] nvidia-vgpu-vfio 00000000-0000-0000-0000-000000000100: Removing from iommu group 97

2024-04-05T17:41:08.184098+10:00 DEV3 qm[2866793]: <root@pam> end task UPID:DEV3:002BBE81:044EABAC:660FAB0A:qmstop:100:root@pam: OK

2024-04-05T17:41:08.228716+10:00 DEV3 systemd[1]: session-325.scope: Deactivated successfully.

2024-04-05T17:41:08.228908+10:00 DEV3 systemd[1]: session-325.scope: Consumed 1.556s CPU time.

2024-04-05T17:41:08.266559+10:00 DEV3 systemd[1]: session-324.scope: Deactivated successfully.

2024-04-05T17:41:08.266900+10:00 DEV3 systemd[1]: session-324.scope: Consumed 1.380s CPU time.

2024-04-05T17:41:09.255200+10:00 DEV3 pmxcfs[1723]: [status] notice: received log

2024-04-05T17:41:18.375265+10:00 DEV3 systemd[1]: Stopping user@0.service - User Manager for UID 0...

2024-04-05T17:41:18.376578+10:00 DEV3 systemd[2866427]: Activating special unit exit.target...

2024-04-05T17:41:18.376778+10:00 DEV3 systemd[2866427]: Stopped target default.target - Main User Target.

2024-04-05T17:41:18.376952+10:00 DEV3 systemd[2866427]: Stopped target basic.target - Basic System.

2024-04-05T17:41:18.377081+10:00 DEV3 systemd[2866427]: Stopped target paths.target - Paths.

2024-04-05T17:41:18.377207+10:00 DEV3 systemd[2866427]: Stopped target sockets.target - Sockets.

2024-04-05T17:41:18.377330+10:00 DEV3 systemd[2866427]: Stopped target timers.target - Timers.

2024-04-05T17:41:18.377466+10:00 DEV3 systemd[2866427]: Closed dbus.socket - D-Bus User Message Bus Socket.

2024-04-05T17:41:18.377591+10:00 DEV3 systemd[2866427]: Closed dirmngr.socket - GnuPG network certificate management daemon.

2024-04-05T17:41:18.377721+10:00 DEV3 systemd[2866427]: Closed gpg-agent-browser.socket - GnuPG cryptographic agent and passphrase cache (access for web browsers).

2024-04-05T17:41:18.377930+10:00 DEV3 systemd[2866427]: Closed gpg-agent-extra.socket - GnuPG cryptographic agent and passphrase cache (restricted).

2024-04-05T17:41:18.378124+10:00 DEV3 systemd[2866427]: Closed gpg-agent-ssh.socket - GnuPG cryptographic agent (ssh-agent emulation).

2024-04-05T17:41:18.378375+10:00 DEV3 systemd[2866427]: Closed gpg-agent.socket - GnuPG cryptographic agent and passphrase cache.

2024-04-05T17:41:18.378912+10:00 DEV3 systemd[2866427]: Removed slice app.slice - User Application Slice.

2024-04-05T17:41:18.379089+10:00 DEV3 systemd[2866427]: Reached target shutdown.target - Shutdown.

2024-04-05T17:41:18.379239+10:00 DEV3 systemd[2866427]: Finished systemd-exit.service - Exit the Session.
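To line the `qm` task IDs up with the rest of the journal, the UPID strings above can be decoded. The sketch below assumes the usual Proxmox layout `UPID:node:pid:pstart:starttime:type:id:user:`, where `pid`, `pstart` and `starttime` are hex-encoded and `starttime` is a Unix timestamp. The `Upid` class and `parse_upid` function are hypothetical helpers written for illustration, not part of any Proxmox API.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Upid:
    """Fields of a Proxmox task ID (layout assumed, see lead-in)."""
    node: str
    pid: int            # worker PID, hex in the string
    pstart: int         # process start time in clock ticks, hex in the string
    starttime: datetime  # task start, Unix seconds in hex
    task_type: str
    vmid: str
    user: str


def parse_upid(upid: str) -> Upid:
    # A trailing ':' produces an empty last element, so only the first 8 fields are used.
    parts = upid.split(":")
    if len(parts) < 8 or parts[0] != "UPID":
        raise ValueError(f"not a UPID: {upid!r}")
    _, node, pid, pstart, start, task_type, vmid, user = parts[:8]
    return Upid(
        node=node,
        pid=int(pid, 16),
        pstart=int(pstart, 16),
        starttime=datetime.fromtimestamp(int(start, 16), tz=timezone.utc),
        task_type=task_type,
        vmid=vmid,
        user=user,
    )


if __name__ == "__main__":
    start = parse_upid("UPID:DEV3:002BBD39:044EA2E9:660FAAF3:qmstart:100:root@pam:")
    stop = parse_upid("UPID:DEV3:002BBE81:044EABAC:660FAB0A:qmstop:100:root@pam:")
    print(start.pid, start.starttime.isoformat())
    print(stop.pid, (stop.starttime - start.starttime).total_seconds())
```

Decoded this way, the `qmstart` UPID gives PID 2866489 (matching `qm[2866489]`) and a start time of 07:40:35 UTC, i.e. 17:40:35+10:00 as in the journal. The `qmstop` task starts 23 seconds later, right after QEMU reports `load of migration failed: Input/output error`.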