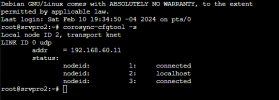

Nodes in my Ceph cluster on Proxmox VE 8.1.4 inexplicably go down. This happens occasionally while a backup is being made of a VM whose storage is in a Ceph pool: the node suddenly becomes unresponsive, and I have to restart it to get it working again.

I have 3 identical nodes.

Could someone please help me with this problem?
