I recently purchased a used R730xd LFF 12-bay (3.5" x 12-bay backplane) that I have installed Proxmox on and plan to use for some VMs (Emby/Jellyfin/Plex/Docker, etc., experimenting) and ZFS storage. Basically, I'm doing both compute and storage on this one box, and it's a sandbox until I get a feel for what my needs are and how I want to continue building out my lab.

Anyhow, the R730xd came with a PERC H730 Mini. Everything that I've read says *DO NOT USE A RAID CARD WITH ZFS, EVEN IF IT IS IN "HBA MODE" - THIS IS A HORRIBLE IDEA*. So I was HBA shopping and asking for some advice on a pure HBA replacement for the H730 when a couple people told me that was ridiculous and that setting the H730 to HBA mode would be perfectly fine. They essentially scoffed at me for even considering buying an HBA card and insisted that the H730 is perfectly fine.

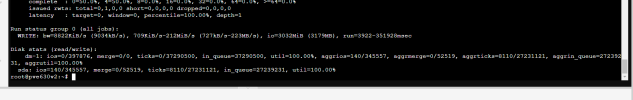

I did some testing, set the H730 to HBA mode, and installed Proxmox. I haven't really set up any pools, as you can see (screenshots attached), or dug into any config; I just wanted to see if all of the drives would show up, and it looks like passthrough is working properly with the PERC H730 Mini in HBA mode. In case you're wondering about the NVMe drives, I have an x16 PCIe adapter card with four 512GB NVMe drives in it, and this board supports bifurcation, so that's what those are.
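For anyone who wants to repeat the "do the drives show up as real drives" check outside the GUI, here is a rough sketch in Python. It parses the JSON that `lsblk --json -o NAME,MODEL,SERIAL,TRAN,TYPE` prints and flags disks that look like controller-made virtual disks rather than passed-through drives. The function names (`read_lsblk`, `list_disks`, `looks_virtual`) are made up for illustration, and the "model starts with PERC or serial is missing" rule is only a heuristic I'm assuming, not an official test. It says nothing about whether HBA mode is safe long term.

```python
import json
import subprocess


def read_lsblk() -> str:
    """Run lsblk and return its JSON output (needs util-linux on the host)."""
    cmd = ["lsblk", "--json", "-o", "NAME,MODEL,SERIAL,TRAN,TYPE"]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def list_disks(lsblk_json: str) -> list:
    """Return whole-disk entries (no partitions, no optical drives)."""
    data = json.loads(lsblk_json)
    return [
        {
            "name": dev.get("name"),
            "model": dev.get("model"),
            "serial": dev.get("serial"),
            "tran": dev.get("tran"),
        }
        for dev in data.get("blockdevices", [])
        if dev.get("type") == "disk"
    ]


def looks_virtual(disks: list) -> list:
    """Heuristic (an assumption, not a guarantee): a disk whose model string
    starts with "PERC", or that reports no serial number, is probably a
    RAID virtual disk and not a passed-through physical drive."""
    flagged = []
    for disk in disks:
        model = (disk["model"] or "").strip().upper()
        if model.startswith("PERC") or not disk["serial"]:
            flagged.append(disk)
    return flagged
```

On the Proxmox host you would call `looks_virtual(list_disks(read_lsblk()))`; an empty list only means each disk reports its own model and serial, which matches what the screenshots show.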

My gut and experience have taught me that, although things may "look OK", risk-taking behavior and going against established protocol is not a good idea. Everywhere I look it says DO NOT simply use a RAID card in "HBA mode". I have the impression that even though the drives are showing up properly, issues I haven't thought of yet can arise later on if I'm not using a true HBA card. I'm super new to ZFS, and I don't want to start out with a dicey config that bends or breaks the rules and pay for it in the long run. I've heard odd things can happen with RAID cards in "HBA mode" with ZFS, like not being able to hot-swap a failed drive in a zpool and have it resilver without rebooting the server.

I realize there are big giant red warning signs that say to ONLY use true HBA cards, and I had probably better listen to them, but I thought I'd check one more time and make sure that using a PERC H730 Mini in HBA mode in this scenario is truly a bad idea.

Thanks!
