Hello everyone,

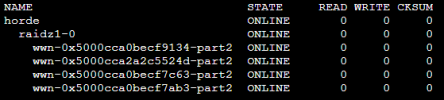

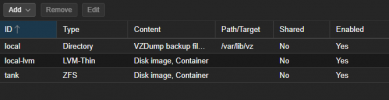

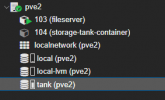

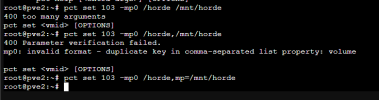

I've been using TrueNAS Core, and then Scale after upgrading, as my NAS solution for the last two years. I recently built a PVE host on a separate system and migrated all my services and apps to it over the last few days. I'm now at the point where I want to migrate my ZFS pool from my TrueNAS Scale server to a second node in my Proxmox cluster. I'm having a hard time finding any guidelines or tutorials on how to do this, but so far I've managed to mount the pool on my second node and I'm able to browse its contents via the shell. Could anyone help me figure out how to make the pool available to other containers (I want to set some up right away to host Photoprism, Plex and Nextcloud), and perhaps restore the ability to browse my data via Samba from my other devices or my personal computer, like I could with TrueNAS before?

PS: I am not interested in running TrueNAS in a VM or container, even though I've seen that suggested a lot.

Any help is greatly appreciated, and pardon my English; it is not my primary language.
