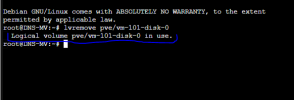

Logical volume pve/vm-101-disk-0 in use.

- Thread starter Niraat

First check that no other VMs or CTs accidentally use this disk.

grep -R vm-101-disk-0 /etc/pve

Second list volumes on your system:

pvesm list local-lvm

Third remove the disk:

pvesm free local-lvm:vm-101-disk-0

Unless you know what you are doing, you shouldn't be manipulating backend storage directly.
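The first check can be narrowed a little. As the grep output later in this thread shows, `/etc/pve/.clusterlog` also matches the volume name and buries the config hits. A minimal sketch, assuming GNU grep (for `--exclude`); the function name `find_disk_refs` and its optional second argument are made up for illustration and are not part of Proxmox:

```shell
#!/bin/sh
# find_disk_refs VOLNAME [CONFDIR]
# Hypothetical helper: print every config line under CONFDIR (default
# /etc/pve) that mentions VOLNAME, skipping the noisy .clusterlog file.
# Returns non-zero when nothing references the volume.
find_disk_refs() {
    grep -R --exclude=.clusterlog -- "$1" "${2:-/etc/pve}"
}

# Example (commented out so that nothing is freed by accident):
# find_disk_refs vm-101-disk-0 || pvesm free local-lvm:vm-101-disk-0
```

If the function prints any line, a guest config still references the disk, and the disk should not be freed before that reference is understood.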

Blockbridge : Ultra low latency all-NVME shared storage for Proxmox - https://www.blockbridge.com/proxmox

https://unix.stackexchange.com/questions/455229/how-to-check-what-is-using-a-logical-volume

https://serverfault.com/questions/753124/unmounted-logical-volume-is-busy

For later reference, to help others: I have working VMs, so I couldn't just take down the entire host. I finally solved it after reading a lot of threads here that gave no help. The "Third remove the disk:" method suggested above didn't work either, as it gave the same error message, that the volume "contains a filesystem in use". That is the same error as in the GUI, with no option to force it (that I knew of).

I couldn't find the ID of this container in the process list (ps -aux), so I couldn't kill it either. The links above didn't help me much either, but they gave me a hint at least.

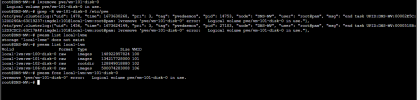

Below you can see the errors I got during the process. I had tried to remove a stopped container several times, but always got the same error.

What solved it for me was this:

mount (to show all mounted container disks)

umount /dev/mapper/local--main-vm--111--disk--0 (where 111 was the ID of the container whose disk was listed in the output of the command above)

Then I could go into the GUI and finally remove it with no errors and no reboot/downtime needed.
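The device name in the umount command follows the device-mapper naming rule: hyphens inside the volume group and logical volume names are doubled, and the two names are joined with a single hyphen. A small sketch of that mapping, so the path can be built for any disk; `dm_path` is a hypothetical helper name, and the `findmnt` call in the comments assumes util-linux is installed:

```shell
#!/bin/sh
# dm_path VG LV
# Hypothetical helper: build the /dev/mapper path for a logical volume by
# doubling every hyphen in the VG and LV names and joining them with "-".
dm_path() {
    vg=$(printf '%s' "$1" | sed 's/-/--/g')
    lv=$(printf '%s' "$2" | sed 's/-/--/g')
    printf '/dev/mapper/%s-%s\n' "$vg" "$lv"
}

# Example (commented out; run only against a stopped container's disk):
# dev=$(dm_path local-main vm-111-disk-0)
# findmnt --source "$dev"    # show where, if anywhere, it is still mounted
# umount "$dev"
```

Checking the mount point first makes it easier to confirm that the leftover mount really belongs to the stopped container before unmounting it.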

Code:

grep -R vm-111-disk-0 /etc/pve

/etc/pve/lxc/111.conf:rootfs: local-main:vm-111-disk-0,size=600G

/etc/pve/local/lxc/111.conf:rootfs: local-main:vm-111-disk-0,size=600G

/etc/pve/.clusterlog:{"uid": 53, "time": 1680012100, "pri": 3, "tag": "pvedaemon", "pid": 2771446, "node": "px1", "user": "root@pam", "msg": "end task UPID:px1:002A783C:6A5FB181:6422F344:vzdestroy:111:root@pam: lvremove 'local-main/vm-111-disk-0' error: Logical volume local-main/vm-111-disk-0 contains a filesystem in use."},

/etc/pve/.clusterlog:{"uid": 44, "time": 1680011720, "pri": 3, "tag": "pvedaemon", "pid": 2771446, "node": "px1", "user": "root@pam", "msg": "end task UPID:px1:002A635F:6A5F1CE9:6422F1C7:vzdestroy:111:root@pam: lvremove 'local-main/vm-111-disk-0' error: Logical volume local-main/vm-111-disk-0 contains a filesystem in use."},

/etc/pve/.clusterlog:{"uid": 42, "time": 1680011540, "pri": 3, "tag": "pvedaemon", "pid": 2771446, "node": "px1", "user": "root@pam", "msg": "end task UPID:px1:002A5AC1:6A5ED69B:6422F113:vzdestroy:111:root@pam: lvremove 'local-main/vm-111-disk-0' error: Logical volume local-main/vm-111-disk-0 contains a filesystem in use."},

/etc/pve/.clusterlog:{"uid": 40, "time": 1680011483, "pri": 3, "tag": "pvedaemon", "pid": 2771446, "node": "px1", "user": "root@pam", "msg": "end task UPID:px1:002A57EF:6A5EC037:6422F0DA:vzdestroy:111:root@pam: lvremove 'local-main/vm-111-disk-0' error: Logical volume local-main/vm-111-disk-0 contains a filesystem in use."},

/etc/pve/.clusterlog:{"uid": 36, "time": 1680011452, "pri": 3, "tag": "pvedaemon", "pid": 2002621, "node": "px1", "user": "root@pam", "msg": "end task UPID:px1:002A569D:6A5EB46B:6422F0BC:vzdestroy:111:root@pam: lvremove 'local-main/vm-111-disk-0' error: Logical volume local-main/vm-111-disk-0 contains a filesystem in use."},

/etc/pve/nodes/px1/lxc/111.conf:rootfs: local-main:vm-111-disk-0,size=600G

For those who come later!

This is all I did with VMs, with no need to restart the node. I hope it helps for CTs too.

- Info:

storage : pve

VM disk : vm-101-disk-0

1. If you don't have VMID:101, create a new VM with that ID. (A new disk, vm-101-disk-1, will be created because vm-101-disk-0 already exists.)

2. If VMID:101 already exists, add it to the config file:

nano /etc/pve/qemu-server/101.conf

add

"unused0: pve:vm-101-disk-0"

save

3. Go to GUI => VMID => Hardware => Unused Disk 0 pve:vm-101-disk-0 => Remove
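If the config already has an `unused0` entry, the new line needs the next free index instead. A rough sketch that only prints the line to paste in and never edits the file; `next_unused_line` is a hypothetical helper name, not a Proxmox tool. If the config contains snapshot sections, the line presumably belongs in the top (current) section rather than at the very end of the file.

```shell
#!/bin/sh
# next_unused_line CONF VOLID
# Hypothetical helper: print an "unusedN: VOLID" line using the first
# index N that does not yet appear anywhere in the config file CONF.
next_unused_line() {
    conf=$1 volid=$2 n=0
    while grep -q "^unused$n:" "$conf"; do
        n=$((n + 1))
    done
    printf 'unused%s: %s\n' "$n" "$volid"
}

# Example:
# next_unused_line /etc/pve/qemu-server/101.conf pve:vm-101-disk-0
```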

Hello, please help me. I got an lvremove 'pve/vm-101-disk-0' error when rolling back a snapshot of an LXC container: "Logical volume pve/vm-101-disk-0 contains a filesystem in use." My native language is Chinese, and I used the browser to translate your post (the mount/umount solution above), but I still don't understand your solution. Can you help me?

Replying to "Hi, Just Restart the Proxmox Server": I tried restarting, but I still can't delete the LXC container. The current situation is that the snapshot cannot be rolled back and the container cannot be deleted.

Another late note for anyone reading this. I had this issue where an old disk was showing in the disks list, but I could not remove it because it said it belonged to an existing VM. I discovered this simple way to remove it.

In the Proxmox terminal, issue the command:

Code:

qm rescan

This will add the disk back to the VM as "Unused". You can then simply go to the VM's Hardware tab and select delete. The disk is then completely removed and deleted from the disks list.
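A hedged sketch for limiting the rescan to one guest and previewing it first. It assumes the `--vmid` and `--dryrun` options described in the `qm` manual page, and that `pct rescan` is the container counterpart with the same options; check `man qm` and `man pct` on your version before relying on either. `rescan_command` is a hypothetical helper that only prints the command and runs nothing.

```shell
#!/bin/sh
# rescan_command VMID [CONFDIR]
# Hypothetical helper: decide from the config location whether VMID is a
# VM or a container, and print a dry-run rescan command for it.
rescan_command() {
    vmid=$1 confdir=${2:-/etc/pve}
    if [ -f "$confdir/qemu-server/$vmid.conf" ]; then
        echo "qm rescan --vmid $vmid --dryrun 1"
    elif [ -f "$confdir/lxc/$vmid.conf" ]; then
        # Assumption: pct accepts the same rescan options as qm.
        echo "pct rescan --vmid $vmid --dryrun 1"
    else
        echo "no config found for guest $vmid" >&2
        return 1
    fi
}

# Example:
# rescan_command 101
```

Dropping the dry-run option from the printed command would then perform the actual rescan.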

Does this command support LXC containers?

Replying to "Hi, Just Restart the Proxmox Server": it worked for me after restarting.