I have a new PVE deployment (PVE 7.2-3) and I am trying to get an X520-DA2 10GbE PCIe NIC to work. Currently the system uses the motherboard NIC, which is 1GbE. I've looked through forum posts, most of which attribute problems with this NIC to "unsupported transceivers". I am using a twinax cable from 10Gtek that is designed for Intel (according to the company). The same twinax cable and NIC are already in use on a different system on my network; there, the X520-DA2 is connected via twinax to the same switch I am trying to connect the new PVE system to. I booted the PVE machine, in its current state, into Windows to verify that the X520-DA2 and twinax cable work as expected. In the Windows environment the card is fully functional:

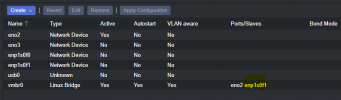

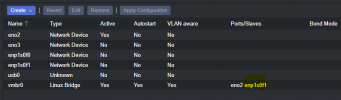

I have added GRUB_CMDLINE_LINUX="ixgbe.allow_unsupported_sfp=1" to /etc/default/grub, but I don't know whether I needed to; I never saw any messages about SFP incompatibility. I have not installed any drivers (though I have been tempted to), since many forum posts indicate that Proxmox has native support for the X520-DA2 PCIe cards.
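For anyone checking the same thing: a change to /etc/default/grub only takes effect after `update-grub` and a reboot, so it may be worth confirming that the option actually reached the running kernel. Below is a minimal sketch, assuming the host boots via GRUB. `has_kernel_param` is a helper name made up for this example, and it simply looks for an exact word in /proc/cmdline.

```shell
#!/bin/sh
# Hypothetical helper: return 0 if the exact word $1 appears on the kernel
# command line. $2 optionally names a file to read instead of /proc/cmdline,
# which makes the function easy to try out on sample text.
has_kernel_param() {
    param=$1
    file=${2:-/proc/cmdline}
    # Pad with spaces so only whole, space-separated words can match.
    case " $(cat "$file") " in
        *" $param "*) return 0 ;;
    esac
    return 1
}

# Example call on a live system:
#   has_kernel_param ixgbe.allow_unsupported_sfp=1 && echo "option is active"
```

If the function returns non-zero after a reboot, the option in /etc/default/grub never made it onto the kernel command line.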

What I am experiencing is that the 'ip address' command shows no interfaces for the X520-DA2, so I cannot configure its ports. I intend to replace the PVE host's current network connection with the 10GbE one. The PCIe card is in the first slot, so according to what I've been reading, enp1s0f0 and enp1s0f1 are the two ports on this NIC. They appear in dmesg and are referenced in the network interfaces file, but they are not present in the 'ip address' output, and they cannot be bound in the GUI either. When I try, I receive the message "No such device" (referencing enp1s0f1 or enp1s0f0, depending on which one I am trying to bridge).
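A quick way to state the symptom precisely is to list which of the expected names the kernel currently exposes as network devices. This is a rough sketch only: `missing_ifaces` and the `SYSFS_NET` override are names invented for this example, and it relies on each live interface having an entry under /sys/class/net.

```shell
#!/bin/sh
# Hypothetical helper: print each interface name given as an argument that
# has no entry in the sysfs network directory. SYSFS_NET may be set to point
# at another directory (useful for trying the function without real devices).
missing_ifaces() {
    sysfs_net=${SYSFS_NET:-/sys/class/net}
    for name in "$@"; do
        [ -e "$sysfs_net/$name" ] || echo "$name"
    done
}

# Example call with the names used in this post:
#   missing_ifaces eno2 enp1s0f0 enp1s0f1
```

Any name it prints is one that a bridge cannot use as a port at that moment.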

With a GUI-bound configuration, executing 'ifreload -a' gives the following error:

# ifreload -a

Code:

error: netlink: enp1s0f1: cannot enslave link enp1s0f1 to vmbr0: operation failed with 'No such device' (19)

error: vmbr0: bridge port enp1s0f1 does not exist

Any help on getting my setup to work would be appreciated.

Here are the configurations and reports most forums were asking for.

# dmesg | grep ixgbe

Code:

[ 1.137988] ixgbe: Intel(R) 10 Gigabit PCI Express Network Driver

[ 1.137992] ixgbe: Copyright (c) 1999-2016 Intel Corporation.

[ 2.329529] ixgbe 0000:01:00.0: Multiqueue Enabled: Rx Queue count = 20, Tx Queue count = 20 XDP Queue count = 0

[ 2.329819] ixgbe 0000:01:00.0: 32.000 Gb/s available PCIe bandwidth (5.0 GT/s PCIe x8 link)

[ 2.330141] ixgbe 0000:01:00.0: MAC: 2, PHY: 1, PBA No: G73129-000

[ 2.330142] ixgbe 0000:01:00.0: a0:36:9f:37:43:e0

[ 2.332911] ixgbe 0000:01:00.0: Intel(R) 10 Gigabit Network Connection

[ 2.501610] ixgbe 0000:01:00.1: Multiqueue Enabled: Rx Queue count = 20, Tx Queue count = 20 XDP Queue count = 0

[ 2.501900] ixgbe 0000:01:00.1: 32.000 Gb/s available PCIe bandwidth (5.0 GT/s PCIe x8 link)

[ 2.502222] ixgbe 0000:01:00.1: MAC: 2, PHY: 14, SFP+: 4, PBA No: G73129-000

[ 2.502224] ixgbe 0000:01:00.1: a0:36:9f:37:43:e2

[ 2.505017] ixgbe 0000:01:00.1: Intel(R) 10 Gigabit Network Connection

[ 10.002508] ixgbe 0000:01:00.1 enp1s0f1: renamed from eth3

[ 10.049440] ixgbe 0000:01:00.0 enp1s0f0: renamed from eth2

[ 14.447287] ixgbe 0000:01:00.0: registered PHC device on enp1s0f0

[ 14.651123] ixgbe 0000:01:00.1: registered PHC device on enp1s0f1

[ 14.845468] ixgbe 0000:01:00.1 enp1s0f1: detected SFP+: 4

[ 15.073653] ixgbe 0000:01:00.1 enp1s0f1: NIC Link is Up 10 Gbps, Flow Control: RX/TX

[ 31.285492] ixgbe 0000:01:00.0: removed PHC on enp1s0f0

[ 31.625309] ixgbe 0000:01:00.0: complete

[ 31.645536] ixgbe 0000:01:00.1: removed PHC on enp1s0f1

[ 31.753280] ixgbe 0000:01:00.1: complete

# lspci -nnk [Manually trimmed to the relevant information]

Code:

01:00.0 Ethernet controller [0200]: Intel Corporation Ethernet 10G 2P X520 Adapter [8086:154d] (rev 01)

Subsystem: Intel Corporation 10GbE 2P X520 Adapter [8086:7b11]

Kernel driver in use: vfio-pci

Kernel modules: ixgbe

01:00.1 Ethernet controller [0200]: Intel Corporation Ethernet 10G 2P X520 Adapter [8086:154d] (rev 01)

Subsystem: Intel Corporation 10GbE 2P X520 Adapter [8086:7b11]

Kernel driver in use: vfio-pci

Kernel modules: ixgbe

# /etc/network/interfaces

Code:

auto lo

iface lo inet loopback

iface eno2 inet manual

iface usb0 inet manual

iface eno3 inet manual

iface enp1s0f0 inet manual

iface enp1s0f1 inet manual

auto vmbr0

iface vmbr0 inet static

address 10.0.100.252/24

gateway 10.0.100.1

bridge-ports eno2 enp1s0f1

bridge-stp off

bridge-fd 0

bridge-vlan-aware yes

bridge-vids 2-4094

# ip a

Code:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno3: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 3c:ec:ef:c0:38:10 brd ff:ff:ff:ff:ff:ff

altname enp4s0

3: eno2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master vmbr0 state UP group default qlen 1000

link/ether 3c:ec:ef:be:9e:12 brd ff:ff:ff:ff:ff:ff

altname enp0s31f6

6: usb0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 4e:41:1c:a6:1a:06 brd ff:ff:ff:ff:ff:ff

7: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 3c:ec:ef:be:9e:12 brd ff:ff:ff:ff:ff:ff

inet 10.0.100.252/24 scope global vmbr0

valid_lft forever preferred_lft forever

inet6 fe80::3eec:efff:febe:9e12/64 scope link

valid_lft forever preferred_lft forever

8: tap100i0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master vmbr0 state UNKNOWN group default qlen 1000

link/ether 82:0e:c6:30:2e:10 brd ff:ff:ff:ff:ff:ff

9: tap101i0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master fwbr101i0 state UNKNOWN group default qlen 1000

link/ether 26:3d:a7:e1:6b:ee brd ff:ff:ff:ff:ff:ff

10: fwbr101i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 9a:b0:9c:fd:c3:2c brd ff:ff:ff:ff:ff:ff

11: fwpr101p0@fwln101i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr0 state UP group default qlen 1000

link/ether 56:b5:4f:ce:75:f8 brd ff:ff:ff:ff:ff:ff

12: fwln101i0@fwpr101p0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master fwbr101i0 state UP group default qlen 1000

link/ether 42:f3:b7:48:e9:36 brd ff:ff:ff:ff:ff:ff

13: tap102i0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master fwbr102i0 state UNKNOWN group default qlen 1000

link/ether 02:98:cc:74:87:f2 brd ff:ff:ff:ff:ff:ff

14: fwbr102i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether aa:cc:55:c0:2b:8a brd ff:ff:ff:ff:ff:ff

15: fwpr102p0@fwln102i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr0 state UP group default qlen 1000

link/ether de:06:36:5d:bc:9e brd ff:ff:ff:ff:ff:ff

16: fwln102i0@fwpr102p0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master fwbr102i0 state UP group default qlen 1000

link/ether 8a:ab:b6:97:57:0a brd ff:ff:ff:ff:ff:ff

17: tap103i0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master fwbr103i0 state UNKNOWN group default qlen 1000

link/ether b6:a0:2f:3a:04:c4 brd ff:ff:ff:ff:ff:ff

18: fwbr103i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 9a:8c:28:27:27:11 brd ff:ff:ff:ff:ff:ff

19: fwpr103p0@fwln103i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr0 state UP group default qlen 1000

link/ether c2:2b:01:61:f6:7b brd ff:ff:ff:ff:ff:ff

20: fwln103i0@fwpr103p0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master fwbr103i0 state UP group default qlen 1000

link/ether 92:1e:ad:4e:9f:8f brd ff:ff:ff:ff:ff:ff
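
For reference, the driver binding in the 'lspci -nnk' listing earlier in this post can be pulled out with a small filter. This is only a sketch: `drivers_in_use` is a made-up helper name, and it assumes the default lspci output format (bus:slot.function at the start of each device line, no domain prefix). Fed the listing from this post, it reports vfio-pci, not ixgbe, as the driver in use for both 01:00.0 and 01:00.1.

```shell
#!/bin/sh
# Hypothetical helper: read `lspci -nnk` output on stdin and print one line
# per device that has a bound driver, in the form "<address> <driver>".
drivers_in_use() {
    awk '
        # A device line starts with bus:slot.function, e.g. "01:00.0 ".
        /^[0-9a-f][0-9a-f]:[0-9a-f][0-9a-f]\.[0-7] / { dev = $1 }
        # The driver name is the last word on the "in use" line.
        /Kernel driver in use:/ { print dev, $NF }
    '
}

# Example call on a live system:
#   lspci -nnk | drivers_in_use
```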