I have 2 HP servers here with the same problem (3 more DL380 servers running an older PVE version work fine):

* SATA and SSD disks work well.

* The SAS RAID is extremely slow. Restoring a backup of a VM with a 30 GB disk using qmrestore takes 5 hours.

Gen8: Smart Array P420i; Gen9: Smart Array P840. Boot: SSD. One server has 8x600 GB SAS in RAID 6 -> /dev/sdb, the other has 4x4 TB SAS, also in RAID 6.

-> The SAS RAID uses LVM-thin.

-> When restoring a VM to the SAS RAID, it starts OK, but after about 50% the speed drops drastically: instead of 1 minute, restoring one VM took 5-8 hours.

-> When restoring to a local SATA disk, performance is OK.

-> No error messages in dmesg or anywhere else.

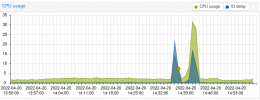

-> CPU, memory etc. are completely OK.

Please help! The only solution I see is to remove the SAS disks and buy SSDs. But could this be a problem with PVE 7?

root@taurus:# pveperf

CPU BOGOMIPS: 207865.20

REGEX/SECOND: 2778674

HD SIZE: 93.93 GB (/dev/mapper/pve-root)

BUFFERED READS: 154.11 MB/sec

AVERAGE SEEK TIME: 13.19 ms

FSYNCS/SECOND: 18.91

DNS EXT: 24.50 ms

DNS INT: 29.17 ms (vipweb.at)
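
To put the restore time into numbers, here is a rough calculation of the effective throughput. It is only a sketch and assumes that "30 GB" means 30 * 1000 MB and that the restore ran for the full 5 hours. The comparison uses the BUFFERED READS value from the pveperf run above, which was measured on /dev/mapper/pve-root and not on the SAS array, so it is only a ballpark reference.

```python
# Effective throughput of the slow restore (assumed: 30 GB = 30 * 1000 MB, 5 hours).
size_mb = 30 * 1000
duration_s = 5 * 3600

throughput = size_mb / duration_s  # MB/s
print(f"restore throughput: {throughput:.2f} MB/s")

# BUFFERED READS from pveperf (measured on pve-root, not on the SAS array).
buffered_reads = 154.11  # MB/s
ratio = buffered_reads / throughput
print(f"about {ratio:.0f}x below the buffered read rate")
```

So the restore runs at under 2 MB/s, which is far below what even a single spinning disk should deliver for sequential writes.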
