I have two Mellanox ConnectX-3 10GbE NICs installed on each Proxmox server. ethtool reports the NIC link speed as 10000Mb/s (10Gb/s). However, iperf tests only reach about 941 Mbits/sec. What am I missing?

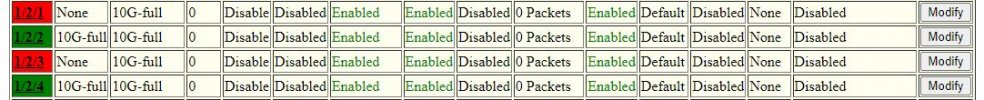

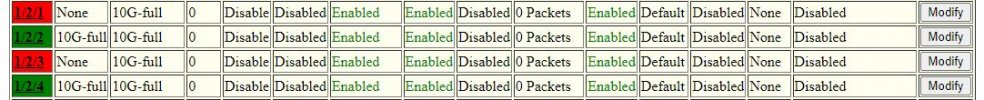

Switch port at 10G:

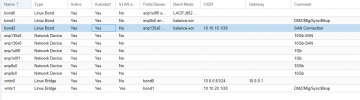

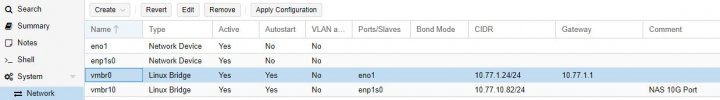

Edited: added network configuration and switch port status

Code:

# iperf -s

------------------------------------------------------------

Server listening on TCP port 5001

TCP window size: 128 KByte (default)

------------------------------------------------------------

[ 4] local 10.77.1.82 port 5001 connected with 10.77.1.81 port 35467

[ ID] Interval Transfer Bandwidth

[ 4] 0.0-10.0 sec 1.10 GBytes 939 Mbits/sec
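As a sanity check on that number, here is a rough Python sketch of the theoretical TCP goodput for a given line rate and MTU. It is only arithmetic, not something from my setup: it assumes standard Ethernet framing with no VLAN tag, IPv4, and the TCP timestamp option enabled (the Linux default). The function name `tcp_goodput_mbps` is just a label I made up. The result for a 1GbE link at MTU 1500 lands almost exactly on what iperf reports, which makes me suspect the traffic is crossing a 1GbE path somewhere rather than the 10G link.

```python
# Theoretical TCP goodput on Ethernet: TCP payload bytes per frame divided by
# the bytes that frame occupies on the wire, times the line rate.
# Assumptions: untagged Ethernet, IPv4 without options, TCP timestamps on.

ETH_OVERHEAD = 7 + 1 + 14 + 4 + 12  # preamble, SFD, header, FCS, inter-frame gap
IP_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMPS = 12  # timestamp option plus padding


def tcp_goodput_mbps(line_rate_mbps: float, mtu: int, timestamps: bool = True) -> float:
    """Return the approximate maximum TCP payload rate in Mbit/s."""
    tcp_overhead = TCP_HEADER + (TCP_TIMESTAMPS if timestamps else 0)
    payload = mtu - IP_HEADER - tcp_overhead
    wire_bytes = mtu + ETH_OVERHEAD
    return line_rate_mbps * payload / wire_bytes


if __name__ == "__main__":
    for rate, mtu in [(1000, 1500), (10000, 1500), (10000, 9000)]:
        print(f"{rate} Mb/s link, MTU {mtu}: {tcp_goodput_mbps(rate, mtu):.0f} Mbit/s")
```

By the same arithmetic, a 10G link with MTU 9000 should top out at roughly 9900 Mbit/s, so a result near 940 Mbit/s is nowhere near what the 10G path could deliver.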

Code:

# lspci

01:00.0 Network controller: Mellanox Technologies MT27500 Family [ConnectX-3]

Code:

# ethtool enp1s0

Settings for enp1s0:

Supported ports: [ FIBRE ]

Supported link modes: 1000baseKX/Full

10000baseKX4/Full

10000baseKR/Full

40000baseCR4/Full

40000baseSR4/Full

56000baseCR4/Full

56000baseSR4/Full

Supported pause frame use: Symmetric Receive-only

Supports auto-negotiation: Yes

Supported FEC modes: Not reported

Advertised link modes: 1000baseKX/Full

10000baseKX4/Full

10000baseKR/Full

40000baseCR4/Full

40000baseSR4/Full

Advertised pause frame use: Symmetric

Advertised auto-negotiation: Yes

Advertised FEC modes: Not reported

Speed: 10000Mb/s

Duplex: Full

Port: Direct Attach Copper

PHYAD: 0

Transceiver: internal

Auto-negotiation: off

Supports Wake-on: d

Wake-on: d

Current message level: 0x00000014 (20)

link ifdown

Link detected: yes

Code:

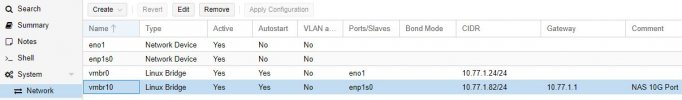

~# cat /etc/network/interfaces

auto lo

iface lo inet loopback

iface eno1 inet manual

iface enp1s0 inet manual

mtu 9000

auto vmbr0

iface vmbr0 inet static

address 10.77.1.24/24

bridge-ports eno1

bridge-stp off

bridge-fd 0

auto vmbr10

iface vmbr10 inet static

address 10.77.1.82/24

gateway 10.77.1.1

bridge-ports enp1s0

bridge-stp off

bridge-fd 0

mtu 9000

#NAS 10G Port

Code:

# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr0 state UP group default qlen 1000

link/ether 10:98:36:b5:48:ed brd ff:ff:ff:ff:ff:ff

3: eno2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr1 state UP group default qlen 1000

link/ether 10:98:36:b5:48:ee brd ff:ff:ff:ff:ff:ff

4: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000

link/ether 00:02:c9:37:c6:10 brd ff:ff:ff:ff:ff:ff

inet 10.77.1.81/24 scope global enp1s0

valid_lft forever preferred_lft forever

inet6 fe80::202:c9ff:fe37:c610/64 scope link

valid_lft forever preferred_lft forever
