```
root@srv2:~# ha-manager status -v
quorum OK
master srv2 (active, Thu Oct 7 20:45:47 2021)
lrm srv1 (active, Thu Oct 7 20:45:44 2021)
lrm srv2 (active, Thu Oct 7 20:45:52 2021)
lrm srv3 (active, Thu Oct 7 20:45:44 2021)
service ct:107 (srv3, stopped)
service ct:110 (srv2, stopped)
service ct:111 (srv2, started)
service ct:112 (srv3, started)
```

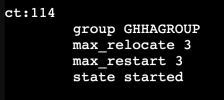

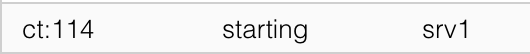

```
service ct:114 (srv2, started)
service ct:115 (srv2, stopped)
service ct:116 (srv2, started)
service ct:117 (srv2, stopped)
service ct:119 (srv2, started)
service ct:120 (srv2, stopped)
service ct:121 (srv2, stopped)
service ct:122 (srv2, started)
service ct:124 (srv2, stopped)
service ct:125 (srv2, started)
service ct:126 (srv2, started)
service ct:151 (srv3, started)
service vm:100 (srv2, stopped)
service vm:101 (srv3, started)
service vm:102 (srv3, stopped)
service vm:103 (srv3, started)
service vm:104 (srv2, started)
service vm:105 (srv3, stopped)
service vm:106 (srv3, started)
service vm:108 (srv2, started)
service vm:109 (srv3, started)
service vm:113 (srv3, started)
service vm:118 (srv3, started)
service vm:127 (srv2, stopped)
service vm:152 (srv3, started)
full cluster state:
{
   "lrm_status" : {
      "srv1" : {
         "mode" : "active",
         "results" : {
            "IttCKFmEqRR0oQppcCK/dw" : {
               "exit_code" : 0,
               "sid" : "vm:118",
               "state" : "started"
            },
            "pVtvNn/ke6wGqbPy8T00SQ" : {
               "exit_code" : 0,
               "sid" : "ct:120",
               "state" : "stopped"
            }
         },
         "state" : "active",
         "timestamp" : 1633632344
      },
      "srv2" : {
         "mode" : "active",
         "results" : {
            "5gZ+cGO2HAca6BHjQLwOSQ" : {
               "exit_code" : 0,
               "sid" : "ct:111",
               "state" : "started"
            },
            "8jU8EMFHFCmE3gkNGMG1cw" : {
               "exit_code" : 0,
               "sid" : "ct:114",
               "state" : "started"
            },
            "Bg1b/Fq7ZIn2Up8t9jvHZg" : {
               "exit_code" : 0,
               "sid" : "ct:115",
               "state" : "stopped"
            },
            "Cun557sFiGdukKHrNINgyw" : {
               "exit_code" : 0,
               "sid" : "ct:121",
               "state" : "stopped"
            },
            "EFg5yCXr5ixVyl0rgGtdiw" : {
               "exit_code" : 0,
               "sid" : "ct:119",
               "state" : "started"
            },
            "HFbk3wL93BZyICge4V/D4w" : {
               "exit_code" : 0,
               "sid" : "vm:100",
               "state" : "stopped"
            },
            "KUs7Mw6xhjmIcFGF/c7UCA" : {
               "exit_code" : 0,
               "sid" : "ct:116",
               "state" : "started"
            },
            "MU7FkOF+ggDsi/NzG2/WCA" : {
               "exit_code" : 0,
               "sid" : "ct:120",
               "state" : "stopped"
            },
            "NYHWPcZ9Z1d6G/ih0GBJyw" : {
               "exit_code" : 0,
               "sid" : "ct:125",
               "state" : "started"
            },
            "NvVKVQ2m0BSOBuyX65XtjA" : {
               "exit_code" : 0,
               "sid" : "ct:126",
               "state" : "started"
            },
            "Qm+sOIYL3SCtelhOHYLtmg" : {
               "exit_code" : 0,
               "sid" : "vm:108",
               "state" : "started"
            },
            "cBwwULZhFhwb80/aqYA/aw" : {
               "exit_code" : 0,
               "sid" : "ct:117",
               "state" : "stopped"
            },
            "gbAws1VvZW6/oXUfluiGbg" : {
               "exit_code" : 0,
               "sid" : "vm:127",
               "state" : "stopped"
            },
            "hgIGXFjTY+VdL0uT6TJ27g" : {
               "exit_code" : 0,
               "sid" : "ct:110",
               "state" : "stopped"
            },
            "j5A0UFAbwzbcroDQs/M8Zw" : {
               "exit_code" : 0,
               "sid" : "ct:122",
               "state" : "started"
            },
            "sqfexfgijfMdVcL+Zl98Lw" : {
               "exit_code" : 0,
               "sid" : "ct:124",
               "state" : "stopped"
            },
            "u0wlhH07y73mOIwQFlpECA" : {
               "exit_code" : 0,
               "sid" : "vm:104",
               "state" : "started"
            }
         },
         "state" : "active",
         "timestamp" : 1633632352
      },
      "srv3" : {
         "mode" : "active",
         "results" : {
            "7eMlItnAtPgHJr10Leml3A" : {
               "exit_code" : 0,
               "sid" : "vm:101",
               "state" : "started"
            },
            "94E3RtSxW3ckMqV1YhP3Lw" : {
               "exit_code" : 0,
               "sid" : "vm:106",
               "state" : "started"
            },
            "Av+CNxI2ZzxR5e1Vqybp0A" : {
               "exit_code" : 0,
               "sid" : "vm:113",
               "state" : "started"
            },
            "Io8L9xr3064Dc3Y1P4IcZQ" : {
               "exit_code" : 0,
               "sid" : "vm:102",
               "state" : "stopped"
            },
            "JYRFepCENRlJKRwprdK2pQ" : {
               "exit_code" : 0,
               "sid" : "vm:118",
               "state" : "started"
            },
            "PU57sfk+pF7GCteIPJ7scg" : {
               "exit_code" : 0,
               "sid" : "ct:112",
               "state" : "started"
            },
            "SWBdzgnNiNOugcS1nQHnHw" : {
               "exit_code" : 0,
               "sid" : "vm:103",
               "state" : "started"
            },
            "TOq09/GoFHvV5FtH9ah8lw" : {
               "exit_code" : 0,
               "sid" : "vm:105",
               "state" : "stopped"
            },
            "kjeaA8o17lNQVMvjOMMFJw" : {
               "exit_code" : 0,
               "sid" : "ct:107",
               "state" : "stopped"
            },
            "o8A4biCqJ4FfWvrDponYOA" : {
               "exit_code" : 0,
               "sid" : "vm:152",
               "state" : "started"
            },
            "r6e7M2RvXRueEj7y2T5I+g" : {
               "exit_code" : 0,
               "sid" : "ct:151",
               "state" : "started"
            },
            "wj4TZ9V9nMg2eI36//EhcQ" : {
               "exit_code" : 0,
               "sid" : "vm:109",
               "state" : "started"
            }
         },
         "state" : "active",
         "timestamp" : 1633632344
      }
   },
   "manager_status" : {
      "master_node" : "srv2",
      "node_status" : {
         "srv1" : "fence",
         "srv2" : "online",
         "srv3" : "online"
      },
      "service_status" : {
         "ct:107" : {
            "node" : "srv3",
            "state" : "stopped",
            "uid" : "kjeaA8o17lNQVMvjOMMFJw"
         },
         "ct:110" : {
            "node" : "srv2",
            "state" : "stopped",
            "uid" : "hgIGXFjTY+VdL0uT6TJ27g"
         },
         "ct:111" : {
            "node" : "srv2",
            "running" : 1,
            "state" : "started",
            "uid" : "5gZ+cGO2HAca6BHjQLwOSQ"
         },
         "ct:112" : {
            "node" : "srv3",
            "running" : 1,
            "state" : "started",
            "uid" : "lSlMUFTHf/ynyN8j0DyNjA"
         },
         "ct:114" : {
            "node" : "srv2",
            "running" : 1,
            "state" : "started",
            "uid" : "8jU8EMFHFCmE3gkNGMG1cw"
         },
         "ct:115" : {
            "node" : "srv2",
            "state" : "stopped",
            "uid" : "Bg1b/Fq7ZIn2Up8t9jvHZg"
         },
         "ct:116" : {
            "node" : "srv2",
            "running" : 1,
            "state" : "started",
            "uid" : "KUs7Mw6xhjmIcFGF/c7UCA"
         },
         "ct:117" : {
            "node" : "srv2",
            "state" : "stopped",
            "uid" : "cBwwULZhFhwb80/aqYA/aw"
         },
         "ct:119" : {
            "node" : "srv2",
            "running" : 1,
            "state" : "started",
            "uid" : "EFg5yCXr5ixVyl0rgGtdiw"
         },
         "ct:120" : {
            "node" : "srv2",
            "state" : "stopped",
            "uid" : "MU7FkOF+ggDsi/NzG2/WCA"
         },
         "ct:121" : {
            "node" : "srv2",
            "state" : "stopped",
            "uid" : "Cun557sFiGdukKHrNINgyw"
         },
         "ct:122" : {
            "node" : "srv2",
            "running" : 1,
            "state" : "started",
            "uid" : "j5A0UFAbwzbcroDQs/M8Zw"
         },
         "ct:124" : {
            "node" : "srv2",
            "state" : "stopped",
            "uid" : "sqfexfgijfMdVcL+Zl98Lw"
         },
         "ct:125" : {
            "node" : "srv2",
            "running" : 1,
            "state" : "started",
            "uid" : "NYHWPcZ9Z1d6G/ih0GBJyw"
         },
         "ct:126" : {
            "node" : "srv2",
            "running" : 1,
            "state" : "started",
            "uid" : "NvVKVQ2m0BSOBuyX65XtjA"
         },
         "ct:151" : {
            "node" : "srv3",
            "running" : 1,
            "state" : "started",
            "uid" : "7HpJYP2IzTLwkXB+MyBaFQ"
         },
         "vm:100" : {
            "node" : "srv2",
            "state" : "stopped",
            "uid" : "HFbk3wL93BZyICge4V/D4w"
         },
         "vm:101" : {
            "node" : "srv3",
            "running" : 1,
            "state" : "started",
            "uid" : "lmpzgzxVHz+oNCIub7cKmg"
         },
         "vm:102" : {
            "node" : "srv3",
            "state" : "stopped",
            "uid" : "Io8L9xr3064Dc3Y1P4IcZQ"
         },
         "vm:103" : {
            "node" : "srv3",
            "running" : 1,
            "state" : "started",
            "uid" : "1QiDCArnWEWGqXvP9qu2eg"
         },
         "vm:104" : {
            "node" : "srv2",
            "running" : 1,
            "state" : "started",
            "uid" : "u0wlhH07y73mOIwQFlpECA"
         },
         "vm:105" : {
            "node" : "srv3",
            "state" : "stopped",
            "uid" : "TOq09/GoFHvV5FtH9ah8lw"
         },
         "vm:106" : {
            "node" : "srv3",
            "running" : 1,
            "state" : "started",
            "uid" : "lZLVcWUNe7w4EiSJApyaxg"
         },
         "vm:108" : {
            "node" : "srv2",
            "running" : 1,
            "state" : "started",
            "uid" : "Qm+sOIYL3SCtelhOHYLtmg"
         },
         "vm:109" : {
            "node" : "srv3",
            "running" : 1,
            "state" : "started",
            "uid" : "BB3cHdg4hh+OlsTPV1jV+w"
         },
         "vm:113" : {
            "node" : "srv3",
            "running" : 1,
            "state" : "started",
            "uid" : "sOHdiCI+9yTQG9OSyhvjgg"
         },
         "vm:118" : {
            "node" : "srv3",
            "running" : 1,
            "state" : "started",
            "uid" : "uif7361JSqJb0HSMUzXwqg"
         },
         "vm:127" : {
            "node" : "srv2",
            "state" : "stopped",
            "uid" : "gbAws1VvZW6/oXUfluiGbg"
         },
         "vm:152" : {
            "node" : "srv3",
            "running" : 1,
            "state" : "started",
            "uid" : "ToJcc0X6Eb5rTSqm60a/Zg"
         }
      },
      "timestamp" : 1633632347
   },
   "quorum" : {
      "node" : "srv2",
      "quorate" : "1"
   }
}
```
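The dump above is long enough that comparing `manager_status` with each node's `lrm_status` by eye is error-prone. Below is a minimal sketch for cross-checking the two sections, assuming the JSON printed after `full cluster state:` has been copied out of the terminal. The `summarize` function and the trimmed `sample` dict are hypothetical illustrations built from entries in this output, not part of `ha-manager`. The sketch lists nodes whose `node_status` is not `online`, plus LRM results whose `uid` or reporting node differs from the manager's current `service_status` entry for that service. It only reports these differences and makes no claim about their cause.

```python
import json


def summarize(state: dict) -> dict:
    """Hypothetical helper: compare manager_status with lrm_status in the
    JSON that `ha-manager status -v` prints after "full cluster state:"."""
    manager = state["manager_status"]

    # Nodes the CRM master does not report as "online" (e.g. "fence").
    not_online = {
        node: status
        for node, status in manager["node_status"].items()
        if status != "online"
    }

    services = manager["service_status"]
    mismatched = []
    for node, lrm in state["lrm_status"].items():
        for uid, result in lrm.get("results", {}).items():
            sid = result["sid"]
            current = services.get(sid, {})
            # Flag results whose uid is not the one the manager currently holds
            # for that service, or that come from a node other than the one
            # the manager assigns the service to.
            if current.get("uid") != uid or current.get("node") != node:
                mismatched.append((node, sid, result["state"]))

    return {"not_online": not_online, "mismatched_results": sorted(mismatched)}


# Trimmed excerpt of the dump above; for the full dump, use
# summarize(json.load(open(path))) with the path of the saved JSON.
sample = {
    "lrm_status": {
        "srv1": {"mode": "active", "state": "active", "timestamp": 1633632344,
                 "results": {"IttCKFmEqRR0oQppcCK/dw": {
                     "exit_code": 0, "sid": "vm:118", "state": "started"}}},
        "srv2": {"mode": "active", "state": "active", "timestamp": 1633632352,
                 "results": {"5gZ+cGO2HAca6BHjQLwOSQ": {
                     "exit_code": 0, "sid": "ct:111", "state": "started"}}},
    },
    "manager_status": {
        "master_node": "srv2",
        "node_status": {"srv1": "fence", "srv2": "online", "srv3": "online"},
        "service_status": {
            "ct:111": {"node": "srv2", "running": 1, "state": "started",
                       "uid": "5gZ+cGO2HAca6BHjQLwOSQ"},
            "vm:118": {"node": "srv3", "running": 1, "state": "started",
                       "uid": "uif7361JSqJb0HSMUzXwqg"},
        },
        "timestamp": 1633632347,
    },
}

print(summarize(sample))
# {'not_online': {'srv1': 'fence'}, 'mismatched_results': [('srv1', 'vm:118', 'started')]}
```

Run against the full dump, the same check would also list the `srv3` results whose `uid` differs from the manager's entry (for example `vm:118`, `ct:112`, `ct:151`), since it compares every LRM result rather than only those from the fenced node.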