Forwarding Proxmox logs to Graylog

- Thread starter bsinha

- Start date


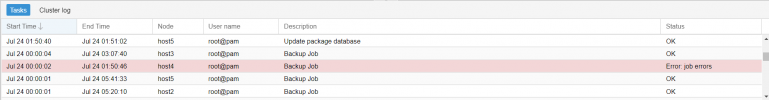

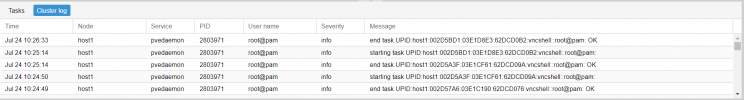


Depends on what you want.

How do we debug it?

I got this in the tasks log 'Error: Failed to run vncproxy.' on 2022-08-04 15:50:24, so let's see:

In general, everything you see in both views comes from the normal daemon.log.

Code:

Aug 4 15:50:25 proxmox6 pvedaemon[4056356]: <lnxbil@local-ldap-replica> end task UPID:proxmox6:003FAFF3:6041BB0C:62EBCEA0:vncproxy:2999:lnxbil@local-ldap-replica: Failed to run vncproxy.

The result of the task itself (shown if you double-click it) is stored in /var/log/pve/tasks and can be exported from there.

Code:

root@proxmox6 ~ > find /var/log/pve/tasks -name '*UPID:proxmox6:003FAFF3:6041BB0C:62EBCEA0:vncproxy:2999:lnxbil@local-ldap-replica*'

/var/log/pve/tasks/0/UPID:proxmox6:003FAFF3:6041BB0C:62EBCEA0:vncproxy:2999:lnxbil@local-ldap-replica:

root@proxmox6 ~ > cat /var/log/pve/tasks/0/UPID:proxmox6:003FAFF3:6041BB0C:62EBCEA0:vncproxy:2999:lnxbil@local-ldap-replica:

VM 2999 not running

TASK ERROR: Failed to run vncproxy.

So, just a "simple" join over files.
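To make the UPID in the example above easier to work with, here is a minimal Python sketch that splits it into its colon-separated fields. The field names (`pid`, `pstart`, `starttime` and so on) and the helper names `parse_upid` and `task_subdir` are my own labels, not something confirmed in this thread. The assumption that the subdirectory under /var/log/pve/tasks is the last hex digit of the start time is only inferred from the example path (`.../tasks/0/...` for a start time ending in `0`), so verify it on your own nodes.

```python
from datetime import datetime, timezone
from typing import NamedTuple


class Upid(NamedTuple):
    """Fields of a UPID string; the field names are illustrative."""
    node: str
    pid: int
    pstart: int
    starttime: datetime
    task_type: str
    task_id: str
    user: str


def parse_upid(upid: str) -> Upid:
    """Split 'UPID:node:pid:pstart:starttime:type:id:user:' into fields."""
    parts = upid.rstrip(":").split(":")
    if len(parts) != 8 or parts[0] != "UPID":
        raise ValueError(f"not a UPID: {upid!r}")
    _, node, pid, pstart, start, task_type, task_id, user = parts
    return Upid(
        node=node,
        pid=int(pid, 16),          # numeric fields are hexadecimal
        pstart=int(pstart, 16),
        starttime=datetime.fromtimestamp(int(start, 16), tz=timezone.utc),
        task_type=task_type,
        task_id=task_id,
        user=user,
    )


def task_subdir(upid: str) -> str:
    """Assumption: the subdirectory is the last hex digit of the start time."""
    return upid.split(":")[4][-1]
```

For the UPID in this thread, the start time decodes to 2022-08-04 13:50:24 UTC, which is consistent with the 15:50:24 shown above if the host runs on CEST.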

Is there any way to take the file names from /var/log/pve/tasks/, join each one with the content of its file, and then transfer the result to a log server? When we send the logs, only the content of the file is transferred to the log server. However, the meaningful data is actually in the filename.

The log data could be something like this:

UPID:proxmox6:003FAFF3:6041BB0C:62EBCEA0:vncproxy:2999:lnxbil@local-ldap-replica:VM 2999 not running: TASK ERROR: Failed to run vncproxy


Sorry, but have you read and tried to understand what I wrote?


The log data from daemon.log IS ACTUALLY like this:

Code:

Aug 4 15:50:25 proxmox6 pvedaemon[4056356]: <lnxbil@local-ldap-replica> end task UPID:proxmox6:003FAFF3:6041BB0C:62EBCEA0:vncproxy:2999:lnxbil@local-ldap-replica: Failed to run vncproxy.

If you want a more detailed explanation, just join that line with the contents of the task file with the given ID.
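The join described here could be sketched in Python roughly as follows. It pulls the UPID out of an "end task" line from daemon.log, looks for a file of that name in the subdirectories of the tasks directory (the same idea as the `find` call earlier), and appends the file's lines to the UPID. The function names `find_task_file` and `join_record` and the regular expression are illustrative assumptions, and the output format simply mirrors the one proposed earlier in the thread.

```python
import re
from pathlib import Path
from typing import Optional

# Assumption: the daemon.log line contains "end task " followed by the UPID,
# and the UPID ends with a colon before the status text.
UPID_RE = re.compile(r"end task (UPID:\S+:)")


def find_task_file(tasks_dir: Path, upid: str) -> Optional[Path]:
    """Look for a file named exactly like the UPID in each subdirectory."""
    for sub in sorted(tasks_dir.iterdir()):
        candidate = sub / upid
        if sub.is_dir() and candidate.is_file():
            return candidate
    return None


def join_record(daemon_line: str, tasks_dir: Path) -> Optional[str]:
    """Return 'UPID:...:<detail 1>: <detail 2>' or None if no UPID is found."""
    match = UPID_RE.search(daemon_line)
    if match is None:
        return None
    upid = match.group(1)
    path = find_task_file(tasks_dir, upid)
    if path is None:
        return upid  # the task file may be missing or already rotated away
    text = path.read_text(errors="replace")
    details = [line.strip() for line in text.splitlines() if line.strip()]
    return upid + ": ".join(details)
```

Calling `join_record(line, Path("/var/log/pve/tasks"))` on each "end task" line would give one record per finished task, which a shipper could then forward as a single message.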

My question, as I'm busy with a central logging project that ships logs from multiple Proxmox clusters to Loki via Promtail: is there a hook script of sorts that lets me know when the next file is created/closed/etc.?

These are the file names of the logs which are in the other folders in pve/tasks :)

It's a bit counterproductive to scan the directory every couple of minutes and then keep positional counters for all the files, most of which are no longer being written to.

I did.

It's a bit of a bigger challenge when you want to stream the logs to a central logger/store (like Loki / Elasticsearch) using a fairly static tool (like Promtail / Fluentd / Graylog).

Yes sure ... the problem I see is that you have some kind of 1:n table structure. The daemon.log contains a single line that points to a file that potentially has more than one line, so the question is: how should a central log system work with that?

I'd use FAM to monitor the directory and stream the contents to the central log system.

I'm curious how to proceed further and I'm also willing to set this up. I played around with Graylog last week, but I have to say that I'm not hooked. It looks so overcomplicated in comparison to a simple table-like structure if you just want to use it as a human-readable frontend (filtering and alerting may be superb, but I only looked at the human part). Maybe you can describe how you want to use it?

You tag each detail record with its filename_upid and a special tag like this_is_upid_detail. Then, when you get a UPID in the main log, you can query the this_is_upid_detail records with filename_upid == UPID to fetch the relevant details.
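As a rough illustration of that tagging idea, the Python sketch below turns each line of a task file into a record that carries the UPID and the special tag, and then filters a collection of such records by UPID. The in-memory list stands in for the central log store, and the field names `tag`, `line_no` and `message` plus the function names are hypothetical. In Loki or Graylog the lookup would be a query in that system's own language, not Python.

```python
from typing import Dict, Iterable, Iterator, List, Union

DETAIL_TAG = "this_is_upid_detail"  # tag name taken from the post above

Record = Dict[str, Union[str, int]]


def detail_records(upid: str, lines: Iterable[str]) -> Iterator[Record]:
    """Emit one tagged record per non-empty line of a task file."""
    for line_no, line in enumerate(lines, start=1):
        text = line.rstrip("\n")
        if text:
            yield {
                "tag": DETAIL_TAG,
                "filename_upid": upid,
                "line_no": line_no,
                "message": text,
            }


def lookup_details(store: Iterable[Record], upid: str) -> List[str]:
    """Equivalent of: tag == this_is_upid_detail AND filename_upid == UPID."""
    return [
        str(record["message"])
        for record in store
        if record.get("tag") == DETAIL_TAG and record.get("filename_upid") == upid
    ]
```

This keeps the 1:n relation intact: the main log line stays a single record, and the n detail lines are separate records that share the UPID as a join key.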

incron/FAM/etc. [1] adds a tad more fragile complexity to the hypervisors, whereas a hookscript from the task logger would have been a very KISS solution to just stream the file to the central log (using e.g. Promtail).

Note [1]: years back, there was an interface (or was it an external module?) that could feed you all the FS events, which you could then filter as you wanted. The problem with things like FAM/inotify: you need an FD per directory, recursively, and a recursive watch (as would be needed for the task logs) could be a challenge for kernel resources.

Is the query done automatically, or manually on a per-line basis? If it's manual, then there is really no upside in doing this, is there? Normally things should get easier.

Yes, the hookscript would be really better if it could already prepare some kind of JSON-like logging structure, or if PVE would implement GELF.
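To give an idea of what such a structured record might look like, here is a small Python sketch that builds a GELF 1.1 payload from a finished task. This is not something PVE produces itself. The function name `to_gelf`, the choice of the last detail line as `short_message`, the severity mapping, and the additional field `_upid` are all assumptions made for illustration.

```python
import json
from typing import Sequence


def to_gelf(host: str, upid: str, detail_lines: Sequence[str],
            timestamp: float, failed: bool) -> str:
    """Build a GELF 1.1 JSON payload for one finished task."""
    payload = {
        "version": "1.1",                      # required by GELF
        "host": host,                          # required by GELF
        # Assumption: the last line of the task file is the best summary.
        "short_message": detail_lines[-1] if detail_lines else upid,
        "full_message": "\n".join(detail_lines),
        "timestamp": timestamp,                # seconds since the Unix epoch
        "level": 3 if failed else 6,           # syslog: 3 = error, 6 = info
        "_upid": upid,                         # additional fields start with "_"
    }
    return json.dumps(payload)
```

Because the UPID travels as a field of its own, the single main-log line and the multi-line task output end up in one message, and the central system can filter on `_upid` directly.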

Oh, I wasn't aware of the complexities behind it; that would really stress the hypervisor with this hash-organized directory structure.

It seems to be possible to get something more automated than manual copy-pasting.

Still, if it's there, then it's queryable.