Hello,

Sorry if it's a stupid question but I can't solve it by myself.

A couple of weeks ago I set up a new PBS server (version 3.0.3): a physical machine with 1 TB of storage (a single SSD).

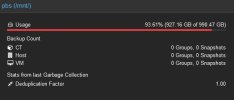

I set the automatic prune job to keep the last 7 days of backups, and we hovered around 90 % of space used for some days. Unfortunately, last night PBS ran out of space and all backups are now failing.

Now I see PBS disk usage at 100.00 % and I don't know how to get out of this situation. My plan was to reduce the backup retention to only 5 days. I tried, but how can I manually delete days 6 and 7?

I tried datastore -> name_of_my_datastore -> content -> prune all -> Keep last 5 -> Prune, but I receive the error "ENOSPC: No space left on device".

I also tried to manually delete some less important backups using the red recycle bin icon. That works, but PBS is still at 100 % usage, so it seems no actual files were deleted.

I tried to manually start garbage collection, but I receive the error "unable to start garbage collection job on datastore pbs - ENOSPC: No space left on device".

I can't even connect to the shell because the disk is full.

How can I free up some space?

Thanks
