Hi all,

I'm fairly new here. I've been using Proxmox for a while now and have it running pretty much as I'd like, with multiple VMs across 2 nodes. I have one question I can't seem to find an answer to. When I set up a VM, I might allocate it, say, 500GB, while it only actually uses perhaps 100GB, but Proxmox reports the full 500GB as used. Then when I migrate it to another server, it takes a very long time because it's transferring the full 500GB. I've enabled discard and even run fstrim in the VMs, but the disk still shows as occupying the full allocated space.

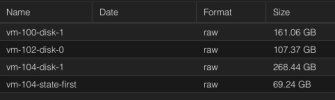

The disks show as RAW, and I've tried storing them on LVM-Thin as well as on a ZFS storage pool. What am I doing wrong here? Am I expecting it to do something it isn't capable of, or have I just set something up wrong?

Thanks in advance for your help and advice!