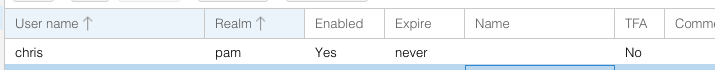

I've set up a new user from Datacenter > Permissions > Users.

However on creating the user and attempting to set the password I get:

change password failed: user 'Chris' does not exist (500)

If I then attempt to delete the user I get the below:

Code:

delete user failed: cannot update tfa config, following nodes are not up to date:
cluster node 'pve02' is too old, did not broadcast its version info
cluster node 'pve03' is too old, did not broadcast its version info
cluster node 'pve01' is too old, did not broadcast its version info (500)

This is a brand new cluster - I really can't see what might be causing this... any ideas?

Thanks,

Chris.