Hi All

I’ve spent all week trying to get clustering working, but I keep running into problems. I’m now at the point where I need to reach out to the community.

Steps:

I install Proxmox 8.0.3 on two separate machines.

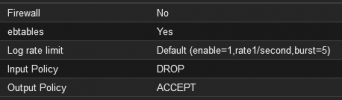

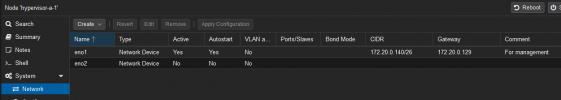

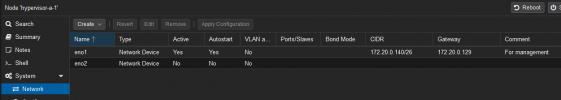

I edit the network config, delete the bridge, and leave a single network interface configured with a static IP. This interface will be dedicated to cluster comms. eno2 is currently unplugged and unused. My plan is to create a bridge on eno2 for the VMs to use, but for now I've not set it up, to simplify the network config. Here is a screenshot from one of my servers. The other server is set up the same way, only with a different IP address.

Note: I have also tried it without changing the network settings, just going with the default bridge as configured. It makes no difference, i.e. I still get the same problem.

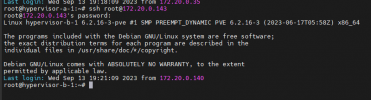

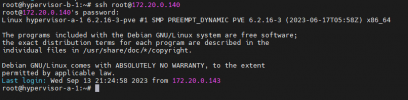

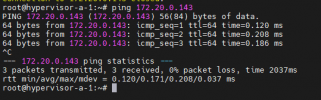

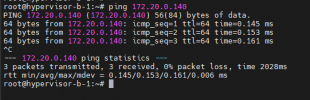

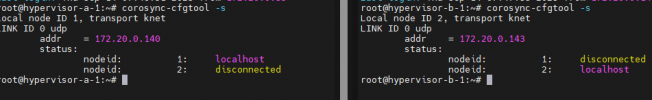

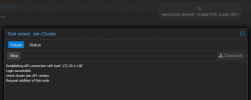

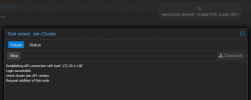

On node 1 I create a cluster, copy the join information, and paste it into node 2. On node 2 I select which network interface to use and enter the root password. The join then begins and I lose connection to node 2. I think losing the connection is possibly expected at this point, but I don’t know enough about the process to be certain.

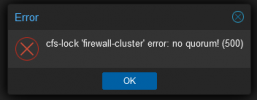

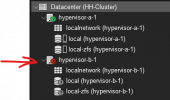

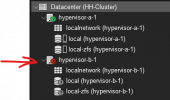

On node 1 I can see that node 2 has been added, so something worked. But node 2 has a red cross on it.

When I try to access node 2 from the GUI of node 1, I get some messages about SSL.

I thought maybe it’s because the default installs use self-signed certs, and when you access them via a browser you get the untrusted warning. As a human you can simply accept the warning and proceed to the login page, but a piece of code can’t do that, so maybe that’s why the cluster join is failing.

With this theory, I installed Proxmox again from scratch on both servers and, before creating a cluster, uploaded my Let’s Encrypt SSL certs to both machines. I verified that the browser recognised that official SSL certs were being used. I restarted both servers and then tried creating a cluster again. I still got the same issue.

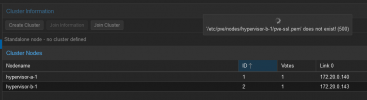

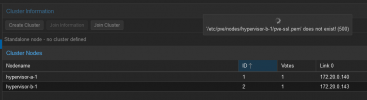

Another thing I have tried: on node 1 I copied the SSL certs from “/etc/pve/nodes/hypervisor-a-1/” to “/etc/pve/nodes/hypervisor-b-1/”, as I saw they weren’t present there. I restarted both machines but still no luck.

If I SSH into node 2, its "/etc/pve/nodes" directory does not have the same layout as on node 1. Maybe this is normal?
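To make the layout difference concrete, something like the following could be run on each node and the output compared. This is only a minimal sketch: `list_layout` and `diff_layouts` are made-up helper names, and it assumes Python 3 is available on the nodes and that "/etc/pve/nodes" is readable by the user running it.

```python
import os


def list_layout(root: str) -> list[str]:
    """Return every file and directory under root as a sorted list of relative paths."""
    entries = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            full = os.path.join(dirpath, name)
            entries.append(os.path.relpath(full, root))
    return sorted(entries)


def diff_layouts(a: list[str], b: list[str]) -> tuple[list[str], list[str]]:
    """Return (paths only in a, paths only in b)."""
    return sorted(set(a) - set(b)), sorted(set(b) - set(a))
```

Calling `list_layout("/etc/pve/nodes")` on each node and passing the two lists to `diff_layouts` would show exactly which entries are missing on which side.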

If it helps:

I can SSH from the GUI of node 1 to node 2.

I can’t reach node 2 via its web interface, which possibly explains why node 1 can’t do the same. It’s as if node 2 dies as soon as I add it to the cluster.
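The "SSH works, web interface does not" symptom can be checked from any other machine on the network with a plain TCP connection test. The sketch below assumes the default ports (22 for SSH, 8006 for the Proxmox web GUI); `port_open` and `check_node` are illustrative names, and the address in the usage note is a placeholder, not my real one.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def check_node(host: str, ports: dict[str, int]) -> dict[str, bool]:
    """Map each service name to whether its TCP port accepts connections."""
    return {name: port_open(host, port) for name, port in ports.items()}
```

For example, `check_node("192.0.2.12", {"ssh": 22, "web-gui": 8006})` would be expected to report SSH as reachable and the web GUI as unreachable if node 2 behaves as described. This only tests whether the port accepts connections; it says nothing about certificates or cluster state.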

I’m not really sure what to do at this point. My next task was to add an RPi as a third voting node, but there is no point moving on to that if I can’t get two nodes doing anything.
