Hi there,

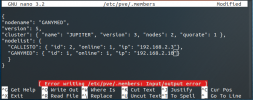

I have recently been running a two-node PVE cluster in my home environment.

Due to some accidents I needed to change the IP address of one node (the other one kept its address in the original IP range).

What did I do?

On both nodes (while they still had quorum) I edited corosync.conf by running:

Bash:

nano /etc/pve/corosync.conf

and finally restarted the service on both nodes (just in case ;-)

Bash:

systemctl restart corosync

After that I needed to change the node's IP address:

Bash:

nano /etc/hosts

nano /etc/network/interfaces

systemctl restart networking

[This is the point where I am right now]

So now I have two issues which seem to be leftovers from the IP change:

1. When I now migrate anything to the node with the new IP, I receive this output in the migration window:

Code:

2021-06-01 11:38:36 # /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=<NODE_WITH_NEW_IP>' root@<THE_NODES_OLD_IP> /bin/true

2021-06-01 11:38:36 ssh: connect to host <THE_NODES_OLD_IP> port 22: No route to host

2021-06-01 11:38:36 ERROR: migration aborted (duration 00:00:03): Can't connect to destination address using public key

TASK ERROR: migration aborted

If I understand this correctly, there must be another config file storing the IP addresses of the other nodes for migrations. Does anyone know where I need to edit this?
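To narrow down where the old address might still be stored, something like the following could be used to search a set of files for it. This is only a rough sketch: `find_old_ip` is a made-up helper name, not a PVE tool, and it assumes GNU grep as shipped with Debian.

```shell
#!/bin/sh
# Hypothetical helper (not part of PVE): print every line in the given
# files that still mentions the old IP address.
# Usage: find_old_ip OLD_IP FILE...
find_old_ip() {
    old_ip="$1"
    shift
    for f in "$@"; do
        # Skip paths that do not exist or are not readable on this node.
        [ -r "$f" ] || continue
        # -F: treat the address as a fixed string (dots are not wildcards)
        # -w: whole-word match, so 192.0.2.10 does not match 192.0.2.100
        # -n: print line numbers, -H: always print the file name
        grep -FwnH -- "$old_ip" "$f"
    done
    # A search with no hits is a valid result, not an error.
    return 0
}
```

On the node it could be called as `find_old_ip 192.0.2.10 /etc/hosts /etc/network/interfaces /etc/pve/corosync.conf`, where `192.0.2.10` is just a placeholder for the old address and the file list is the three files edited above. Further files can be appended. If none of them report a hit, the stale address is presumably coming from somewhere else.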

2. When logging into the web interface on the node which didn't change, I used to also see the stats of the VMs from the other node (where I changed the IP address). Unfortunately it now looks like this:

<description for those who aren't logged in here: a loading bar which doesn't change any more, message: "Error Connection error: 595: No route to host">

On the web UI of the node with the changed address, however, this doesn't occur and I can see all the stats from both nodes.

So maybe in this second case it is also still trying to connect to the old address of this node? How can I fix this?

Thanks a lot in advance!

This forum, with all the help I found here reading your threads, is simply great!