Hello,

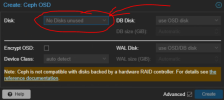

I have freshly installed Proxmox 8.1.1 and formatted the drive as XFS. Now, when I click the Create OSD button in the OSD section of the Ceph panel in the web GUI, it keeps saying "No Disks unused". However, when I run lsblk I can see what looks like 1.7 TB free on the drive. Why is that?

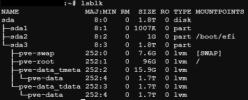

    root@dws-zve-3:~# lsblk
    NAME                 MAJ:MIN RM  SIZE RO TYPE MOUNTPOINTS
    sda                    8:0    0  1.8T  0 disk
    ├─sda1                 8:1    0 1007K  0 part
    ├─sda2                 8:2    0    1G  0 part /boot/efi
    └─sda3                 8:3    0  1.8T  0 part
      ├─pve-swap         252:0    0  7.6G  0 lvm  [SWAP]
      ├─pve-root         252:1    0   96G  0 lvm  /
      ├─pve-data_tmeta   252:2    0 15.9G  0 lvm
      │ └─pve-data       252:4    0  1.7T  0 lvm
      └─pve-data_tdata   252:3    0  1.7T  0 lvm
        └─pve-data       252:4    0  1.7T  0 lvm
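In case it helps whoever answers, here is a minimal sketch for listing candidate OSD disks. It assumes (this is not confirmed by the GUI message itself) that Proxmox only offers whole disks with no partitions or LVM volumes on them. If that assumption holds, it would explain the message on this node: sda is the only disk, and it already carries sda1 to sda3 plus the pve volume group. The function names `unused_disks` and `read_lsblk` are hypothetical; the script only reads `lsblk --json`, which nests partitions and LVM volumes under a `children` key.

```python
import json
import subprocess
from typing import Any


def unused_disks(lsblk_data: dict[str, Any]) -> list[str]:
    """Return names of whole disks that have no child devices.

    Assumption: a disk counts as "unused" only if lsblk reports no
    partitions or LVM volumes beneath it (no "children" entry).
    """
    return [
        dev["name"]
        for dev in lsblk_data.get("blockdevices", [])
        if dev.get("type") == "disk" and not dev.get("children")
    ]


def read_lsblk() -> dict[str, Any]:
    # `lsblk --json` prints the device tree; child devices are nested
    # under a "children" list on their parent device.
    out = subprocess.run(
        ["lsblk", "--json", "-o", "NAME,TYPE,SIZE"],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return json.loads(out)


if __name__ == "__main__":
    print(unused_disks(read_lsblk()) or "no unused whole disks found")
```

On the layout shown above, this would report no unused disks, because sda has partitions as children.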

Any help would be greatly appreciated.
