Hi all,

I have a setup of 3 Proxmox servers (7.3.6) running Ceph 16.2.9, with 21 SSD OSDs: 12 × 1.75 TB and 9 × 0.83 TB. On these OSDs I have one pool with replication size 1 (a single copy).

I have set `pg_autoscale_mode` to `on`, and the pool ended up with 32 PGs.
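For scale, here is a rough back-of-the-envelope estimate. It assumes this pool is the only one on these OSDs and that CRUSH places PGs in proportion to disk capacity; both are assumptions, not something stated above.

$$
\begin{aligned}
C_{\text{total}} &= 12 \times 1.75\,\text{TB} + 9 \times 0.83\,\text{TB} = 21.00 + 7.47 = 28.47\,\text{TB} \\
\overline{\text{PGs per OSD}} &= \frac{\text{pg\_num} \times \text{size}}{N_{\text{OSD}}} = \frac{32 \times 1}{21} \approx 1.52 \\
\text{PGs on a 1.75 TB OSD} &\approx 32 \times \frac{1.75}{28.47} \approx 1.97 \\
\text{PGs on a 0.83 TB OSD} &\approx 32 \times \frac{0.83}{28.47} \approx 0.93
\end{aligned}
$$

With only one or two PGs per OSD on average, each PG is likely a large slice of the pool, so the placement of a handful of PGs largely decides how full each OSD gets. The PGS column in the OSD table shows 4 to 13 PGs per OSD, more than this estimate, which suggests either other pools share these OSDs or the pool's actual `pg_num` or size differs from the values above.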

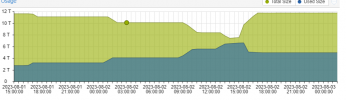

My problem is that the OSDs are very badly imbalanced:


```
ID  CLASS   WEIGHT  REWEIGHT     SIZE  RAW USE     DATA     OMAP     META    AVAIL   %USE   VAR  PGS  STATUS
 0  ssd    1.74599   0.90002  1.7 TiB  1.1 TiB  1.1 TiB  3.2 MiB  2.7 GiB  647 GiB  63.80  1.77   13  up
 5  ssd    0.82999   0.90002  850 GiB  757 GiB  756 GiB   37 MiB  1.2 GiB   93 GiB  89.05  2.47    7  up
 6  ssd    0.82999   1.00000  850 GiB  8.2 GiB  7.8 GiB  9.5 MiB  434 MiB  842 GiB   0.96  0.03    4  up
 7  ssd    0.82999   1.00000  850 GiB  381 GiB  381 GiB   20 MiB  625 MiB  469 GiB  44.87  1.24    5  up
16  ssd    1.74599   0.90002  1.7 TiB  1.3 TiB  1.3 TiB  3.6 MiB  2.7 GiB  483 GiB  72.99  2.02   12  up
```

As you can see, OSD 5 is 89% full while OSD 6, on the same kind of disk, is only 0.96% full. Apart from these two extremes, the other OSDs are not balanced either.
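As a quick sanity check on the numbers, here is a small Python sketch that works only from the five OSD rows shown in the post. It assumes the table is `ceph osd df` output, where VAR is an OSD's %USE divided by the average utilization; the variable names are made up for illustration.

```python
# Data copied from the five rows of the OSD table above, as
# OSD id -> (%USE, VAR). The other 16 OSDs are not shown in the post.
rows = {
    0: (63.80, 1.77),
    5: (89.05, 2.47),
    6: (0.96, 0.03),
    7: (44.87, 1.24),
    16: (72.99, 2.02),
}

# Assuming VAR = %USE / average %USE, the ratio %USE / VAR approximates
# the average utilization. Rows with a very small VAR (OSD 6) are skipped,
# because rounding to two decimals makes the ratio meaningless there.
estimates = [use / var for use, var in rows.values() if var >= 0.5]
mean_use = sum(estimates) / len(estimates)

# Compare the fullest and emptiest OSDs among the rows shown.
fullest = max(rows, key=lambda osd: rows[osd][0])
emptiest = min(rows, key=lambda osd: rows[osd][0])
spread = rows[fullest][0] - rows[emptiest][0]

print(f"average %USE implied by VAR: {mean_use:.1f}%")
print(f"fullest: osd.{fullest} at {rows[fullest][0]:.2f}%")
print(f"emptiest: osd.{emptiest} at {rows[emptiest][0]:.2f}%")
print(f"spread: {spread:.2f} percentage points")
```

On these rows the implied average is about 36%, so OSD 5 sits at roughly 2.5 times the average (matching its VAR of 2.47) while OSD 6 is close to empty.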

What can I do about this? Is it dangerous if an OSD fills up? Won't Ceph just use space on another OSD that still has room?

Thanks,

Spiros