Ceph monitor cannot be deleted when the node fails and goes offline!

- Thread starter zisain


If I understand you correctly, the node p3 is currently down? Will it ever be back or will it be replaced by a freshly installed one?

Because the old machine has been destroyed, it will be replaced by a new machine. The name will still be P3.

Do you have any /etc/systemd/system/ceph-mon.target.wants/ceph-mon@*.service on the P3 node?

I have seen this when reinstalling a node without formatting: the previous mon service file was still there.

Okay. For Proxmox VE you should follow the guide on how to remove a node from the cluster if you have not done so yet (https://pve.proxmox.com/pve-docs/chapter-pvecm.html#_remove_a_cluster_node). Also check out the note at the end mentioning that stored SSH fingerprints need to be cleaned manually.

Since the old node is not present anymore, any steps regarding systemd services should be obsolete. The one thing you need to do manually is to remove the mon from Ceph:

Code:

ceph mon remove {mon-id}

With that, it will hopefully be gone.
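Before removing anything, it can help to confirm whether Ceph itself still lists the monitor. The following is a minimal sketch, not an official procedure: `mon_in_dump` is a hypothetical helper name, and it assumes that each monitor line printed by `ceph mon dump` ends in `mon.<name>`, which may differ between Ceph releases.

```shell
#!/bin/sh
# mon_in_dump NAME: read `ceph mon dump` text on stdin and succeed
# if a monitor called NAME is listed in the monmap.
# Assumption: every monitor line ends in "mon.NAME".
mon_in_dump() {
    grep -Eq "(^|[[:space:]])mon\.$1\$"
}

# Usage on one of the remaining nodes (not executed here):
#   if ceph mon dump 2>/dev/null | mon_in_dump p3; then
#       ceph mon remove p3
#   else
#       echo "p3 is not in the monmap; look for leftovers on disk instead"
#   fi
```

If the monitor is not in the monmap but Proxmox VE still shows it, the remaining traces are probably outside Ceph's own monitor map.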


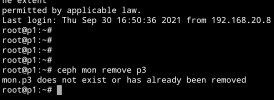

I have tested this command, and it does not work.

root@p1:~# pveceph mon destroy p3

no such monitor id 'p3'

Are there remnants in the /etc/pve/ceph.conf file? IP addresses, config sections?

But I modified the ceph.conf, and it still can't be cleared.

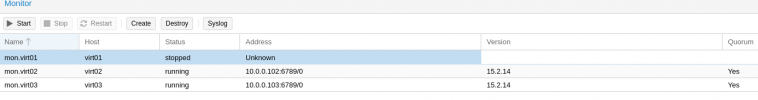

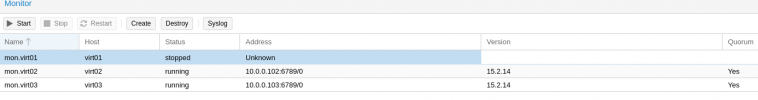
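To double-check a config file for remnants like the ones asked about above, a plain text search is often enough. This is only a sketch: `conf_mentions` is a hypothetical helper, the match is a fixed-string search (so `mon.p3` would also match `mon.p30`), and the exact section and key names in ceph.conf vary between versions.

```shell
#!/bin/sh
# conf_mentions FILE MONID [IP]: print every line of FILE, with its line
# number, that names the monitor section "mon.MONID" or the given IP.
# Returns non-zero when nothing matches, i.e. the file looks clean.
conf_mentions() {
    if [ -n "${3:-}" ]; then
        grep -nF -e "mon.$2" -e "$3" "$1"
    else
        grep -nF -e "mon.$2" "$1"
    fi
}

# Usage (not executed here; the IP is a placeholder for the old node's address):
#   conf_mentions /etc/pve/ceph.conf p3 10.0.0.3
```

Any line it prints is a candidate for manual review before editing the file.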

I am having a similar problem: Proxmox still sees a monitor, but it has already been removed from Ceph:

root@virt01:/var/lib/ceph# pveceph mon destroy virt01

no such monitor id 'virt01'

root@virt01:/var/lib/ceph# ceph mon remove virt01

mon.virt01 does not exist or has already been removed

root@virt01:/var/lib/ceph#

But I still see it in GUI so I can't add a new one...


root@virt01:/var/lib/ceph# pveceph createmon --monid virt01 --mon-address 10.0.0.101

monitor 'virt01' already exists


We don't know where PVE stores this configuration; otherwise we could find it and delete it. I recently looked into it and found that PVE requires the cluster to keep at least a few OSDs and monitors, so in the end you cannot delete the last ones. This means that setting up Ceph on PVE is irreversible. There is currently no official solution.

Did you check the hints mentioned earlier in the thread? Is there still a systemd unit? Is the mon still mentioned in the Ceph config? Is the directory of the mon still present (/var/lib/ceph/mon/*)? And what is the output of ceph -s?

There are a few places where Proxmox VE checks if a mon is present, and they all need to be cleared.
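The on-disk places named in this thread can be checked in one pass on the affected node. This is a rough sketch under assumptions: `mon_leftovers` and its optional `ROOT` prefix are hypothetical (the prefix exists only so the function can be tried against a scratch directory), the cluster name is assumed to be the default `ceph`, and the directory layout follows the `/var/lib/ceph/mon/ceph-test/` path reported later in this thread. The Ceph config is the other place to check and is not covered here.

```shell
#!/bin/sh
# mon_leftovers MONID [ROOT]: print the paths that still exist for MONID.
# ROOT is a hypothetical prefix for dry runs; empty means the real filesystem.
mon_leftovers() {
    id=$1
    root=${2:-}
    for path in \
        "$root/etc/systemd/system/ceph-mon.target.wants/ceph-mon@$id.service" \
        "$root/var/lib/ceph/mon/ceph-$id"
    do
        # -e is false for dangling symlinks, so test -L as well.
        if [ -e "$path" ] || [ -L "$path" ]; then
            printf '%s\n' "$path"
        fi
    done
}

# Usage (not executed here):
#   mon_leftovers virt01
```

An empty result only means these two paths are clean; it says nothing about the monmap or ceph.conf.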

Another thing that can be tried is to disable the ceph-mon.target systemd unit on that node:

systemctl disable ceph-mon.target

@zisain What do you get if you run ceph mon dump on the remaining nodes?

Do I understand it correctly, and you also have a few OSDs still lingering around from the destroyed node? If so, have a look at the Ceph documentation on how to manually remove OSDs from the cluster's CRUSH map: https://docs.ceph.com/en/latest/rados/operations/add-or-rm-osds/#removing-the-osd

ceph osd purge {id} --yes-i-really-mean-it

In the environment at that time, I used these Ceph commands:

{

systemctl stop ceph-osd@0.service

ceph osd out 0

ceph osd crush rm osd.0

ceph osd rm osd.0

ceph auth del osd.0

}

I completely deleted the OSD, but because I couldn't delete the last mon, I finally had to rebuild the system. Later, I tested the problem in a virtual machine. I suspect the cause is that the pool was not deleted. I will test again in the future and post the results.

The solution for me was to delete the folder:

Code:

rm -rf /var/lib/ceph/mon/ceph-test/

Then I recreated the mon from the UI, and it failed with:

TASK ERROR: command '/bin/systemctl start ceph-mon@test' failed: exit code 1

and fixed with:

Code:

/bin/systemctl daemon-reload

/bin/systemctl enable ceph-mon@test

/bin/systemctl start ceph-mon@test
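For a monitor with a different ID, the same steps can be printed first and reviewed before anything is run. This is a sketch only: `mon_recover_plan` is a hypothetical helper, it assumes the default cluster name `ceph`, and it assumes monitor IDs consist of letters, digits, `_` and `-`. It rejects an empty or unusual ID so that the `rm -rf` path cannot collapse to the parent directory.

```shell
#!/bin/sh
# mon_recover_plan MONID: print (do not run) the steps from the post above.
mon_recover_plan() {
    id=$1
    case $id in
        ''|*[!A-Za-z0-9_-]*)
            echo "invalid monitor ID: '$id'" >&2
            return 1
            ;;
    esac
    printf 'rm -rf /var/lib/ceph/mon/ceph-%s/\n' "$id"
    printf '# recreate the monitor from the Proxmox VE UI, then:\n'
    printf 'systemctl daemon-reload\n'
    printf 'systemctl enable ceph-mon@%s\n' "$id"
    printf 'systemctl start ceph-mon@%s\n' "$id"
}

# Usage (prints the plan only):
#   mon_recover_plan test
```

Printing instead of executing keeps the destructive `rm -rf` step under manual control.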
