Hello,

I have a performance issue on my VM. I tried changing the config, args, and other settings, but nothing seems to work.

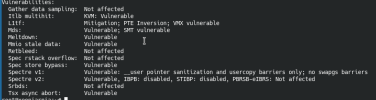

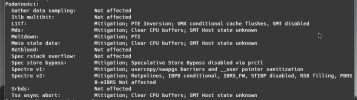

I tried lowering the core count in the VM's hardware section, lowering memory, resetting args to stock, changing the CPU type, reinstalling the OS, creating a different VM, disabling mitigations, etc.

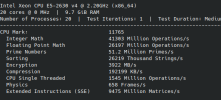

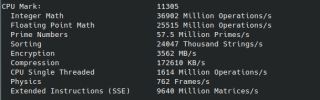

As you can see from the PassMark results I provided, the performance difference is large.

Proxmox host spec:

Xeon E5-2630v4

DDR4 4x8GB (2666MHz CL19)

XFX RX 6600 (passthrough to VM)

PVE 8.1.4 (pve-manager/8.1.4/ec5affc9e41f1d79 (running kernel: 6.5.11-7-pve))

VM config:

Code:

agent: 1

args: -cpu 'host,hv_ipi,hv_relaxed,hv_reset,hv_runtime,hv_spinlocks=0x1fff,hv_stimer,hv_synic,hv_time,hv_vapic,hv_vpindex,+kvm_pv_eoi,+kvm_pv_unhalt,+invtsc'

balloon: 0

bios: ovmf

boot: order=sata0;ide2;net0

cores: 16

cpu: host

efidisk0: local-lvm:vm-102-disk-1,efitype=4m,pre-enrolled-keys=1,size=4M

hostpci0: 0000:05:00,pcie=1,romfile=vbios-xfxrx6600.bin

hotplug: disk,network,usb

ide2: local:iso/virtio-win-0.1.240.iso,media=cdrom,size=612812K

machine: pc-q35-8.1

memory: 10240

meta: creation-qemu=7.2.0,ctime=1679563559

name: InkaVM-v2

net0: virtio=82:55:13:1F:40:7E,bridge=vmbr0

net1: virtio=CE:DD:E6:82:D7:BF,bridge=vmbr1,firewall=1

numa: 0

onboot: 1

ostype: win10

protection: 0

sata0: local-lvm:vm-102-disk-0,cache=writeback,discard=on,size=120G,ssd=1

scsi1: lexar-1000:vm-102-disk-0,backup=0,cache=writeback,discard=on,size=400G,ssd=1

scsi2: chrupek-500:102/vm-102-disk-0.qcow2,backup=0,cache=writeback,discard=on,size=350G,ssd=1

scsihw: virtio-scsi-pci

smbios1: uuid=d64f6615-0f4d-8016-ffff-1de971325348,manufacturer=UEZpbGlwVGVjaA==,product=WDk5LVR1cmJv,version=MDYuMDQuMjAyMw==,serial=RUY1REQ0MjA=,sku=U0tVTEw=,family=SW5rdcWbIEdhbWluZw==,base64=1

sockets: 1

tablet: 0

tags: bandytamoc;inkavm;logiceksploduje

vga: none

virtio0: lexar-1000e:102/vm-102-disk-0.qcow2,discard=on,iothread=1,size=1G

vmgenid: 06960840-91a6-4fe8-bfb0-cc1fb5a804bb

Here is the PassMark result from the host: LINK

PassMark result from host on Windows:

PassMark result from VM:

PS: English isn't my first language, so sorry for any mistakes.

Thanks,

Filip