Greetings,

A quick background on my small infrastructure:

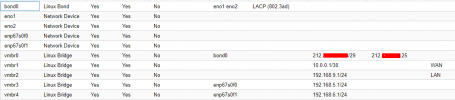

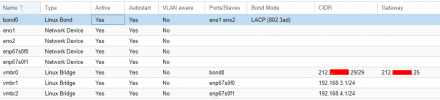

I have a Proxmox server running multiple VMs and LXCs, with an unused SFP+ port. All incoming traffic to the public IP is routed via iptables: to a custom SSH port for direct server access, to port 8006 (the Proxmox web UI), and everything else to a VM running pfSense.

I also have a second physical server intended for running PBS. It has 2x 2TB NVMe drives and 8x 10TB HDDs.

I would like to install Proxmox on this fresh server and virtualize PBS on it (1. should I use a VM or an LXC?), but I'm concerned about connectivity problems given how my iptables routing is organized. I have never done this before.

Let's say the servers are connected to each other via the free SFP+ ports. I set the IP of the main server's SFP+ NIC to 192.168.5.2 and the IP of the backup server's SFP+ NIC to 192.168.5.3.

2. Which IP should I give the PBS VM?

3. Where should that IP be configured?

4. Can I connect from the main Proxmox server, whose host IP is 10.0.0.2, to the PBS VM at 192.168.5.4 running on the host at 192.168.5.3?

5. If the 2x 2TB NVMe drives are meant for the OS, how can I also use them as a ZFS special device? Put differently: can I partition each NVMe drive in half (before installing Proxmox or PBS), use one 1TB partition from each drive in RAID1 for the OS (either Proxmox or PBS), and use the remaining two 1TB partitions as a ZFS special device?

6. Does PBS need RAID10, or is RAID6/RAIDZ2 sufficient for its IOPS needs?
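For question 4, the deciding factor is whether the two endpoints share a subnet. The sketch below checks that with Python's standard `ipaddress` module. The /24 prefix lengths are a hypothetical assumption (the post gives no netmasks), and it assumes the PBS VM's virtual NIC is bridged onto the backup server's SFP+ port. Under those assumptions, the main host's 10.0.0.2 address is not on the 192.168.5.x network, but the same host also owns 192.168.5.2 on its SFP+ NIC, so traffic to 192.168.5.4 can use that directly connected interface without going through the iptables/pfSense path.

```python
import ipaddress

# Hypothetical /24 prefixes: the post lists addresses but no netmasks.
main_host_lan = ipaddress.ip_interface("10.0.0.2/24")       # main Proxmox host address
main_host_sfp = ipaddress.ip_interface("192.168.5.2/24")    # main server SFP+ NIC
backup_host_sfp = ipaddress.ip_interface("192.168.5.3/24")  # backup server SFP+ NIC
pbs_vm = ipaddress.ip_address("192.168.5.4")                # proposed PBS VM address


def directly_reachable(iface: ipaddress.IPv4Interface, target: ipaddress.IPv4Address) -> bool:
    """True if target is inside iface's subnet, i.e. reachable without a router."""
    return target in iface.network


for name, iface in [
    ("main host 10.0.0.2", main_host_lan),
    ("main host SFP+ 192.168.5.2", main_host_sfp),
    ("backup host SFP+ 192.168.5.3", backup_host_sfp),
]:
    print(f"{name} -> {pbs_vm}: same subnet = {directly_reachable(iface, pbs_vm)}")
```

The first check prints `False` and the other two print `True`, which is why the SFP+ link (not the 10.0.0.x network) would carry backup traffic in this setup.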
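For question 6, the trade-off between striped mirrors and RAIDZ2 on the 8x 10TB HDDs can be roughed out with simple arithmetic. This sketch uses the common ZFS rule of thumb that each top-level vdev delivers roughly one disk's worth of random IOPS. The 150 IOPS per HDD figure is a hypothetical placeholder, and capacities are raw data capacity before ZFS overhead and TB/TiB conversion, so treat the numbers as relative, not as measurements.

```python
from dataclasses import dataclass

DISK_TB = 10     # from the post: 10TB HDDs
DISK_IOPS = 150  # hypothetical random IOPS per HDD, for illustration only


@dataclass
class Layout:
    name: str
    vdevs: int       # number of top-level vdevs in the pool
    data_disks: int  # disks per vdev holding data (excluding mirror/parity)

    def usable_tb(self) -> int:
        # Raw data capacity, ignoring ZFS metadata/padding overhead.
        return self.vdevs * self.data_disks * DISK_TB

    def approx_random_iops(self) -> int:
        # Rule of thumb: ~one disk of random IOPS per vdev.
        # Mirrors can serve reads from both sides; this ignores that.
        return self.vdevs * DISK_IOPS


layouts = [
    Layout("RAID10 (4x 2-way mirrors)", vdevs=4, data_disks=1),
    Layout("RAIDZ2 (1x 8-disk vdev)", vdevs=1, data_disks=6),
    Layout("RAIDZ2 (2x 4-disk vdevs)", vdevs=2, data_disks=2),
]

for layout in layouts:
    print(f"{layout.name}: ~{layout.usable_tb()} TB, ~{layout.approx_random_iops()} random IOPS")
```

Under these assumptions, a single 8-disk RAIDZ2 vdev gives the most capacity (60 TB vs 40 TB) but roughly a quarter of the random IOPS of four mirrors, which is the core of the RAID10 vs RAIDZ2 question for a PBS datastore.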

I know this is a lot of questions, so if needed, we can arrange a paid session via https://www.buymeacoffee.com/ over a video call or something similar.
