I have a strange issue: scheduled backups, which have been configured and running for over a year, are now causing my physical server to go offline.

It's a two-node cluster (I know, and I accept the trade-offs) with nodes pve and pve2.

All VMs run on pve; only the Proxmox Backup Server VM runs on pve2 (I set it up this way to test the failures).

Proxmox Backup Server mounts an NFS share from TrueNAS.

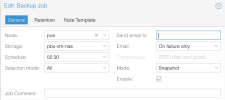

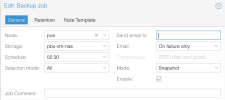

Backups are scheduled to run daily at 02:30, pointing to PBS.

Several backup jobs complete, and then at some point the server shuts off.

All Proxmox systems have plenty of resources.

Resource screenshots for PVE (frozen at the time of the crash), PVE2, PBS and Pihole:

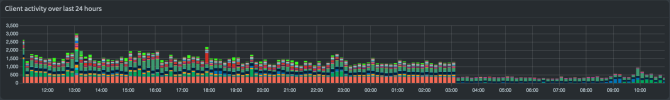

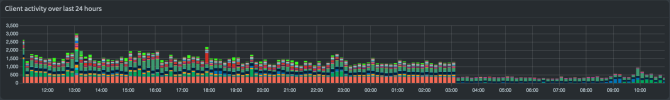

(Network activity drops off around 03:00, when the server dies during the backup.)

I've been troubleshooting this for just over a week now. I thought it might be a problematic VM, so I turned everything off and then ran each VM for a day, one by one.

For the past week nothing has shut down.

The last thing I did (last night) was start the PBS VM and re-enable backups. The server shut off again.

I'm fairly confident the scheduled backups are somehow the cause; I'm just not sure how they could make the hardware halt.

PBS log (excerpt):

```
...
Jan 23 03:06:57 pbs proxmox-backup-proxy[656]: starting new backup on datastore 'truenas-nfs': "vm/127/2024-01-23T08:06:55Z"
Jan 23 03:06:57 pbs proxmox-backup-proxy[656]: download 'index.json.blob' from previous backup.
Jan 23 03:06:57 pbs proxmox-backup-proxy[656]: register chunks in 'drive-scsi0.img.fidx' from previous backup.
Jan 23 03:06:57 pbs proxmox-backup-proxy[656]: download 'drive-scsi0.img.fidx' from previous backup.
Jan 23 03:06:57 pbs proxmox-backup-proxy[656]: created new fixed index 1 ("vm/127/2024-01-23T08:06:55Z/drive-scsi0.img.fidx")
Jan 23 03:06:57 pbs proxmox-backup-proxy[656]: add blob "/mnt/truenas/vm/127/2024-01-23T08:06:55Z/qemu-server.conf.blob" (396 bytes, comp: 396)
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: Upload statistics for 'drive-scsi0.img.fidx'
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: UUID: 2f837bbe75634a6eb8ac051245ae2a8d
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: Checksum: c138ebd31da3b5bd442cfd3fb7ef8ed9549469a3fe5fca5d2a816461c5a604bd
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: Size: 25769803776
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: Chunk count: 6144
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: Upload size: 4194304 (0%)
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: Duplicates: 6143+1 (100%)
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: Compression: 0%
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: successfully closed fixed index 1
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: add blob "/mnt/truenas/vm/127/2024-01-23T08:06:55Z/index.json.blob" (438 bytes, comp: 438)
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: syncing filesystem
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: successfully finished backup
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: backup finished successfully
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: TASK OK
Jan 23 03:07:24 pbs proxmox-backup-proxy[656]: Upload backup log to datastore 'truenas-nfs', root namespace vm/127/2024-01-23T08:06:55Z/client.log.blob
Jan 23 03:07:24 pbs proxmox-backup-proxy[656]: starting new backup on datastore 'truenas-nfs': "vm/128/2024-01-23T08:07:24Z"
Jan 23 03:07:24 pbs proxmox-backup-proxy[656]: download 'index.json.blob' from previous backup.
Jan 23 03:07:24 pbs proxmox-backup-proxy[656]: register chunks in 'drive-scsi0.img.fidx' from previous backup.
Jan 23 03:07:24 pbs proxmox-backup-proxy[656]: download 'drive-scsi0.img.fidx' from previous backup.
Jan 23 03:07:24 pbs proxmox-backup-proxy[656]: created new fixed index 1 ("vm/128/2024-01-23T08:07:24Z/drive-scsi0.img.fidx")
Jan 23 03:07:24 pbs proxmox-backup-proxy[656]: add blob "/mnt/truenas/vm/128/2024-01-23T08:07:24Z/qemu-server.conf.blob" (405 bytes, comp: 405)
Jan 23 03:10:01 pbs CRON[19197]: pam_unix(cron:session): session opened for user root(uid=0) by (uid=0)
Jan 23 03:10:01 pbs CRON[19198]: (root) CMD (test -e /run/systemd/system || SERVICE_MODE=1 /sbin/e2scrub_all -A -r)
Jan 23 03:10:01 pbs CRON[19197]: pam_unix(cron:session): session closed for user root
Jan 23 03:17:01 pbs CRON[19203]: pam_unix(cron:session): session opened for user root(uid=0) by (uid=0)
Jan 23 03:17:01 pbs CRON[19204]: (root) CMD ( cd / && run-parts --report /etc/cron.hourly)
Jan 23 03:17:01 pbs CRON[19203]: pam_unix(cron:session): session closed for user root
Jan 23 03:22:29 pbs proxmox-backup-proxy[656]: backup failed: connection error: timed out
Jan 23 03:22:29 pbs proxmox-backup-proxy[656]: removing failed backup
Jan 23 03:22:29 pbs proxmox-backup-proxy[656]: removing backup snapshot "/mnt/truenas/vm/128/2024-01-23T08:07:24Z"
Jan 23 03:22:29 pbs proxmox-backup-proxy[656]: TASK ERROR: removing backup snapshot "/mnt/truenas/vm/128/2024-01-23T08:07:24Z" failed - Directory not empty (os error 39)
...
```
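The timing in the PBS log is easier to read with a quick script. Here is a minimal Python sketch (my own illustration, not something from PBS itself). It assumes only the plain syslog line format shown in the excerpt and hard-codes the year, because syslog timestamps omit it. The script pairs each `starting new backup` line with its outcome and reports how long `proxmox-backup-proxy` logged nothing before that outcome. The `LOG` string is an abridged copy of the excerpt. The names `summarize`, `parse_time` and `YEAR` are hypothetical helpers.

```python
import re
from datetime import datetime

# Abridged copy of lines from the PBS log excerpt (illustration only).
LOG = """\
Jan 23 03:06:57 pbs proxmox-backup-proxy[656]: starting new backup on datastore 'truenas-nfs': "vm/127/2024-01-23T08:06:55Z"
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: successfully closed fixed index 1
Jan 23 03:07:22 pbs proxmox-backup-proxy[656]: backup finished successfully
Jan 23 03:07:24 pbs proxmox-backup-proxy[656]: starting new backup on datastore 'truenas-nfs': "vm/128/2024-01-23T08:07:24Z"
Jan 23 03:07:24 pbs proxmox-backup-proxy[656]: add blob "/mnt/truenas/vm/128/2024-01-23T08:07:24Z/qemu-server.conf.blob" (405 bytes, comp: 405)
Jan 23 03:10:01 pbs CRON[19197]: pam_unix(cron:session): session opened for user root(uid=0) by (uid=0)
Jan 23 03:22:29 pbs proxmox-backup-proxy[656]: backup failed: connection error: timed out
"""

# Assumption: syslog lines carry no year, so it is taken from the snapshot names.
YEAR = 2024
START_RE = re.compile(r'starting new backup on datastore .*: "([^"]+)"')


def parse_time(line: str) -> datetime:
    """Parse the 15-character syslog prefix, e.g. 'Jan 23 03:06:57'."""
    return datetime.strptime(f"{YEAR} {line[:15]}", "%Y %b %d %H:%M:%S")


def summarize(log: str) -> list:
    """Return (snapshot, started, last_progress, ended, outcome) for each finished backup."""
    results = []
    current = None
    for line in log.splitlines():
        if "proxmox-backup-proxy" not in line:
            continue  # ignore CRON and other unrelated entries
        ts = parse_time(line)
        match = START_RE.search(line)
        if match:
            current = {"snapshot": match.group(1), "started": ts, "last": ts}
        elif current is None:
            continue  # proxy output outside any backup we saw start
        elif "backup finished successfully" in line or "backup failed: " in line:
            if "backup finished successfully" in line:
                outcome = "ok"
            else:
                outcome = line.split("backup failed: ", 1)[1]
            results.append((current["snapshot"], current["started"], current["last"], ts, outcome))
            current = None
        else:
            current["last"] = ts  # any other proxy line counts as progress
    return results


for snapshot, started, last, ended, outcome in summarize(LOG):
    silent = ended - last
    print(f"{snapshot}: {outcome}, started {started:%H:%M:%S}, "
          f"last progress {last:%H:%M:%S}, silent for {silent}")
```

On this abridged excerpt, the script reports VM 127 as `ok`. It reports VM 128 as `connection error: timed out`, with 0:15:05 of silence between the last progress line at 03:07:24 and the failure at 03:22:29. A gap of that length is consistent with the backup source going away shortly after 03:07, which roughly matches the drop in network activity in the graph. The log alone does not prove which side stopped responding.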