I already have a Proxmox server. I've set up a new Proxmox server and, before adding any services, I'm trying to join it as a node to the first one. I copy the join information and start the join, but it just hangs. After a while, a "Connection Error" message appears on the cluster info screen in the background. The join process seems to have finished, though, so I close the window, and the node appears to have been added to the cluster: it shows the other server, and it also appears in the main server's GUI.

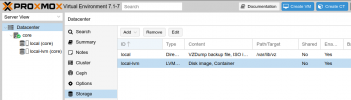

The only problem is that it has lost the main storage. It says:

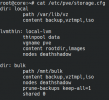

```
could not activate storage 'local-zfs', zfs error: cannot import 'rpool': no such pool available (500)
```

And, logically, adding any VM fails. Does anyone know what is happening here? It is a brand-new Proxmox instance (7.1-8).
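Because `/etc/pve` is shared across all cluster nodes, the new node now reads the same `storage.cfg` as the first one. A quick way to compare the ZFS storage definitions against the pools that actually exist on this node is sketched below. It is a minimal, hypothetical helper (`list_zfs_storages` is not a Proxmox tool), assuming the usual `storage.cfg` layout of a `type: name` header followed by tab-indented `key value` lines.

```shell
#!/bin/sh
# Hypothetical helper (not part of Proxmox): print each zfspool storage
# in a storage.cfg as "<storage> <pool> <nodes>", where <nodes> is "all"
# when the definition has no node restriction.
list_zfs_storages() {
    awk '
        /^[a-z]+:/ {                       # section header, e.g. zfspool: local-zfs
            if (name != "") print name, pool, nodes
            name = ""; pool = "-"; nodes = "all"
            if ($1 == "zfspool:") name = $2
            next
        }
        name != "" && $1 == "pool"  { pool = $2 }
        name != "" && $1 == "nodes" { nodes = $2 }
        END { if (name != "") print name, pool, nodes }
    ' "${1:-/etc/pve/storage.cfg}"
}
```

Usage on the new node, comparing the configured pools with the ones that are actually imported there:

```shell
list_zfs_storages           # storages defined cluster-wide
zpool list -H -o name       # pools present on this node
```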

```
# pvecm nodes

Membership information
----------------------
Nodeid Votes Name
1 1 deathshadow (local)
2 1 core
```