Hi, every morning at 3:00 AM Proxmox starts a backup of one VM and runs apt-get update to check for new updates.

After that, CPU usage on all my nodes goes up from the usual 2-3% to over 60%.

Output of pveversion -v:

proxmox-ve: 5.3-1 (running kernel: 4.15.18-10-pve)

pve-manager: 5.3-8 (running version: 5.3-8/2929af8e)

pve-kernel-4.15: 5.3-1

pve-kernel-4.15.18-10-pve: 4.15.18-32

corosync: 2.4.4-pve1

criu: 2.11.1-1~bpo90

glusterfs-client: 3.8.8-1

ksm-control-daemon: 1.2-2

libjs-extjs: 6.0.1-2

libpve-access-control: 5.1-3

libpve-apiclient-perl: 2.0-5

libpve-common-perl: 5.0-44

libpve-guest-common-perl: 2.0-19

libpve-http-server-perl: 2.0-11

libpve-storage-perl: 5.0-36

libqb0: 1.0.3-1~bpo9

lvm2: 2.02.168-pve6

lxc-pve: 3.1.0-2

lxcfs: 3.0.2-2

novnc-pve: 1.0.0-2

proxmox-widget-toolkit: 1.0-22

pve-cluster: 5.0-33

pve-container: 2.0-33

pve-docs: 5.3-1

pve-edk2-firmware: 1.20181023-1

pve-firewall: 3.0-17

pve-firmware: 2.0-6

pve-ha-manager: 2.0-6

pve-i18n: 1.0-9

pve-libspice-server1: 0.14.1-1

pve-qemu-kvm: 2.12.1-1

pve-xtermjs: 3.10.1-1

qemu-server: 5.0-45

smartmontools: 6.5+svn4324-1

spiceterm: 3.0-5

vncterm: 1.5-3

zfsutils-linux: 0.7.12-pve1~bpo1

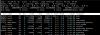

Top command
