Yes, judging from a short googling session [1], it really seems like those SSDs in particular perform awfully, even when compared to other consumer SSDs.

Have you tried benchmarking the storage with rados bench, as described in our Ceph benchmarking report [2]? You can also read up a bit about the performance characteristics of different SSDs there.
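As a rough sketch of what such a run could look like, the helper below only *builds* `rados bench` command lines (it does not execute them). It assumes the usual `rados -p <pool> bench <seconds> write|seq|rand` form with `-t` (concurrent operations), `-b` (object size for writes) and `--no-cleanup` (keep the written objects so a later read phase has data). The pool name `testpool` and the default values are hypothetical placeholders, not the parameters from the benchmark report.

```python
import shlex
from typing import List


def rados_bench_cmd(pool: str, seconds: int, mode: str,
                    block_size: str = "4M", threads: int = 16,
                    no_cleanup: bool = False) -> List[str]:
    """Build (but do not run) a `rados bench` command line.

    Pool name and defaults are illustrative placeholders only.
    """
    if mode not in ("write", "seq", "rand"):
        raise ValueError(f"unsupported mode: {mode}")
    cmd = ["rados", "-p", pool, "bench", str(seconds), mode,
           "-t", str(threads)]
    if mode == "write":
        # -b sets the object size written during the write phase
        cmd += ["-b", block_size]
        if no_cleanup:
            # keep the benchmark objects so seq/rand reads have data
            cmd.append("--no-cleanup")
    return cmd


if __name__ == "__main__":
    # Large-object write phase, then a sequential read of the same objects
    print(shlex.join(rados_bench_cmd("testpool", 60, "write", no_cleanup=True)))
    print(shlex.join(rados_bench_cmd("testpool", 60, "seq")))
    # A small-object write run is arguably closer to the "many small files" case
    print(shlex.join(rados_bench_cmd("testpool", 60, "write", block_size="4K")))
```

Comparing the large-object and small-object write runs on the same pool should show whether the problem is mostly per-operation overhead or raw bandwidth. Objects left behind by `--no-cleanup` can be removed afterwards with `rados -p testpool cleanup`.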

[1] https://www.tomshardware.com/features/crucial-p2-ssd-qlc-flash-swap-downgrade/2

[2] https://www.proxmox.com/en/downloads/item/proxmox-ve-ceph-benchmark-2020-09

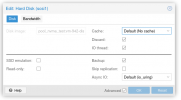

> I would strongly recommend against using 'Writeback (No Cache)' mode for production systems.

Setting the VM disk cache to "WriteBack" doesn't really change anything. BUT: setting it to "WriteBack (unsafe)" massively increases the performance of our little "Gimp Install" test case.

> Seems like on sustained writes those P2s can drop as low as 40 MB/s (once the SLC cache is full) [1]. Ceph in general already struggles with many small I/O operations, since its per-operation overhead is large compared to using the hardware directly. Writeback should alleviate that issue somewhat by queueing write commands. But in your case, I'm afraid it makes sense that those P2s are simply hitting their limits.

BUT: is it really realistic that the VM I/O performance (and note: only when writing small files) feels even SLOWER with NVMe drives as the backend than with 7,200 rpm SAS HDDs? Sure, we understood that consumer NVMe drives would not perform as well as enterprise ones... but even SLOWER than plain HDDs? Really?
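The two effects discussed here (per-operation overhead on small writes, and the post-cache slowdown on sustained writes) can be put into rough numbers with a back-of-the-envelope model. This is a simplified sketch, not a model of Ceph: it assumes every small write is purely latency-bound (Little's law: IOPS = in-flight requests / latency) and that the SLC cache is a fixed-size, initially empty buffer that is not flushed during the write. The 2 ms latency, 20 GB cache and 1500 MB/s cached speed below are hypothetical values chosen for illustration only.

```python
def iops_bound(latency_ms: float, queue_depth: int = 1) -> float:
    """Little's law: operations completed per second when queue_depth
    requests are in flight and each takes latency_ms end to end."""
    return queue_depth / (latency_ms / 1000.0)


def throughput_mb_s(block_kib: float, latency_ms: float,
                    queue_depth: int = 1) -> float:
    """Throughput in MB/s (10^6 bytes) when every request is latency-bound."""
    return iops_bound(latency_ms, queue_depth) * block_kib * 1024 / 1_000_000


def sustained_write_s(total_gb: float, cache_gb: float,
                      cached_mb_s: float, post_cache_mb_s: float) -> float:
    """Seconds to write total_gb if the first cache_gb go to a fast
    (SLC) cache and the remainder at the post-cache speed.
    Simplified: fixed-size, initially empty cache, no flushing mid-write."""
    fast_gb = min(total_gb, cache_gb)
    slow_gb = max(0.0, total_gb - cache_gb)
    return fast_gb * 1000 / cached_mb_s + slow_gb * 1000 / post_cache_mb_s


if __name__ == "__main__":
    # Hypothetical 2 ms per 4 KiB write, one request in flight:
    # 500 IOPS, about 2 MB/s regardless of how fast the drive is
    print(iops_bound(2.0), throughput_mb_s(4, 2.0))
    # Same latency with 16 requests in flight: 16x the throughput
    print(throughput_mb_s(4, 2.0, queue_depth=16))
    # 100 GB onto a hypothetical 20 GB SLC cache, then 40 MB/s afterwards
    print(sustained_write_s(100, 20, 1500, 40))
```

The first two lines illustrate why small, synchronous writes are dominated by latency rather than by the drive's headline bandwidth; the last shows how, once the cache is exhausted, the slow phase dominates the total write time.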
