Latest activity

dcsapak replied to the thread Can't add Proxmox Backup Server node. Hi, what data did you enter (remote ID, etc.)? Maybe you can post a screenshot of the summary page in the wizard?

Hannes Laimer replied to the thread [SOLVED] Can a VM backup be used on a different node? Hey, yes. That'll work, you just need access to the same backup storage. Backups do include the config, so after restoring you may have to update things like NIC names if they differ on the new node.

FrankList80 reacted to BobhWasatch's post in the thread Full Server backup + restore solution with Like.

Personally, I just minimize how much I customize PVE and back up /etc. I recently replaced my Intel server with a much newer AMD one and this approach had me back up pretty quickly. No worries about requiring different firmware packages or...

dcsapak replied to the thread Datacenter ACME Accounts Add Customer account field is missing. I think the correct command here would be `pvesh delete /cluster/acme/account/pvecluster`, so the name goes in the path. Yes, on deletion we try to unregister that account. If you delete the file from /etc/pve, this should not break our stack, but...

doubleUS reacted to Darkbotic's post in the thread e1000e eno1: Detected Hardware Unit Hang: with Like.

Just a tip, you only need to add tso off. There's no need to turn off all the others. I've been using this in /etc/network/interfaces for over 2 years with no issues: `post-up ethtool -K eno1 tso off` and `post-up ethtool -K vmbr0 tso off`

tenstareri posted the thread [SOLVED] Can a VM backup be used on a different node? in Proxmox VE: Installation and configuration. Hi, I was wondering, is it possible to use a VM backup as a way to "clone" and spin up new machines when setting up new nodes?

-

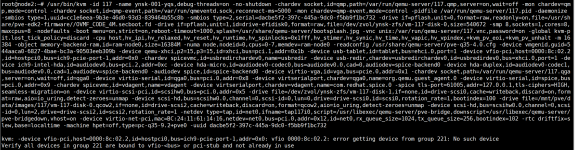

yiyang5188 replied to the thread The VPUG virtual machine of A16 cannot be started. Executing the startup command on its own reports an error.

dakralex replied to the thread Proxmox does not start after attempted update from 7 to 8 to 9. From the apt history, it looks like there was an in-between upgrade of many packages to versions from Proxmox VE 9: Start-Date: 2025-10-05 16:21:44 Commandline: apt upgrade Install: [...] proxmox-kernel-6.14:amd64 (6.14.11-3, automatic)...

dakralex replied to the thread Proxmox 9 HA Issue: Node stuck in "old timestamp- dead" after apt dist-upgrade. Hi! I haven't tried to reproduce it yet. When was the update performed? Was the watchdog already inactive before that (any recent entries in `journalctl -u watchdog-mux`)?

mimpqrf4s replied to the thread Made mistake in corosync.conf; now cannot edit. Your code is great. I would just add a reference to the documentation that (almost) covers this case, and to this script from GitHub, which recommends using a `sleep 30` before restarting anything. I found that very useful.

Whatever posted the thread Correct VM NUMA config on 2 sockets HOST in Proxmox VE: Installation and configuration. Good day. Help me figure out and implement the correct virtual machine configuration for a dual-socket motherboard (PVE 8.4, 6.8.12 kernel). Given: root@pve-node-04840:~# lscpu Architecture: x86_64 CPU op-mode(s): 32-bit, 64-bit Address sizes...

T.Grube posted the thread Proxmox 9 Veeam 12.3.2 Worker start failed in Proxmox VE: Installation and configuration. Hello! We have the problem that Veeam very often fails to start the worker. The problem could be with Veeam or with Proxmox. I then always have to manually abort the worker start in Proxmox. When I manually repeat the backup job, it usually...

waltar reacted to Kingneutron's post in the thread One zfs pool slows down all other zfs pools with Like.

> One of pools is running on simple consumer (dramless) nvme's (3 disk raidz1, one disk missing) Seriously, you're running a consumer-level 3-disk raidz DEGRADED with 1 disk MISSING, and posting about it here?? Fix your pool first. If you want...

waltar replied to the thread Recommendations for Dell R7715 Hardware selection / controller question. I mentioned an nvme config only, as even a mix of 8 nvme and up to 16 sata/sas ports makes no sense to me anymore. If you want performance, use a raid controller, and if you want zfs for its features, don't select it.

blbecek replied to the thread Debian 13 lockup on reboot. So the "Standard VGA" solution seems to do the trick. I would prefer using virtviewer, but at least this is a pretty good workaround for text consoles. I also tried to use `consoleblank=0` as a VM kernel cmdline parameter and on the server with Xeon...

glaeken2 replied to the thread WARNING. Possible data lost after write and/or data corruption. MDRAID with writemostly. Kernel 6.14.11-2. Bug status: CONFIRMED, reproduced. TURNING WRITEMOSTLY OFF FOR MDRAID IS ADVISED ASAP. HIGH DATA LOSS RISK. Experiment type: system clone to VM. Experiment start point: a running system in good condition after disabling writemostly. Experiment...

Oh, I see. Yes, that is because you have the saferemove flag enabled on the storage: https://pve.proxmox.com/pve-docs/chapter-pvesm.html#pvesm_lvm_config The VM should already be functioning fine and not be blocked by that removal, just other VM...

Kingneutron replied to the thread One zfs pool slows down all other zfs pools. > One of pools is running on simple consumer (dramless) nvme's (3 disk raidz1, one disk missing) Seriously, you're running a consumer-level 3-disk raidz DEGRADED with 1 disk MISSING, and posting about it here?? Fix your pool first. If you want...

Kingneutron replied to the thread Backing up my PVE/PBS setup/configuration. https://github.com/kneutron/ansitest/tree/master/proxmox Look into the bkpcrit script, point it to an external disk / NAS, and run it nightly in cron.

Kingneutron replied to the thread Double drive failure on zfs RAID 1. Any suggestions on how to debug? Hope you have a backup. RAID is not