Hi!

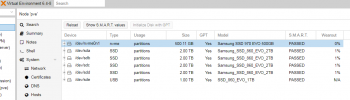

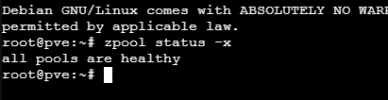

I just noticed that zpool status gives me:

I see that there are no data errors, but do I need to replace the disk? How can I determine that? I just started a pool check (zpool scrub); is there anything else I need to do?

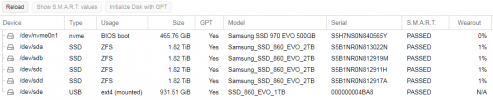

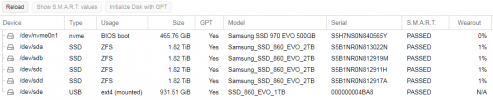

Wearout is only at 1% (the disks are practically idle most of the time):

I'm a little surprised, I must admit. Problems already, after 3 months of very light use? Is this serious (do I need to replace the disk)? So far everything has worked flawlessly, and I intend to keep it that way. The server is mainly used for serving video content (cartoons and movies), hosting a personal webpage, and running a Transmission client. Nothing stressful, really.

Thanks for any answer, really!
