I have a strange issue with PBS, or maybe ZFS, where read IO seems to be capped until a high enough bandwidth write comes in, at which point read speed increases. I can't say for sure what is causing this behavior.

PBS is running in a VM with the SATA controllers/cards/M.2 passed through. The main pool drives are 7200 RPM HDDs, with Optane SSDs for the special vdev.

I've tried changing several ZFS parameters that others said helped with slow reads, such as zfs_vdev_sync_read_max_active, zfs_dirty_data_sync_percent, zfs_checksum_events_per_second, and zfs_slow_io_events_per_second. None of them made a difference for me.
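In case it helps anyone compare settings, here is a minimal sketch for dumping the current values of the parameters listed above. It assumes the usual OpenZFS-on-Linux location `/sys/module/zfs/parameters`; the function name `read_zfs_params` is just made up for this example, and a parameter that is missing on a given ZFS version is reported as not found rather than guessed.

```python
from pathlib import Path

# Parameters named in this post. The sysfs directory below is the usual
# location for OpenZFS on Linux; treat it as an assumption for your system.
PARAMS = [
    "zfs_vdev_sync_read_max_active",
    "zfs_dirty_data_sync_percent",
    "zfs_checksum_events_per_second",
    "zfs_slow_io_events_per_second",
]


def read_zfs_params(base="/sys/module/zfs/parameters", names=PARAMS):
    """Return {name: value-or-None} for each module parameter file."""
    values = {}
    for name in names:
        path = Path(base) / name
        try:
            values[name] = path.read_text().strip()
        except OSError:
            # File missing or unreadable on this system / ZFS version.
            values[name] = None
    return values


if __name__ == "__main__":
    for name, value in read_zfs_params().items():
        shown = value if value is not None else "(not found)"
        print(f"{name} = {shown}")
```

Running it before and after a change makes it easy to confirm that a setting actually took effect inside the VM.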

I've noticed this behavior for weeks. I recently switched my motherboard (I wanted the other motherboard for a different build). Running the verify task again with the new motherboard and no other changes, the read speed increased from 80 MB/s to 120 MB/s, but I am still seeing similar behavior where the read speed increases if a large enough write happens.

In both cases the upper limit seemed to be 160 MB/s, which is still lower than I would expect from 3 striped HDDs. It is also lower than the expected verification speed based on the PBS benchmark, ~200 MB/s (screenshot below).
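To put those numbers in perspective, here is a rough back-of-the-envelope sketch using only the figures from this post. It assumes reads are spread evenly across the three HDDs and ignores whatever the special vdev serves, so the per-disk values are only an approximation; `per_disk_share` is a helper name invented for this example.

```python
def per_disk_share(total_mb_s, disks):
    """Average per-disk rate, assuming an even spread across the stripe."""
    return total_mb_s / disks


DISKS = 3            # striped HDDs in the pool
BENCHMARK_MB_S = 200  # approximate verify speed suggested by the PBS benchmark

# Read rates observed during verify, in MB/s, as described in this post.
observed = {
    "old motherboard, capped": 80,
    "new motherboard, capped": 120,
    "ceiling after large write": 160,
}

for label, rate in observed.items():
    share = per_disk_share(rate, DISKS)
    fraction = rate / BENCHMARK_MB_S
    print(f"{label}: {rate} MB/s total, "
          f"{share:.1f} MB/s per disk, {fraction:.0%} of benchmark")
```

Even at the 160 MB/s ceiling, each disk averages only about 53 MB/s under this even-spread assumption.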

If any other information would be useful let me know. Thanks for any insight you all can offer.

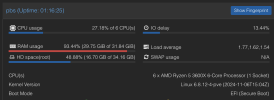

Performance stats (memory is that high only because of ARC usage; increasing memory or decreasing ARC makes no difference):

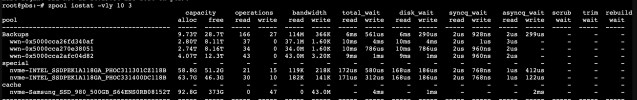

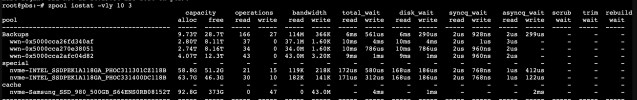

Iostat output during a verify task and incremental backup:

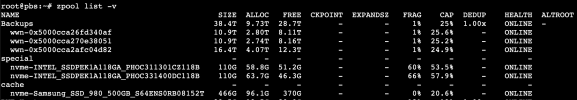

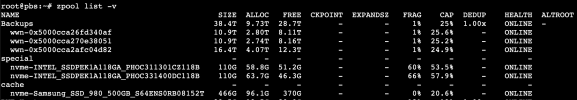

Here is the pool. I don't use mirrors since this is a backup and the data is not critical. The cache was recently added for some testing.

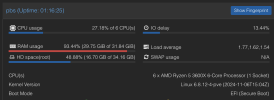

Here you can see that once the write rate cleared a certain threshold, read speed increased to nearly 160 MB/s, versus being capped at ~120 MB/s.

Arc_summary:

Benchmark while verify and backup are running:
