I'm having severe slowness on a VM with Server 2016. I'm not familiar with Proxmox so any advice would help. The VM is running SQL & RemoteApp. There are about 30 users RDPing into this server and I've thrown all the resources I can at it. The server and the programs on the server just crawl. Any tips on how I can improve performance?

Slow Performance on Server 2016

- Thread starter freshbyte_syrah



Try to troubleshoot what exactly is slow: lack of RAM, disk performance, or network speed. Also, try setting the CPU type to host (but it can reset software license activation, such as Windows, etc.).

Also keep in mind that it is harder to migrate VMs between nodes if you are not using kvm64 but have several nodes with different hardware.

I think the slowness is the SQL performance. Our software is SQL-based. I'm just trying to figure out how I can optimize its performance. We initially had their hard drive set to SATA and there weren't as MANY complaints, but as soon as I switched to VirtIO, they're complaining every day.
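If you want to script the CPU-type change on the Proxmox host, a minimal Python sketch could look like this. It only builds the `qm set ... --cpu` command line; the VMID `100` and the helper names are hypothetical, and the choice between `host` and `kvm64` mirrors the migration caveat above. The new CPU type should only take effect after the VM is fully stopped and started again.

```python
import shlex


def choose_cpu_type(mixed_hardware_cluster: bool) -> str:
    """Pick a CPU model for the VM.

    "host" passes the physical CPU's feature set through to the guest, which is
    usually fastest, but live migration to a node with a different CPU can then
    fail. A generic model such as "kvm64" keeps the VM movable.
    """
    return "kvm64" if mixed_hardware_cluster else "host"


def qm_set_cpu_args(vmid: int, cpu_type: str) -> list[str]:
    """Argument list for `qm set <vmid> --cpu <type>` (run on the PVE host)."""
    return ["qm", "set", str(vmid), "--cpu", cpu_type]


if __name__ == "__main__":
    # VMID 100 is a hypothetical example; use the VM's real ID.
    args = qm_set_cpu_args(100, choose_cpu_type(mixed_hardware_cluster=False))
    print(shlex.join(args))
```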

1. What is your disk storage backend ("vms"): ext4, ZFS, LVM...?

2. Which disks are used (SATA, SAS, SSD, NVMe)? What RAID level and RAID controller (cache)?

3. Try disabling "Use tablet for pointer".

4. Post screenshots of the VM's CPU, RAM, disk, and network usage here.

If I remember right, the DC500R has terrible random write performance. A fio benchmark would be interesting to see.

How can you determine it's the DC500R? Sorry, I would just like to know.

Never mind, I found it.

I don't know if it's the DC500R, but it would be logical if you have a workload with lots of small sync writes from the SQL database while using SSDs that aren't made for writes or high IOPS. You should run some benchmarks using fio or something similar to see whether the storage could be the bottleneck.
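One way to imitate "lots of small sync writes" with fio is 4k random writes at queue depth 1 with an fsync after every write. The Python sketch below only assembles that fio command line; the job name, the scratch-file path `/vms/fio-test.bin`, and the size/runtime defaults are assumptions, not values from the thread. Point it at a scratch file (never at a disk holding data), run it while the VM is idle, and delete the file afterwards.

```python
import shlex


def fio_sync_randwrite_args(filename: str, size: str = "1G", runtime_s: int = 60) -> list[str]:
    """fio arguments for 4k random writes at queue depth 1, fsync after every write.

    This roughly imitates a stream of small synchronous database writes.
    """
    return [
        "fio",
        "--name=sync-randwrite",
        f"--filename={filename}",  # scratch file, never a disk holding data
        "--rw=randwrite",
        "--bs=4k",
        "--ioengine=psync",
        "--iodepth=1",
        "--numjobs=1",
        "--fsync=1",               # issue an fsync after each write
        f"--size={size}",
        f"--runtime={runtime_s}",
        "--time_based",            # keep writing for the full runtime
        "--group_reporting",
    ]


if __name__ == "__main__":
    # "/vms/fio-test.bin" is a hypothetical path on the "vms" storage.
    print(shlex.join(fio_sync_randwrite_args("/vms/fio-test.bin")))
```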

I will run the fio benchmarks and bring my results back. I didn't have nearly as many issues when we were on SATA.

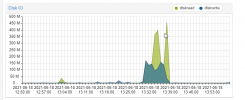

Could you also share some summary screenshots of the host (new-node6)? In particular, the IO delay.

BTW: Throwing in all the resources doesn't always give you the best performance. Assigning (too many) cores in particular can backfire. If I'm seeing correctly, you assigned all 64 cores of the host to the VM. Maybe cut back on these (32 would also work, judging by the CPU usage).

I remember a case from a few years ago where, on a 4-core host, 4 cores were assigned to one of the 3 VMs. The VM was struggling and performance was (very) poor. After changing the cores from 4 to 2, the VM performed well.
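To turn "judging by the CPU usage" into a number, one hypothetical rule of thumb (not something stated in the thread) is to size the VM so that its observed peak load lands near a target utilization. The sketch below assumes you read the peak from the VM's CPU graph; the 37.5% peak and the VMID `100` are illustrative only.

```python
import math


def suggest_vcpus(assigned: int, peak_util: float, target_util: float = 0.75) -> int:
    """Hypothetical rule of thumb for trimming an oversized VM.

    assigned:    vCPUs currently given to the VM
    peak_util:   observed peak CPU usage of the VM, 0.0-1.0
    target_util: how busy the smaller VM may be at that same peak
    """
    busy = assigned * peak_util            # vCPUs' worth of work at peak
    needed = math.ceil(busy / target_util)
    return max(1, min(assigned, needed))   # never below 1 or above the current count


if __name__ == "__main__":
    # Illustrative numbers only: 64 vCPUs peaking at 37.5% -> 32 vCPUs.
    cores = suggest_vcpus(64, 0.375)
    print(cores)                           # 32
    print(f"qm set 100 --cores {cores}")   # VMID 100 is hypothetical
```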


If you can, move this VM disk from ZFS to ext4.

Will this cause any issues with the Windows image?

I will make the change to the cores.

PVE should convert the image without a problem. But you would lose bit rot protection, the ability to use ZFS replication, and so on.

And you didn't post any ZFS configuration. It looks like you are using 4x DC500R with ZFS, but you didn't tell us whether it is a raidz1/raidz2 (bad performance) rather than a striped mirror, or which options are used (ashift, volblocksize, compression, deduplication, atime and so on).

I, for example, always deactivate atime so that not every read creates an (in most cases useless) write.
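A quick way to check a few of those options is `zfs get -H -o property,value atime,compression,dedup <dataset>`, which prints one tab-separated property/value pair per line. The Python sketch below parses that output and flags the settings mentioned here; the sample text is illustrative, not the poster's output, and the function names are hypothetical. Note that atime applies to filesystem datasets; a zvol reports `-` for it.

```python
def parse_zfs_get(output: str) -> dict[str, str]:
    """Parse `zfs get -H -o property,value ...` output (tab-separated pairs)."""
    props: dict[str, str] = {}
    for line in output.splitlines():
        if not line.strip():
            continue
        prop, value = line.split("\t", 1)
        props[prop] = value.strip()
    return props


def review_zfs_props(props: dict[str, str]) -> list[str]:
    """Flag settings discussed in the thread; '-' means 'not applicable'."""
    notes = []
    if props.get("atime") == "on":
        notes.append("atime=on: reads also trigger metadata writes; "
                     "consider `zfs set atime=off <dataset>`")
    if props.get("dedup", "off") not in ("off", "-"):
        notes.append("dedup is enabled: it costs RAM and write performance")
    return notes


if __name__ == "__main__":
    # Illustrative output only, not taken from the poster's system.
    sample = "atime\ton\ncompression\tlz4\ndedup\toff\n"
    for note in review_zfs_props(parse_zfs_get(sample)):
        print(note)
```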

Read it from the "Model" column of your disk screenshot.

Without VMs running, just as a "poor man's benchmark", try the pveperf command from the shell against the "VM" pool.

My Kingston DC500M (960GB) has an fsync performance index of 8710 (ZFS, single disk).
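To compare your pool against that number, you only need the `FSYNCS/SECOND:` line from `pveperf <path>`. A small Python sketch, assuming that line format; the function name is hypothetical, and you could feed it `subprocess.run(["pveperf", "/vms"], capture_output=True, text=True).stdout`, where `/vms` is an assumed mount point of the pool.

```python
import re
from typing import Optional


def fsyncs_per_second(pveperf_output: str) -> Optional[float]:
    """Extract the FSYNCS/SECOND value from `pveperf <path>` output, if present."""
    match = re.search(r"FSYNCS/SECOND:\s*(\d+(?:\.\d+)?)", pveperf_output)
    return float(match.group(1)) if match else None


if __name__ == "__main__":
    # Example line only; 8710 is the DC500M figure quoted above, not a measurement.
    sample = "FSYNCS/SECOND:     8710.00\n"
    print(fsyncs_per_second(sample))  # 8710.0
```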

I hope you have the ZFS pool configured as a RAID10 equivalent (striped mirrors).
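Whether the pool is a RAID10 equivalent shows up in `zpool status` as several top-level `mirror-N` vdevs, while `raidz1-N`/`raidz2-N` indicate parity RAID. The Python sketch below summarizes that from the status text; it is a heuristic with hypothetical names, and a mirrored log or special vdev would also be counted, so check those sections by eye.

```python
import re

# Top-level vdev names as printed by `zpool status`, e.g. "mirror-0", "raidz2-0".
VDEV_RE = re.compile(r"^[ \t]*(mirror|raidz[123])-\d+\b", re.MULTILINE)


def describe_layout(zpool_status: str) -> str:
    """Summarize the redundancy layout found in `zpool status` output."""
    vdevs = VDEV_RE.findall(zpool_status)
    if not vdevs:
        return "no mirror/raidz vdevs (single disk or plain stripe, no redundancy)"
    kinds = sorted(set(vdevs))
    if kinds == ["mirror"]:
        if len(vdevs) > 1:
            return f"striped mirror (RAID10-like), {len(vdevs)} mirror vdevs"
        return "single mirror (RAID1-like)"
    return "contains " + ", ".join(kinds) + " vdev(s)"


if __name__ == "__main__":
    # Illustrative status excerpt, not the poster's pool.
    sample = ("\tvms         ONLINE\n"
              "\t  mirror-0  ONLINE\n\t    sda     ONLINE\n\t    sdb     ONLINE\n"
              "\t  mirror-1  ONLINE\n\t    sdc     ONLINE\n\t    sdd     ONLINE\n")
    print(describe_layout(sample))
```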

Also, the "M" model (DC500M) is rated at 1.3 DWPD over 5 years, while the "R" model has... 0.3!!!

Exactly. I see 4x "SEDC500R192..." (that's the 1.92TB Kingston DC500R) and 1x "SEDC500R480G" (that's the 480GB DC500R).

Yeah, an output of `zpool status` and `zfs get all YourPoolName` would be useful to see if ZFS is badly configured.

Wow, that's bad for a datacenter SSD... so basically my two 100GB system SSDs (10 DWPD; 10€ each) have nearly the same write endurance as all four 1.92TB DC500R combined...
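The comparison follows from capacity × DWPD × days. Per day that is 2 × 100 GB × 10 = 2000 GB for the system SSDs versus 4 × 1920 GB × 0.3 = 2304 GB for the DC500R set. A small Python sketch over the 5-year period, using the DWPD figures as stated in the thread (check the vendor datasheets before relying on them); the helper name is hypothetical.

```python
def tbw(capacity_tb: float, dwpd: float, years: float = 5.0) -> float:
    """Terabytes that may be written over the rating period: capacity x DWPD x days."""
    return capacity_tb * dwpd * 365 * years


if __name__ == "__main__":
    # Figures as stated in the thread, not verified against datasheets.
    system_ssds = 2 * tbw(0.1, 10)     # two 100 GB SSDs at 10 DWPD
    dc500r_pool = 4 * tbw(1.92, 0.3)   # four 1.92 TB DC500R at 0.3 DWPD
    print(f"{system_ssds:.0f} TB vs {dc500r_pool:.0f} TB")  # 3650 TB vs 4205 TB
```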


First, I have to agree: you absolutely need RAID10 for database VMs. Also, instead of VirtIO I would recommend using SCSI with SSD emulation and discard.

That allows TRIM (hey, we want anything extra we can get).

As a quick note, a dirty hack to switch to SCSI (without a bluescreen because of missing drivers) is to simply add a small 1GB disk as a SCSI drive while the machine is running. Then shut it down, remove and delete the dummy disk, detach the old disk and reattach it as SCSI. Windows will then boot with the SCSI drivers loaded.

---

Now I would also do a quick benchmark with something on Windows. AS SSD is good enough to get a rough idea of what's going on on that machine.

To do that, I would stop the databases and anything else running on it, and post 2 screenshots: a 1GB test and a 5GB test on the drive hosting the MSSQL data partition.

If you run multiple disks on that machine, also try IOThread after switching to SCSI.
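The end state of that SCSI switch can be expressed as a single `qm set` call. A minimal Python sketch, assuming the Windows guest already has the VirtIO SCSI driver loaded (for example via the dummy-disk trick) and the old VirtIO disk has already been detached; the VMID `100` and the volume name `vms:vm-100-disk-0` are hypothetical placeholders, and the boot order may also need to point at `scsi0` afterwards.

```python
import shlex


def scsi_disk_args(vmid: int, volume: str, iothread: bool = True) -> list[str]:
    """`qm set` arguments that attach an existing volume as scsi0.

    discard=on lets the guest's TRIM reach the storage, ssd=1 presents the disk
    to Windows as an SSD, and iothread=1 gives the disk its own I/O thread,
    which is why the virtio-scsi-single controller is selected.
    """
    opts = [volume, "discard=on", "ssd=1"]
    if iothread:
        opts.append("iothread=1")
    return ["qm", "set", str(vmid),
            "--scsihw", "virtio-scsi-single",
            "--scsi0", ",".join(opts)]


if __name__ == "__main__":
    # VMID 100 and volume "vms:vm-100-disk-0" are hypothetical placeholders.
    print(shlex.join(scsi_disk_args(100, "vms:vm-100-disk-0")))
```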
