Hi everybody.

I have a cluster with 3 nodes. On Node1 and Node2 the VMs run on a hard disk configured as DRBD storage, and on Node3 the VMs run on local-lvm.

Backups for Node1 and Node2 are stored on a third disk installed in the node, and backups for Node3 are stored on a secondary disk in Node3.

All three nodes are Dell servers with 4 x Intel(R) Xeon(R) CPU E3-1220 v5, 16 GB RAM, and Proxmox 6.3.

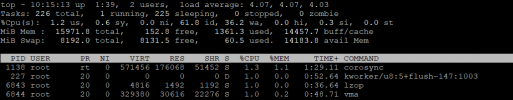

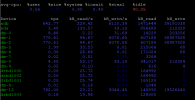

Code:

root@node1:~# pveversion

pve-manager/6.3-2/22f57405 (running kernel: 5.4.73-1-pve)

This is the status of restoring a VM backup of 56.50 GB (vzdump-qemu-100-2021_09_28-06_36_57.vma.lzo) on Node1 to drbdstorage. The Ethernet link used for DRBD runs at 1 Gbit/s.

Code:

restore vma archive: lzop -d -c /media/vms/dump/dump/vzdump-qemu-100-2021_09_28-06_36_57.vma.lzo | vma extract -v -r /var/tmp/vzdumptmp3850.fifo - /var/tmp/vzdumptmp3850

CFG: size: 338 name: qemu-server.conf

DEV: dev_id=1 size: 214748364800 devname: drive-virtio0

CTIME: Tue Sep 28 06:36:58 2021

trying to acquire cfs lock 'storage-drbdstorage' ...

trying to acquire cfs lock 'storage-drbdstorage' ...

new volume ID is 'drbdstorage:vm-100-disk-1'

map 'drive-virtio0' to '/dev/drbd/by-res/vm-100-disk-1/0' (write zeros = 1)

progress 1% (read 2147483648 bytes, duration 17 sec)

.

.

.

.

.

progress 36% (read 77309411328 bytes, duration 19223 sec)

progress 37% (read 79456894976 bytes, duration 19869 sec)

progress 38% (read 81604378624 bytes, duration 20533 sec)

progress 39% (read 83751862272 bytes, duration 21172 sec)

progress 40% (read 85899345920 bytes, duration 21812 sec)

And this is the status of restoring a 56 GB VM backup on Node3 to local-lvm.

Code:

restore vma archive: lzop -d -c /media/Backups//dump/vzdump-qemu-104-2021_09_27-20_27_13.vma.lzo | vma extract -v -r /var/tmp/vzdumptmp27533.fifo - /var/tmp/vzdumptmp27533

CFG: size: 328 name: qemu-server.conf

DEV: dev_id=1 size: 187904819200 devname: drive-virtio0

CTIME: Mon Sep 27 20:27:21 2021

Logical volume "vm-104-disk-0" created.

new volume ID is 'local-lvm:vm-104-disk-0'

map 'drive-virtio0' to '/dev/pve/vm-104-disk-0' (write zeros = 0)

progress 1% (read 1879048192 bytes, duration 5 sec)

.

.

.

.

.

progress 40% (read 75161927680 bytes, duration 18672 sec)

progress 41% (read 77040975872 bytes, duration 19229 sec)

progress 42% (read 78920024064 bytes, duration 19829 sec)

progress 43% (read 80799072256 bytes, duration 20466 sec)

progress 44% (read 82678120448 bytes, duration 20794 sec)

Is this a normal speed? How can I check where the problem is, or how can I get a faster restore?
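To put numbers on the two logs above, here is a minimal Python sketch that parses the last `progress` line of a restore log and derives the average rate and a projected total duration. The names `PROGRESS_RE` and `summarize_restore` are only illustrative. It assumes the `read` counter counts bytes of the virtual disk image processed rather than bytes of the compressed archive, which the 1% line suggests (2147483648 is 1% of the 214748364800-byte device).

```python
import re

# Matches restore progress lines such as:
#   progress 40% (read 85899345920 bytes, duration 21812 sec)
PROGRESS_RE = re.compile(
    r"progress (\d+)% \(read (\d+) bytes, duration (\d+) sec\)"
)


def summarize_restore(log_text):
    """Return (MiB/s, projected total hours) from the last progress line.

    The projection is a plain linear extrapolation and assumes the rate
    stays constant for the rest of the restore.
    """
    matches = PROGRESS_RE.findall(log_text)
    if not matches:
        raise ValueError("no progress lines found")
    percent, read_bytes, seconds = (int(v) for v in matches[-1])
    if percent == 0 or seconds == 0:
        raise ValueError("not enough progress to estimate a rate")
    rate_mib_s = read_bytes / seconds / (1024 * 1024)
    projected_hours = seconds * 100 / percent / 3600
    return rate_mib_s, projected_hours


# Last progress lines copied from the two restore logs above.
node1_log = "progress 40% (read 85899345920 bytes, duration 21812 sec)"
node3_log = "progress 44% (read 82678120448 bytes, duration 20794 sec)"

for name, log in (("node1", node1_log), ("node3", node3_log)):
    rate, hours = summarize_restore(log)
    print(f"{name}: {rate:.2f} MiB/s, about {hours:.1f} h in total")
```

By this estimate both restores average under 4 MiB/s (about 3.76 MiB/s on Node1 and 3.79 MiB/s on Node3), so the DRBD target and the local LVM target are almost equally slow.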

Thanks in advance.
