Hello,

I've been tinkering with Proxmox for a few days and I'm trying to set up a 3-node failover cluster.

I am running 3x Fujitsu RX300 S7 with the following specs:

- 2x Intel(R) Xeon(R) CPU E5-2620

- 192GB RAM ECC RDIMM 1333MHz

- 8x SAS 300GB 2,5" with RAIDZ2

- 1x Dual 10GBit Card - Intel X520-DA2 / Fujitsu D2755 10GBit SFP+ PCIe NIC

(broadcast bond; the nodes are interconnected directly with DACs for cluster and replication traffic)

- 2x GBit Intel Onboard

- 2x GBit Intel PCIe

(bonded via LACP to the Ethernet switch)

- 1x SAS2008 controller flashed to IT mode (firmware version 19)

The RAIDZ2 pool was built over the 8 SAS drives with the guided Proxmox VE 6 installer. All 3 pools are healthy. ZFS ZED is additionally installed and sends notifications in case of a failure.

Proxmox is up to date (pveupdate && pveupgrade, including a reboot for the new kernel). Fencing uses the defaults, with no hardware IPMI watchdog configured, and works as expected.

Nodes are called: pve01, pve02, pve03.

Initially, I have 2 test VMs on the local-zfs storage of pve01:

- win10-64-01.test, ID 100 (guest tools installed from virtio-win-0.1.171.iso, including Network, SCSI, Serial, Guest Tools and Ballooning incl. `blnsrv.exe -i`; ZFS thin provisioned, SCSI disk, cache: Default (No cache), Discard enabled)

- ubn-1804-64-01, ID 101 (Ubuntu 18.04 LTS, no special customization in terms of tools; ZFS thin provisioned, SCSI disk, cache: Default (No cache))

Now to the problem:

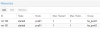

I've set up 2 replication jobs for each of the VMs, one going to pve02 and one going to pve03.

Replication runs without any reported errors.

`zfs list | grep -e 100 -e 101` shows the same sizes on all 3 nodes.

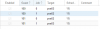
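To repeat the size check without comparing three terminals by eye, the `zfs list` output can be saved once per node and diffed. This is only an illustrative sketch: it assumes each node's output of `zfs list -H -o name,used` was saved to its own file (file names such as `pve01.txt` and the function name `compare_zfs_sizes` are hypothetical), and it filters for `vm-100-`/`vm-101-` volumes rather than using the broader `grep -e 100 -e 101`.

```bash
#!/usr/bin/env bash
# Sketch: compare the name/used columns of the VM volumes across nodes.
# Each argument is a file holding the output of
#   zfs list -H -o name,used
# captured on one node (-H: tab-separated, no header line).
# The first file is the reference; every other file is compared to it.
compare_zfs_sizes() {
    local ref="$1"
    shift
    local other status=0
    for other in "$@"; do
        # Only look at the test VM volumes; sort so line order does not matter.
        if diff <(grep -E 'vm-10[01]-' "$ref" | sort) \
                <(grep -E 'vm-10[01]-' "$other" | sort) >/dev/null; then
            echo "$other: same as $ref"
        else
            echo "$other: differs from $ref"
            status=1
        fi
    done
    # Non-zero if at least one node differs from the reference.
    return "$status"
}
```

For example, `compare_zfs_sizes pve01.txt pve02.txt pve03.txt` prints one line per compared node and returns non-zero if any node differs from the first file.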

HA is configured as follows:

To simulate a failure of pve01, I open the iRMC (out-of-band management console) and run `ifdown bond0 && ifdown bond1` (bond0 is the 2x 1 Gbit LACP trunk, bond1 the 10 Gbit interconnect broadcast bond). When the watchdog then tries to reboot the node, I shut it down via iRMC. After around 3.5 minutes, the HA manager on pve02/pve03 seems to kick in and restarts both VMs on pve02.

After a while, I start pve01 again and let it boot up completely.

The next step would be to let it re-replicate to pve01 and then simulate an offline pve02.

At this point, however, the replication jobs end up in a broken state:

```
root@pve02:~# tail /var/log/pve/replicate/100-1
2019-08-28 18:36:01 100-1: start replication job
2019-08-28 18:36:01 100-1: guest => VM 100, running => 2397745
2019-08-28 18:36:01 100-1: volumes => local-zfs:vm-100-disk-0
2019-08-28 18:36:03 100-1: freeze guest filesystem
2019-08-28 18:36:04 100-1: create snapshot '__replicate_100-1_1567010161__' on local-zfs:vm-100-disk-0
2019-08-28 18:36:04 100-1: thaw guest filesystem
2019-08-28 18:36:06 100-1: full sync 'local-zfs:vm-100-disk-0' (__replicate_100-1_1567010161__)
2019-08-28 18:36:08 100-1: delete previous replication snapshot '__replicate_100-1_1567010161__' on local-zfs:vm-100-disk-0
2019-08-28 18:36:08 100-1: end replication job with error: command 'set -o pipefail && pvesm export local-zfs:vm-100-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_100-1_1567010161__' failed: exit code 1
```

The same happens for the other job, 100-0.
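When several jobs are affected, the last line of each job log is enough to see which ones failed and with which command. A minimal sketch, assuming the logs live in `/var/log/pve/replicate/<jobid>` as in the output above (the function name `last_job_result` is hypothetical); `pvesr status` on the node also lists the state of each job.

```bash
#!/usr/bin/env bash
# Sketch: report the outcome of the last run of every replication job.
# Assumes one log file per job id in /var/log/pve/replicate (path taken
# from the post); another directory can be passed as the first argument.
last_job_result() {
    local dir="${1:-/var/log/pve/replicate}"
    local log job last
    for log in "$dir"/*; do
        [ -f "$log" ] || continue
        job=$(basename "$log")
        last=$(tail -n 1 "$log")
        case "$last" in
            *"end replication job with error: "*)
                # Strip everything up to the error text, keep the failing command.
                echo "$job: ERROR: ${last#*with error: }" ;;
            *)
                echo "$job: no error reported in last line" ;;
        esac
    done
}
```

Running `last_job_result` on pve02 would then print one line per job, e.g. the failing `pvesm export` command for 100-0 and 100-1.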

If I delete the ZFS volumes `vm-100-disk-0` on pve01 and pve03, replication starts working again.
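Since the log reports a full sync, it might be worth checking which `__replicate_` snapshots each node still holds for the volume before deleting anything. A rough sketch, assuming the snapshot naming seen in the log (`<dataset>@__replicate_<jobid>_<timestamp>__`); the helper names `replication_snapshots` and `common_snapshots` are hypothetical, and the input is the output of `zfs list -H -t snapshot -o name` from each node.

```bash
#!/usr/bin/env bash
# Sketch: list and compare Proxmox replication snapshots of one volume.

# Reads `zfs list -H -t snapshot -o name` output on stdin and prints the
# replication snapshot names (the part after '@') for the given volume,
# e.g. vm-100-disk-0. The pool/dataset prefix is not assumed.
replication_snapshots() {
    local vol="$1"
    grep -E "/${vol}@__replicate_" | sed 's/.*@//' | sort
}

# Prints the snapshot names present in both files (one name per line).
common_snapshots() {
    comm -12 <(sort "$1") <(sort "$2")
}
```

For example, `zfs list -H -t snapshot -o name | replication_snapshots vm-100-disk-0 > pve02.snaps` on pve02, the same via `ssh pve03 ...` into `pve03.snaps`, then `common_snapshots pve02.snaps pve03.snaps`. Empty output means the two nodes share no replication snapshot for that volume.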

Is this expected behaviour?

Any ideas?

Thank you very much in advance!

Regards

Nyctophilia
