Hi guys,

I'm fairly new to Proxmox and virtualisation in general. I have set up a few VMs, one of them being my media center, which works absolutely fine with Direct Play.

For transcoding, it definitely isn't:

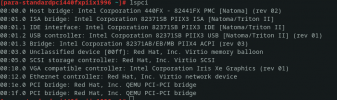

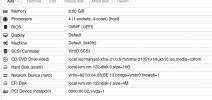

I have a NUC11 with a Core i7-1165G7 and Iris Xe graphics.

I've been trying to figure out how to pass the Intel Iris Xe graphics through to my VM, but I've struggled to find a way to do that.

I've followed this step-by-step guide: https://blog.ktz.me/passthrough-intel-igpu-with-gvt-g-to-a-vm-and-use-it-with-plex/

But I got stuck at the last part, where `ls /sys/bus/pci/devices/0000\:00\:02.0/mdev_supported_types/` returns an empty result. So I'm not sure how to fix it or what to do next. I'm not even sure that guide is relevant to my setup.
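In case it helps anyone reproduce the check, here's a small Python sketch that does the same thing as the `ls` above: it lists whatever mediated-device (mdev) types the kernel exposes for a PCI device. It assumes the iGPU sits at `0000:00:02.0` (the address used in the guide), and `mdev_types` is just a hypothetical helper name I made up. An empty list is the same result as the empty `ls` output, i.e. no GVT-g types are being offered for that device.

```python
from pathlib import Path
from typing import List


def mdev_types(pci_addr: str = "0000:00:02.0",
               sysfs_root: str = "/sys/bus/pci/devices") -> List[str]:
    """Return the mdev type names listed under mdev_supported_types.

    Returns an empty list if the directory is missing or empty, which
    matches the empty `ls` result described above.
    """
    types_dir = Path(sysfs_root) / pci_addr / "mdev_supported_types"
    if not types_dir.is_dir():
        return []
    # Each mdev type is a subdirectory; its name is the type identifier.
    return sorted(p.name for p in types_dir.iterdir() if p.is_dir())


if __name__ == "__main__":
    found = mdev_types()
    if found:
        for name in found:
            print(name)
    else:
        print("No mdev types exposed for 0000:00:02.0")
```

The `sysfs_root` parameter is only there so the function can be pointed at a fake directory tree for testing; on the Proxmox host the default path is the one from the guide.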

Would you guys be able to advise me on what to do to get there?

Happy to provide any information required.

Thanks for the read/help.