I have a 4-node Proxmox 6.3 cluster running Ceph for VM block storage. The underlying disks are NVMe.

Each node has a single-port Mellanox NIC with a 100GbE connection back to a 100GbE switch.

This switch is then connected to an upstream router via a 10Gbps port. (However, I'm not sure the router itself is really capable of routing at 10Gbps.)

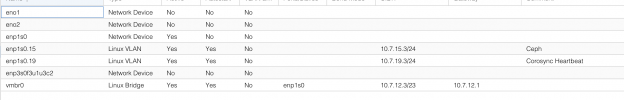

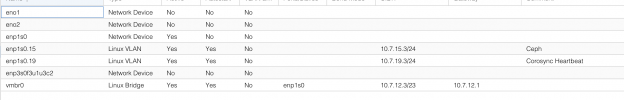

I have three separate VLANs set up in Proxmox:

- VM traffic (vmbr0, going over enp1s0)

- Ceph traffic (enp1s0.15)

- Corosync heartbeat network (enp1s0.19)

My question is about inter-VLAN routing and network contention.

I assume traffic within each VLAN only goes as far as the 100GbE switch, and all is well. So traffic within, say, the Ceph network runs at line rate, as does VM traffic.

However, any traffic that needs to cross between VLANs would have to go through our router and would bottleneck there.
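To make that assumption concrete, here is a minimal Python sketch of how I picture it. The subnets are made up for illustration (they are not my real addressing), and `vlan_of` and `path` are hypothetical helper names. Two addresses in the same VLAN subnet are treated as switched at layer 2; anything else is treated as going through the router over the 10Gbps uplink.

```python
import ipaddress

# Hypothetical subnets, one per VLAN. These are placeholders, not the real addressing.
VLANS = {
    "vm": ipaddress.ip_network("10.0.0.0/24"),
    "ceph": ipaddress.ip_network("10.0.15.0/24"),
    "corosync": ipaddress.ip_network("10.0.19.0/24"),
}


def vlan_of(addr):
    """Return the name of the VLAN whose subnet contains addr, or None."""
    ip = ipaddress.ip_address(addr)
    for name, net in VLANS.items():
        if ip in net:
            return name
    return None


def path(src, dst):
    """Classify a flow as 'switched' (same VLAN) or 'routed' (via the router)."""
    src_vlan = vlan_of(src)
    dst_vlan = vlan_of(dst)
    if src_vlan is not None and src_vlan == dst_vlan:
        return "switched"
    return "routed"


if __name__ == "__main__":
    print(path("10.0.15.11", "10.0.15.12"))  # two addresses on the Ceph VLAN
    print(path("10.0.0.50", "10.0.15.11"))   # VM VLAN to Ceph VLAN
```

This only models the subnet check; it says nothing about which Proxmox or Ceph components actually generate cross-VLAN traffic, which is the part I'm unsure about.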

What traffic in this setup actually needs to pass between the three VLANs? And would it bottleneck?

We could move inter-VLAN routing onto the switches; I just wasn't sure whether it was worth it in this case.

What are people's experiences with Proxmox/Ceph networks, and is Layer 3 switching needed?