Hello all,

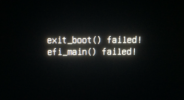

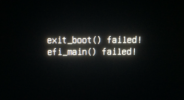

I want to upgrade the Proxmox nodes in our Ceph cluster from 6.4 to 7 and have read the how-to: https://pve.proxmox.com/wiki/Upgrade_from_6.x_to_7.0#Preconditions

In that how-to, I came across the "Preconditions" section and the "Check known upgrade issues" section. Its last point mentions a ZFS boot issue on systems that were installed with BIOS boot before Proxmox VE 6.4 (I think I installed Proxmox before 6.4, but I am not sure). Does that mean I should switch to the Proxmox Boot Tool, as described here? https://pve.proxmox.com/wiki/ZFS:_Switch_Legacy-Boot_to_Proxmox_Boot_Tool

Before making any invasive changes, I tried booting the nodes with UEFI. On 4 of the 5 nodes (Dell 420/430) I could simply switch the firmware to UEFI boot, and they booted with GRUB from ZFS without issues. On those nodes, ls /sys/firmware/efi returns content (so far, so good), and proxmox-boot-tool status outputs:

```
Re-executing '/usr/sbin/proxmox-boot-tool' in new private mount namespace..
System currently booted with uefi
A304-5D92 is configured with: uefi (versions: 5.4.78-1-pve, 5.4.78-2-pve)
A304-B425 is configured with: uefi (versions: 5.4.78-1-pve, 5.4.78-2-pve)
```

On the last node (a Supermicro CSE-826 X9DRi-LN4F+ with a ZFS mirror as boot device), switching the boot mode to UEFI brings up systemd-boot instead of GRUB, and I get the following error:

If I switch back to legacy boot in the BIOS, it boots with GRUB again without issues.
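proxmox-boot-tool status reports two separate things: the mode the system is currently booted with, and the mode each ESP is configured with. The following is a minimal sketch of a hypothetical helper (summarize_boot_status is not a Proxmox command) that pulls both values out of the status output so they can be compared side by side. It assumes the line formats seen in the outputs in this post and does not judge whether a given combination is valid.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Proxmox): extract the current boot mode
# and the distinct mode(s) the ESPs are configured with from the output of
# `proxmox-boot-tool status`, read on stdin.
# Assumes the two line formats seen in this post:
#   System currently booted with <mode>
#   <ESP id> is configured with: <mode> (versions: ...)
summarize_boot_status() {
    local status booted configured
    status="$(cat)"   # read the whole status output from stdin
    booted="$(printf '%s\n' "$status" \
        | sed -n 's/^System currently booted with //p')"
    # Collect the distinct configured modes and join them with spaces.
    configured="$(printf '%s\n' "$status" \
        | sed -n 's/^.* is configured with: \([a-z]*\).*$/\1/p' \
        | sort -u | paste -s -d ' ' -)"
    printf 'booted=%s configured=%s\n' "$booted" "$configured"
}

# Usage on a node:
#   proxmox-boot-tool status | summarize_boot_status
```

Fed the Dell output quoted above, it prints booted=uefi configured=uefi; fed the pve01 output further down, it prints booted=legacy bios configured=uefi.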

Here is some information about the node:

```
root@pve01:~# findmnt /
TARGET SOURCE           FSTYPE OPTIONS
/      rpool/ROOT/pve-1 zfs    rw,relatime,xattr,noacl
```

```
root@pve01:~# ls /sys/firmware/efi
ls: cannot access '/sys/firmware/efi': No such file or directory
```
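The /sys/firmware/efi check used in this post can be wrapped in a small shell function. This is a sketch that relies on the kernel exposing /sys/firmware/efi only when it was booted via UEFI, which matches the outputs above. boot_mode is a hypothetical name, and the directory is a parameter only so the function can be tested against another path.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "uefi" if the EFI sysfs directory exists,
# otherwise "legacy". The kernel only creates /sys/firmware/efi on UEFI boots.
# The optional first argument overrides the directory (useful for testing).
boot_mode() {
    local efi_dir="${1:-/sys/firmware/efi}"
    if [ -d "$efi_dir" ]; then
        echo "uefi"
    else
        echo "legacy"
    fi
}

# Usage on a node:
#   boot_mode
```

On pve01 as shown above, boot_mode would print legacy; on the Dell nodes booted via UEFI it would print uefi.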

```
root@pve01:~# lsblk -o +FSTYPE
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT FSTYPE
sda 8:0 0 119.2G 0 disk zfs_member
├─sda1 8:1 0 1007K 0 part zfs_member
├─sda2 8:2 0 512M 0 part vfat
└─sda3 8:3 0 118.8G 0 part zfs_member
sdb 8:16 0 119.2G 0 disk zfs_member
├─sdb1 8:17 0 1007K 0 part zfs_member
├─sdb2 8:18 0 512M 0 part vfat
└─sdb3 8:19 0 118.8G 0 part zfs_member
sdc 8:32 0 2.7T 0 disk LVM2_member
└─ceph--a22817d1--5482--41e4--b6af--a9c46640cef1-osd--block--a5ded46b--48f4--414f--b403--ea3c4da471d7 253:4 0 2.7T 0 lvm
sdd 8:48 0 2.7T 0 disk LVM2_member
└─ceph--57961904--5e07--415f--ab72--2afef4948820-osd--block--aa3a746a--5075--4c25--9473--d5ab943e4d4d 253:3 0 2.7T 0 lvm
sde 8:64 0 2.7T 0 disk LVM2_member
└─ceph--6f505afe--17d7--4427--8c81--ba4168ef28a2-osd--block--cb8cf2b6--9d64--4220--b2f9--a7904cc4c307 253:2 0 2.7T 0 lvm
sdf 8:80 0 2.7T 0 disk LVM2_member
└─ceph--0b4002cd--4e5e--46e8--84a4--81b75f39f15c-osd--block--18af26a5--e155--4048--85d7--d434739b5527 253:1 0 2.7T 0 lvm
sdg 8:96 0 2.7T 0 disk LVM2_member
└─ceph--06a2a994--7398--47aa--ab84--e29a84bfaec0-osd--block--b8818506--bd58--4268--80ac--65756c60c0c7 253:0 0 2.7T 0 lvm
sdh 8:112 0 447.1G 0 disk LVM2_member
├─ceph--f41fd84f--0e11--4975--8d50--7e72c81cb77e-osd--db--a8b32984--97f0--4cff--b7bc--242d5bfe9b05 253:5 0 55G 0 lvm
├─ceph--f41fd84f--0e11--4975--8d50--7e72c81cb77e-osd--db--5a182387--0f6c--428b--b09f--1cf1cf8fb986 253:6 0 55G 0 lvm
├─ceph--f41fd84f--0e11--4975--8d50--7e72c81cb77e-osd--db--223fd534--89ec--48b3--a759--0daee03ef4b0 253:7 0 55G 0 lvm
├─ceph--f41fd84f--0e11--4975--8d50--7e72c81cb77e-osd--db--f355418d--5c6b--4201--bbf7--4e91b68802b7 253:8 0 55G 0 lvm
├─ceph--f41fd84f--0e11--4975--8d50--7e72c81cb77e-osd--db--47621472--723f--4405--9c50--a40fe93d8217 253:9 0 55G 0 lvm
├─ceph--f41fd84f--0e11--4975--8d50--7e72c81cb77e-osd--db--1619928b--274f--4a00--a797--f25b0fd988ab 253:10 0 55G 0 lvm
├─ceph--f41fd84f--0e11--4975--8d50--7e72c81cb77e-osd--db--097c4cc2--5890--4160--9adf--950b13c0fa16 253:11 0 55G 0 lvm
└─ceph--f41fd84f--0e11--4975--8d50--7e72c81cb77e-osd--db--e912e03b--559f--492e--a4a8--06cd51849b96 253:12 0 60G 0 lvm
sdi 8:128 0 2.7T 0 disk LVM2_member
└─ceph--ec05cecf--f9a3--4c37--9d5d--a84e2bca2373-osd--block--08a1ca9b--01b0--4a2c--bd28--028b8ca371f0 253:13 0 2.7T 0 lvm
sdj 8:144 0 2.7T 0 disk LVM2_member
└─ceph--34d261f7--36e3--4a45--b696--4cc4d0b05c73-osd--block--527bbe1d--edf8--44ff--8a4f--028e5e4f3232 253:14 0 2.7T 0 lvm
sdk 8:160 0 2.7T 0 disk LVM2_member
└─ceph--b37a75c6--2296--4d8e--a027--1e66188003d7-osd--block--4ed79cc2--1df0--4116--8f30--60c55c61a8fa 253:15 0 2.7T 0 lvm
```
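In this layout, the partitions that could serve as ESPs are the two 512M vfat partitions (sda2 and sdb2). A minimal sketch for listing such partitions without reading the tree by eye, assuming lsblk's raw mode (-r, plain space-separated columns without tree glyphs) and no header line (-n). list_vfat_partitions is a hypothetical helper name, and a vfat filesystem alone does not prove that a partition is an ESP.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "NAME FSTYPE" pairs on stdin and print the
# device path of every partition whose filesystem type is vfat.
# Intended input:
#   lsblk -rno NAME,FSTYPE
# Devices without a filesystem (e.g. the Ceph LVs) have an empty second
# field and are skipped.
list_vfat_partitions() {
    awk '$2 == "vfat" { print "/dev/" $1 }'
}

# Usage on a node:
#   lsblk -rno NAME,FSTYPE | list_vfat_partitions
```

For the disks listed above, this would print /dev/sda2 and /dev/sdb2.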

```
root@pve01:~# pveversion
pve-manager/6.4-13/9f411e79 (running kernel: 5.4.128-1-pve)
```

```
root@pve01:~# proxmox-boot-tool status
Re-executing '/usr/sbin/proxmox-boot-tool' in new private mount namespace..
System currently booted with legacy bios
5BFB-8BFB is configured with: uefi (versions: 5.4.78-1-pve, 5.4.78-2-pve)
5BFB-CD50 is configured with: uefi (versions: 5.4.78-1-pve, 5.4.78-2-pve)
```

Two questions:

- Are the 4 Dell nodes, which now boot GRUB/ZFS via UEFI, fine for the upgrade to Proxmox VE 7?

- Do I still have to initialize the vfat partitions, given that proxmox-boot-tool status already reports them as configured with uefi, or is that issue related to something else?

Thanks in advance, any help is appreciated!
